Yesterday I popped along on just an expo pass to London’s ExCeL centre for the Wearable Technology Show. Despite its name, it is not all about wristwatches that check your heart rate. The organisers have recognised the wider implications of things becoming more instrumented, more data flowing and more business opportunities in the Internet of Things industry. I had several reasons to pop along, and there will be a little more on the main one later. I alluded to some big changes in a tweet. I don’t mean to tease, but I am going to anyway.

One of the reasons I went to the show, though, was to see some of the sports tracking wearables, which is more in keeping with the show's title. I was interested both in the physical monitoring, taking it beyond heart rate, and in how the coaching software was shaping up to make sense of the data. I was also interested in anything that helped track the type of movement. Both interests come from my training and teaching in Choi Kwang Do. A lot of the newer body monitoring kit was being built into skin-tight performance clothing. That seems a good idea in general, and for a martial art, where we don't want things on our wrists, getting good training and tuning feedback during cardio and PACE training is going to be useful. There was really only one body movement tracker, aimed at boxing. It was based on an accelerometer, combined with an app that told you how many punches you were throwing, at what rate, and how fast each punch was. It is not out for a while, but it will be interesting to try this version with our more unusual martial arts moves. It is called Corner.
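The post doesn't say how Corner does its counting, so purely as an illustration of the accelerometer principle, here is a minimal Python sketch. It assumes a stream of acceleration magnitudes sampled at a fixed rate, and counts a punch each time the signal rises above a trigger level, only re-arming once it falls back below a lower reset level so one noisy peak is not counted twice. The function name `count_punches`, the threshold values and the sample data are hypothetical. The sketch does not attempt punch speed, which would need the acceleration integrated over time.

```python
from typing import List, Tuple


def count_punches(
    samples: List[float],
    sample_rate_hz: float,
    trigger: float = 20.0,  # hypothetical level (m/s^2) that marks a punch
    reset: float = 12.0,    # signal must drop below this before the next punch counts
) -> Tuple[int, float]:
    """Count punches in a stream of acceleration magnitudes.

    Returns (punch_count, punches_per_minute). The gap between `trigger`
    and `reset` (hysteresis) stops one jittery peak being counted twice.
    """
    count = 0
    armed = True  # ready to register the next punch
    for a in samples:
        if armed and a >= trigger:
            count += 1
            armed = False
        elif not armed and a < reset:
            armed = True
    duration_s = len(samples) / sample_rate_hz
    rate = count / duration_s * 60.0 if duration_s > 0 else 0.0
    return count, rate
```

For example, a ten-sample burst at 10 Hz such as `[9.8, 25.0, 22.0, 10.0, 9.8, 30.0, 11.0, 9.8, 9.8, 9.8]` contains two clear peaks, so it gives two punches in one second, a rate of 120 per minute.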

Another reason to go, simply because it is what I have worked with for years, was the VR and AR side of the show. There were a lot of headsets, both fully immersive ones such as the Rift and Gear VR, and lots of Pepper's ghost heads up displays (not really holograms). There were some interesting uses, such as tracking warehouse goods in a HUD, and projecting onto a surface to avoid the need for glasses at all.

One company I spent a bit of time talking to, and whose demo I took, was vTime.

The preamble was good, the demo was great and I wish them the best of luck. It is an avatar-based 3D chat room where users choose a beautifully rendered scene to sit in and converse, with picture sharing and the like coming soon. It bills itself as a sociable rather than a social network, and it is targeted at mobile first. I kept hearing the Zuckerberg quote about how one day people will just sit around a virtual campfire. Of course I was taken back to 2006, but tried not to get all grumpy and remind them that everything old is new again. The campfire was a key part of the imagery and the experience we had online back then, though then we could get up and walk about in the free-form environment. In fact we still can, and SL (Second Life) had VR support, i.e. two cameras, one for each eye. I still liked this new application, and if people connect and enjoy it, then so be it 🙂 I would dive in, but it was focussed on Gear VR and I only have my iPhone (and a load of things to drop that into to get stereo vision).

The full post this was in, to date it, is here.

Rita J. King was also our embedded storyteller/journalist and wrote Tales from the Fire Pit to explain the rise of the virtual community powered by people, avatars and Second Life. The PDF of the story is here, and should be useful reading for the next wave of virtual environments, headset or otherwise. I would say it is essential reading, in fact 🙂

The Economist had a stand showing a great use of virtual worlds: a reconstruction, from photos and other data, of the Mosul artefacts that have been destroyed in the conflicts in the Middle East. The VR was a little old-fashioned, but the principle and content were good.

It was fun talking to the marker-based AR developers as they showed me things like book covers coming alive. Once again, I had to let them know I knew a little about it, and of course I tried to sell the idea of my books to people. If I had thought about it, I would have had a stand to show sci-fi novels about VR, AR and IoT with Reconfigure and Cont3xt 🙂

I mentioned serendipity in the title. I had tweeted my location as I got to ExCeL, but then did not check Twitter as I was going around the exhibits. When I tweeted that I was leaving, I saw that my colleague from way back, Martin Gale, was at the show, speaking and presenting. It was fantastic to catch up, see how well he is doing and, to quote The Fast Show, say “Ah Ted” multiple times. Thank you for the coffee :). It made a great end to a fantastic day out, a day in which I got to practise a little of what I will be doing in the very near future, around the subjects I will be immersed in. I guess in startup terms someone would call it a pivot. There I go teasing again. Watch this space, if you are interested 🙂


A free offering for World Choi Kwang Do week

This week is, for our martial art, Choi Kwang Do, starting to be known as World CKD week, primarily because March 2nd is the anniversary of Grandmaster Choi founding the art in 1987. The week is themed with the tagline “Science meets Martial Arts”. This is one of the main reasons Choi Kwang Do works for me. In class we learn, practise and teach things based on the reason they work.

I have written about our family’s martial art of choice many times, about my exploration of technology in the art, and about how I arrived at the art via technology and serendipity too. There is also a more formal article about Virtual athletes in my writing portfolio.

All this has led to Choi Kwang Do being a huge part of our family life, and we have made so many good friends through it. There is a bond we all feel in the positive spirit of the art. It showed this weekend as we celebrated the 5th anniversary of BasingstokeCKD with Master Scrimshaw. Our dohjang was full on Saturday with fellow students from Basingstoke, but also some good friends, old and new, from other schools. We had black belt tag grading, colour belt grading, an incredible set of routines to go through in class and then a great social event with food and cakes. It was incredibly uplifting, and an ideal lead into World CKD week too!

To celebrate this World CKD week I have made Cont3xt free to download. It has an awful lot in it, pivotal to the story, that was inspired by Choi Kwang Do as an art and a state of mind. It fits with the Science (Fiction) meets Martial Arts tagline for the week. I am doing this to encourage my fellow practitioners to see the many different ways we can introduce Choi Kwang Do, and as a way of saying thank you to them all, but of course other people will also be able to download and experience the books for free. All my author bios mention Choi Kwang Do.

We pledge Humility and Integrity, amongst other things, in the art, so promoting one’s own work like this could feel a little uncomfortable. However, I really want to share how CKD-inspired elements fit into a science fiction techno thriller in a very positive way. It was the “just get on with it”, unbreakable spirit that we learn that got me to write these two books in the first place.

As Cont3xt is the follow-up book, I have also made Reconfigure free for the week. The martial arts arrive in Cont3xt, though not in the way you might think to start with. In Reconfigure (book 1) Roisin has no such skills, but that book is free too for completeness.

I hope a few people get a chance to take a look, and maybe even leave a few review stars on Amazon. I would also love for someone who doesn’t do CKD yet to read Cont3xt, look up the art, find their nearest school and start to train. It’s a long shot, but every person in the World is a potential student to join us, have some fun and learn something really useful about themselves.

Choi Kwang Do is practical self-defence, but we aim never to need to use it, and we also don’t fight and hurt our fellow students via competition and sparring. I believe the quote is “It is better to be a warrior in a garden than a gardener in a war.”

So there we have it: the books are available free on Amazon, to be used in whatever way works for whoever needs them. Cont3xt and Reconfigure here.

Pil Seung! (Certain victory)

Lucky 7 years – Feeding Edge birthday

Wow. It is seven years since I started Feeding Edge Ltd. That is quite a long while, isn’t it? The past year has been a more difficult one, with less work in the pipeline for most of it. It has meant I have had to take stock and look to do other things whilst the World catches up. It does seem strange, given the dawn of the new wave of Virtual Reality and Augmented Reality, that I have not managed to find the right people to engage my expertise and background in both regular technology and virtual worlds. In part that was because I was focussing on one major contract, and when that dried up suddenly there was nowhere to go. It has meant starting from zero again to build up and sell who I am and what I do.

My other startup work has always been ticking along under the covers, as we try to work the system to find the right person with the right vision to fund what we have in mind. It is a big and glorious project, but it all takes time. Lots of “no”, “yes, but” and even a few “let's do it”, followed by “oh, hang on, can't now, other stuff has come up”.

On the summer holiday, while having a good long think about whether to give this all up and try to find a regular position back in corporate life, I was hit with a flash of inspiration for the science fiction concept. It was so obvious that I just had to give it a go. That is not to say I would not accept a well-paid job with slightly more structure to help pay my way. However, the books flowed out of me. It was an incredibly exciting end to the year: learning how to write and structure Reconfigure, how to package and build the ebook and the print version, and how to release it and then try to promote it. I have learned so much doing it that helps me personally, helps my business and will also help in any consulting work I do in the future. I realised too that Reconfigure and Cont3xt are like a CV for me. They represent the state of the virtual world, virtual reality, augmented reality and Internet of Things industry, combined with the coding and use of game tech that comes directly from my experiences, extrapolated for the purpose of storytelling.

Write what you know, and that appears to be the future, in this case the near future.

This year I have also been continuing my journey in Choi Kwang Do, this time with a black suit on as a head instructor. It has led me to give talks to schools on science and why it is important, with Choi Kwang Do as a hook for them. I am constantly trying to evolve as a teacher and a student. Once again the reflective nature of the art was woven into the second book, Cont3xt. I did not brand any of the martial arts action in the book as Choi Kwang Do, as that might misrepresent the art and I don’t want to do that. It did, though, influence the start of the story with its more reflective elements. Later on a degree of poetic licence kicked in, but the feeling of performing the moves is very real.

I have continued my pursuit of the unusual throughout the year. The books, as a product, provide a vehicle to explore ideas, rather as the Feeding Edge logo has in the past.

I still really like my book-branded Lambo in Forza 6, demonstrating the concept of digital in-world product placement.

If you have read the books (and if not, why not? They are only 99p), you will know that Roisin likes Marmite. Why not? I like Marmite; again, write what you know. It became a vehicle and an ongoing thread in the stories, and even a bit of a calling card. It is a real-world brand, so that can be tricky, but I think I use it in a positive way, as well as showing that not everyone is a fan. So it is just another real-world hook to make the science fiction elements believable. I was really pleased, then, when I saw that Marmite offered a print-your-own-label customisation. It is print-on-demand Marmite, just as my books are print on demand. It uses the web and the internet to accept the order and then there is physical delivery. I know it's a bit meta, but that's the same pattern Roisin uses, just the physical movement of things is a little more quirky 🙂

I have another two jars on the way. One for Reconfigure and one for Roisin herself.

I am sure she will change her own Twitter icon from the regular jar to one of these later, as @axelweight. Yes, she does have a Twitter account; she had to, otherwise she would not have been able to accidentally tweet “ls -l” and get introduced to the World-changing device @RayKonfigure, would she?

All this interweaving of tech and experience, in this case related to the books, is what I do and have always done. I hope my ideas are inspirational to some, and one day obvious to others. I will keep trying to do the right thing, be positive and share as much as possible.

I am available to talk through any opportunities you have, anytime. epredator at feedingedge.co.uk or @epredator

Finally, last but not least, I have to say a huge thank you to my wife Jan, @elemming. She carries the pressure of the corporate role, one that she enjoys, but pressure nonetheless. She is the major breadwinner. You can imagine how many 99p books you have to sell to make any money to pay for anything. She puts up with the downs whilst we wait for the ups. Those ups will re-emerge; this year has shown that to me. No matter how bleak it looks, something happens to offer hope. I have some new projects in the pipeline, mostly speculative, but with all these crazy ideas buzzing around something will pop one day.

As we say in Choi Kwang Do – Pil Seung! which means certain victory. Happy lucky 7th birthday Feeding Edge 🙂

It’s alive – Cont3xt – The follow up to Reconfigure

On Friday I pressed the publish button on Cont3xt. It whisked its way onto Amazon and through the KDP Select processes. The text box suggested it might be 72 hours before it was live; in reality it was only about 2 hours, followed by a few hours of links getting sorted across the stores. I was really planning to release it today, but, well, it’s there and live.

I did of course have to mention it in a few tweets, but I am not intending to go overboard. I recorded a new promo video and played around with some of YouTube's card features to provide links. I am also putting up a director's cut version. The version here is a 3-minute promo; the other version is 10 minutes, explaining the current state of VR and AR activity in the real industry.

As you can see I am continuing the 0.99 price point. I hope that encourages a few more readers to take a punt and then get immersed in Roisin’s world.

Cont3xt is full of VR, AR and her new Blended Reality kit. It has some new locations and even some martial arts fight scenes. Peril, excitement and adventure, with a load of real-world and future technology. What's not to like?

I hope you give it a download and get to enjoy it as much as everyone else who has read it seems to.

This next stage in the journey has been incredibly interesting and I will share some of that later. For now I just cast the book out there to see whether people will be interested now there is the start of a series 🙂

Microsoft Minecraft, Space and Semantic Paint

It is interesting looking for and spotting patterns and trends, and extrapolating the future. Sometimes things get focus because they are mentioned a lot on multiple channels, and sometimes those things converge. At the moment it seems that Microsoft are doing all the right things in the emerging tech, game technology and general interest spaces.

First, there has been the move by Microsoft to make Minecraft even easier to use in schools.

They are of course following on from, and officially backing, the sort of approach the community has already taken. However, a major endorsement and drive to use things like Minecraft in education is fantastic news. The site is education.minecraft.net.

And just to resort to type again, this is, as the video says, not about goofing around in a video game. This is about learning maths, history, how to communicate online. These are real metaverse tools that enable rich immersive opportunities, on a platform that kids already know and love. Why provide them with a not very exciting online maths test when they can use multiple skills in an online environment together?

Microsoft have also supported the high-end sciences, announcing a team-up with NASA to use the blended/mixed/augmented reality headset Hololens to help in the exploration of Mars. This uses shared-experience telepresence, bringing Mars to the room, desk or wall that several people are sharing in a physical space. Hololens and the next wave of AR are looking very exciting and, very importantly, they take an inclusive approach to the content. Admittedly each person needs a headset, but because the physical space is shared, each person can see the same relative view of the data and experience as everyone else. They don’t have to, of course :), there is no reason why the displays could not show different things in context for each user. Standard VR, however, is an immersive experience without much awareness of others. A very important point, I feel.

Microsoft have also published a paper and video about using depth scanning and live understanding and labelling of real world objects. Something that things like Google Tango will be approaching.

Slightly better than my 2008 attempt (had to re-use this picture again of the Nokia N95)

The real and virtual are becoming completely linked now. Showing how the physical universe is actually another plane alongside all the other virtual and contextual metaverses.

It is all linked and not about isolation, but understanding and sharing of information, stories and facts.

Untethering Humans, goodbye screens

We are on the cusp of a huge change in how we as humans interact with one another, with the world and with the things we create for one another. A bold statement, but one that stands up, I believe, by following some historical developments in technology and social and work related change.

The change involves all the great terms, Augmented Reality, Virtual Reality, Blended Reality and the metaverse. It is a change that has a major feature, one of untethering, or unshackling us as human beings from a fixed place or a fixed view of the world.

Here I am being untethered from the world in a freefall parachute experience, whilst also being on TV 🙂

All the great revolutions in human endeavour have involved either transporting us via our imagination to another place or concept, books, film, plays etc. or transporting us physically to another place, the wheel, steam trains, flight. Even modern telecommunications fit into that bracket. The telephone or the video link transport us to a shared virtual place with other people.

Virtual worlds are, as I may have mentioned a few times before, ways to enhance the experience of humans interacting with other humans and with interesting concepts and ideas. That experience, up to now, has been a tethered one. Over the last few decades we have become reliant on rectangular screens: windows on the world, showing us text, images, video and virtual environments. Those screens have evolved. We have had large, bulky cathode ray tubes, LED, plasma, OLED and various flat wall projectors. Yet the screen has always remained a frame, a fixed size, tethering us to a tunnel-vision version of the world. The screen is a funnel through which elements are directed at us. We started the untethering process with wi-fi and mobile communications. Laptops, tablets and smartphones gave us the ability to take that funnel, a focused view of a world, with us.

More recent developments have led to VR headsets, an attempt to provide an experience that completely immerses us by providing a single screen for each eye. It is a specific medium for many yet-to-be-invented experiences. It does, though, tether us further. It removes the world. That is not to say the Oculus Rift, Morpheus and HTC Vive are not important steps, but they are half the story of untethering the human race. Forget the bulk and the weight; we are good at making things smaller and lighter, as we have seen with the mobile telephone. The pure injection of something into our eyes and ears via a blinkering system feels, and is, more tethering. It is good at the second affordance, transporting us to places with others, and that is where virtual worlds and VR headsets naturally and obviously co-exist.

The real revolution comes from full blended reality and realities. That plural is important. We have had magic lens and magic mirror Augmented Reality for a while: marker-based and markerless ways to use one of the screens we carry around, or have fixed in our living rooms, to show us digital representations of things placed in our environment. They are always fun. However, they are almost always just another screen within our screens. Being able to see, feel and hear things in our physical environment, wherever we are in the world, and have them form part of that environment truly untethers us.

Augmented Reality is not new, of course. We, as in the tech community, have been tinkering with it for years. Even this mini example on my old Nokia N95 back in 2008 starts to hint at the direction of travel.

The devices used to do this are obviously starting at a basic level, though we have the AR headsets of Microsoft Hololens and the Google-backed Magic Leap to start pushing the boundaries. They will not be the final result of this massive change. With an Internet of Things world, with the physical world instrumented and producing data, and with large cloud servers offering compute power at the end of a wireless connection to analyse that data and let us visualise and interact with things in our physical environment, we have a lot to discover and to explore.

I mentioned realities, not just reality. There is no reason to only have augmentation into a physical world. After all, if you are immersed in a game environment or a virtual world you may actually choose, because you can, to shut out the world, to draw the digital curtains and explore. However, just as when you are engaged in any activity anywhere, things come to you in context. You need to be able to interact with other environments from whichever one you happen to be in.

Take an example using Microsoft Hololens, Minecraft and Skype. In today's world you would have Minecraft on your laptop/console/phone, digging around, building and sharing the space with others. A Skype call comes in from someone you want to talk to. You switch windows away from Minecraft and focus on Skype. It is all very tethered. In a blended reality, as Hololens has shown, you can have Minecraft on the rug in front of you and Skype hanging on the wall next to the real clock: things and data placed in the physical environment in a way that works for you and for them. However, you may want to be more totally immersed in Minecraft and go full VR. If something can make a small hole in the real world for the experience, then it can surely make an all-encompassing hole, providing you with only Minecraft. Yet if it can place Skype on your real wall, then it can also place it on your virtual walls and bring that along with you.

This is very much a combination that is going to happen. It is not binary to be either in VR or not, in AR or not. It is either, both or neither.

It is noticeable that Facebook, who bought heavily into Oculus Rift, purchased Surreal Vision last month. Surreal Vision specialises in using instrumentation and scanning kit to make sense of the physical world and place digital data in that world. Up until now Oculus Rift, which has really led the VR charge since its Kickstarter (yes, I backed that one!), has been focussed on the blinkered version of VR. This purchase shows the intent to go for a blended approach. Obviously this is needed, as otherwise Magic Leap and Hololens will quickly eat into the Rift's place in the world.

So three of the world's largest companies, Facebook, Google and Microsoft, have significant plays in blended reality and the “face race”, as it is sometimes called, to get headsets on us. Sony have Project Morpheus, which is generally just VR, yet Sony have had AR applications for many years with PS Move.

Here is Predlet 2.0 enjoying the AR Eyepet experience back in 2010 (yes five years ago !)

So here it is. We are getting increasingly accurate ways to map the world into data, we have a world producing IoT data streams, we have ever more ubiquitous networks, and we have devices that know where they are and what direction they are facing. We have high-power backend servers that can make sense of our speech and of context. On top of that we have free-roaming devices to feed and overlay information to us; yes, they are a little bit bulky and clunky, but that will change. We are almost completely untethered. Wherever we are, we can experience whatever we need or want, and in the future we can blend that with more than one experience. We can introduce others to our point of view or keep our point of view private. We can fully immerse, or just augment, or augment our full immersion. We can also make the virtual real with 3D printing!

That is truly exciting and amazing isn’t it?

It makes this sort of thing in Second Life seem an age away, but it is the underpinning for me and many others. Even in this old picture of the initial Wimbledon build in 2006 there is a cube hovering at the back. It is one of Jessica Qin’s virtual world virtual reality cubes.

That cube and other things like it provided an inspiration for this sort of multiple-level augmentation of reality. It was constrained by the tethered screen but was, and still is, remarkably influential.

Here is my Second Life avatar, with my view of a virtual world inside a 3D effect that provides a curved 3D view, not just a picture. It is as if the avatar is wearing a virtual Oculus Rift, if that helps get the idea across.

This is from 2008 by way of a quick example of the fact even then it could work.

So lets get blending then 🙂

Amiga kickstarter book, breaking mirror worlds & VR

A few days ago my copy of the book “Commodore Amiga: a visual Compendium”, which I backed on Kickstarter, arrived. The book is by Sam Dyer through BitmapBooks. It came with a load of extra goodies from my backing, and my name, along with those of my fellow backers, is vanity printed in the appendix. The only slight problem was that, unlike on all the other Kickstarter campaigns I have backed, I wasn’t “allowed” a credit as epredator, as names that were not normal names made the list look untidy. That is the author's choice, of course 🙂

My computer-owning history went ZX81, Commodore 64, then Amiga 500 (and later 1200). The Amiga came along in 1987 and became my main machine for most of my polytechnic/university time. It caused me to get an overdraft for the first time, to buy an external hard drive for a piece of work I was doing (that, and to play the later Cinemaware games that needed two floppy disk drives to work).

It was the machine on which I coded my final year project, which was a mix of hardware and software but also had to work on the much larger and more expensive Apollo computers we had.

It is the machine I spent hours at, with sellotaped-together graph paper, planning my SimCity builds and mapping Bard's Tale dungeons.

It is also the machine on which I first experienced proper network gaming, with a null modem cable and F/A-18 flight simulator. Not only was that my first proper LAN party gaming, but it forged the idea that machines do not have to have a consistent shared view of the world just because they are connected. The F/A-18 simulator let my good friend Wal and me fly around shooting at one another in a shared digital space. It was also the early days of having a printer and being able to do “desktop publishing”, aka DTP. I even produced a poster for our little event.

When we played we had different terrain packs running on each Amiga, as we had different versions of the game. There was no server; this was truly peer to peer. The terrain was local to each Amiga, but our positions relative to one another in that space were shared. Each machine did its own collision detection. It meant that if I saw mountains I needed to avoid them, yet on the other machine that same space might be flat desert. We all perceive reality differently anyway, but here we were forced to perceive and act according to whatever the digital model threw at us. In practice we kept to the sky, and forcing your opponent into their own scenery was considered unsporting (though occasionally funny and much needed).
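Purely to illustrate the arrangement described above, and not how the F/A-18 code actually worked, here is a minimal Python sketch: each peer keeps its terrain to itself, only aircraft positions cross the link, and each peer checks collisions against its own terrain. The `Peer` class, the grid height map and the message shape are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Position = Tuple[float, float, float]  # x, y, altitude


@dataclass
class Peer:
    name: str
    terrain: Dict[Tuple[int, int], float]  # local only: grid cell -> ground height
    position: Position = (0.0, 0.0, 0.0)
    remote_positions: Dict[str, Position] = field(default_factory=dict)

    def ground_height(self, x: float, y: float) -> float:
        # Cells missing from this peer's terrain are treated as flat ground at 0.
        return self.terrain.get((int(x), int(y)), 0.0)

    def crashed(self, pos: Position) -> bool:
        # Collision is checked only against THIS peer's terrain, never the other's.
        x, y, alt = pos
        return alt <= self.ground_height(x, y)

    def state_message(self) -> Tuple[str, Position]:
        # The only data sent over the link: who you are and where you are.
        return (self.name, self.position)

    def receive(self, message: Tuple[str, Position]) -> None:
        name, pos = message
        self.remote_positions[name] = pos
```

Feeding the same shared position into both peers shows the effect: at 500 units of altitude over cell (5, 5), the machine with a 1000-unit mountain there sees a crash, while the machine with empty desert terrain sees open sky.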

This set the precedent for me that, whilst mirror worlds (virtual worlds that attempt to be 100% like a real place) have a reason to exist, we do not have to play by the same physical rules of time and space in virtual environments.

Other things of note about the Amiga? Well, I coded as predator on the Commodore 64 and that name moved across to the Amiga too. The e was a later addition on the front, but the principles are the same.

My wife also discovered gaming on my Amiga, getting completely wrapped up and in the zone on Sim City and realising it was 4am. Later it would be Lemmings that caught her attention, hence she is now elemming on Twitter.

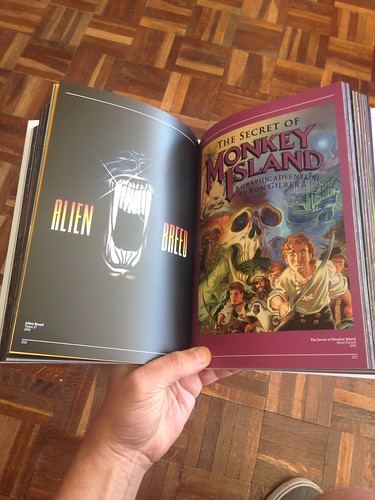

The book is full of classic images, nearly all of which trigger a memory that is more than just “yes, I recognise that picture”.

Games like Alien Breed (a Gauntlet-like top-down shooter) and The Secret of Monkey Island (a classic, humorous point-and-click adventure) each racked up considerable hours of entertainment for very different reasons.

Whilst fondly reminiscing about things that shape how I think and work today, I was also in current and future mode. Right next to it on the table was my copy of Games(TM).

As I tweeted at the time My life history in 1 picture #nearly #amiga #vr #metaverse.

When we put on a headset, a total immersion one, we get a view of a world that is instantly believable. Something fed directly to our eyes and linked to the direction we are looking becomes a convincing reality. In a shared virtual world we will assume that we are all seeing the same thing. That does not have to be the case, as with the F/A-18 example. We can have different experiences yet share the same world. To help think about that, consider the game Battleships. Each player has the same map, the same relative grid references on a piece of paper or on a plastic peg board. Yet on that map you can only see your own boats and any information you have gained through playing. When considering a mirror world or a virtual world build it can be harder to keep this in mind. Yet many games and environments already have a little dollop of this behaviour, with personal displays of health, ammo, speed etc. in a Heads Up Display. Those HUDs are an augmented reality display in a virtual world.
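The Battleships idea maps neatly onto a data structure. As a minimal Python sketch, built around a hypothetical `SharedGrid` class: everyone shares one coordinate system, but each player's `view` only returns their own ships plus whatever their shots have revealed, much like a personal HUD over a common world.

```python
from typing import Dict, Set, Tuple

Cell = Tuple[int, int]


class SharedGrid:
    """One shared coordinate system; what each player sees is filtered."""

    def __init__(self) -> None:
        self.ships: Dict[str, Set[Cell]] = {}          # player -> cells holding their ships
        self.shots: Dict[str, Dict[Cell, bool]] = {}   # player -> {cell fired at: hit?}

    def place(self, player: str, cells: Set[Cell]) -> None:
        self.ships[player] = set(cells)
        self.shots.setdefault(player, {})

    def fire(self, player: str, target: str, cell: Cell) -> bool:
        # The shot uses the shared grid reference; only the shooter learns the result.
        hit = cell in self.ships[target]
        self.shots[player][cell] = hit
        return hit

    def view(self, player: str) -> Dict[str, object]:
        # A personal view of the shared world: own ships plus knowledge gained so far.
        return {
            "my_ships": set(self.ships[player]),
            "known": dict(self.shots.get(player, {})),
        }
```

Both players fire at the same grid references, yet each call to `view` returns a different picture of the same shared world.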

When we now consider blended-view headsets like the HoloLens and the MergeVR, we are in effect taking the real world as the server. It is a fixed environment. We then place 3D overlays in that world to enhance what we see, convincing the viewer that the digital stuff is real.

Unlike the F/A-18 terrain, the real world is there for each person. If there is a table in the middle of the room, even if you make it look as if it is not there for a headset wearer, with object removal and clever visuals, they will still trip over it. However, the other way around can make for an interesting dynamic: headset wearers made to think there are obstacles in their way that they have to move around, but different ones for each headset wearer. Just a little thought experiment in perception. I didn’t even throw in anything about 3D printers actually making the new obstacles in the real world. That’s a bit much for a Monday morning.

Anyway, the Amiga book is great. It was a fantastic period in games and in home technology, but we have many more exciting times coming.

A great week for science and tech, games, 3d printing and AR

There is always something going on in science and emerging technology. However, some weeks just bring a bumper bundle of interesting things all at once. Here in the UK the biggest event had to be the near-total eclipse of the Sun. We had some great coverage on the TV, with Stargazing Live sending a plane up over the Faroe Islands to capture the total eclipse. I was all armed and ready with a homemade pinhole camera.

This turned out great, but unfortunately it was quite overcast here so it was of little use as a camera. I also spent the eclipse at Predlet 2.0’s celebration assembly. They had the eclipse on the big screen for all the primary school kids to see. Whilst we had the lights off in the hall it did not get totally dark, but it did get a bit chilly. It was great that the school keyed into this major event that demonstrates the motion of the planets. So, rather like the last one in 1999, I can certainly say I will remember where I was and what we were doing (a conversation I had with @asanyfuleno on Twitter and Facebook).

This brings me on to technological change and the trajectory we are on. In 1999 I was in IBM Hursley with my fellow Interactive Media Centre crew, a mix of designers, producers and techies and no suits. It was still the early days of the web and we were building all sorts of things for all sorts of clients. During that eclipse in particular we were doing more work for Vauxhall cars. We downed tools briefly to look out across Hursley Park and watch the dusk settle in and flocks of birds head to roost, thinking it was night.

It does not seem that long ago but… it is 16 years. When we were building those quite advanced websites Amazon was just starting, Flickr was 6 years away, Twitter about 7 years away, Facebook a mere 5 (but with a long lead time) and we were only on Grand Theft Auto II, still a top-down Pac-Man clone. We were connected to lots of our colleagues on instant messaging, but general communications were phone, SMS and of course email. So we were not tweeting and sharing pictures, or, as people now do, live streaming on Meerkat. Many people were not internet banking; trust in communications and computers was not high. We were pre dot-com boom and bust too. Not to mention that no one really had much internet access out and about or at home. Certainly no Wi-Fi routers! We were all enthralled by the still excellent Matrix movie. The phone in that, the slide-down, communicator-style Nokia, became one of the iconic images of the decade.

NB. As I posted this I saw this wonderful LEGO remake of the lobby scene, so I just had to add it to this post 🙂

It was a wild time of innovation, and one many of us remember fondly I think. People tended to leave us alone as we brought in money doing things no managers or career vultures knew to jump on. So that eclipse reminds me of the time I set off on a path of trying to be in that zone all the time. Back then I was getting my first samples from a company that made 3D printers, as I was amazed at the principle, and I was pondering what we could do with designers who knew 3D and this emerging tech. We were also busy playing Quake and Unreal in shared virtual worlds across the LAN in our downtime, so I was already forming my thoughts on our connection to one another through these environments. These are experiences I still share today, in a newer high-tech world where the patterns are repeating themselves, but better and faster.

That leads me to another movie reference, in the spirit of staying in this zone: this footage of a new type of Terminator T-1000 style 3D manufacturing. 3D printers may not be mainstream as such, but many more people get the concept of additive manufacture: laying down layer after layer of material such as plastic. It is the same idea as the coil pots we made out of snakes of rolled clay at school. A newer form of 3D printing went a little viral on the inter webs this week from carbon3d.com. This exciting development pulls an object out of a resin. It is really the same layering principle, but done in a much more sophisticated way. CLIP (Continuous Liquid Interface Production) balances exposing the resin to oxygen or to UV light. Oxygen keeps the resin liquid (hence it is left behind), while targeted UV light causes it to become solid through polymerization. Other liquid-based processes fire lasers into a resin. This one, though, slowly draws the object out of the resin, giving it a slightly more ethereal or sci-fi look. It is also very quick in comparison to other methods. Whilst the video runs faster than actual speed, it is still a matter of minutes rather than hours to create objects.

Another video doing the rounds that shows some interesting future developments is one from Google-funded Magic Leap. This is a blended reality/augmented reality company. We already have Microsoft moving into the space with HoloLens. Many of Magic Leap’s announcements have not been as clearly defined as one might hope. There is some magic coming and it is a leap. Microsoft of course had a great pre-release of HoloLens: some impressive video, but also some equally impressive testimonials and articles from journalists and bloggers who got to experience the alpha kit. Microsoft’s video appeared to be a mock-up, but a fairly believable one.

Magic Leap were set to do a TED talk but apparently pulled out at the last minute and this video appeared instead.

It got a lot of people excited, which is the point, but it seems even more of a mock-up than any of the others. It is very well done, as the Lord of the Rings FX company Weta Workshop have a joint credit. The technology is clearly coming. I don’t think we are there yet in understanding how to get that sort of precise registration and overlay. We will, and one day it may look like this video.

Of course it’s not just the tech but the design that has to keep up. If you are designing a game that has aliens coming out of the ceiling, it will have a lot less impact if you try to play outside or in an atrium with a massive vaulted ceiling. The game has to understand not just where you are and what the physical space is like, but how to use that space. Think about a blended reality board game, or an actual board game for that matter. The physical objects to play Risk, Monopoly etc. require a large flat surface, usually a table. You clear the table of obstructions, set up and play. A projected board game, though, could be done on any surface: Monopoly on the wall. It could even remove or project over things hung on the wall, obscure lights etc. It relies on a degree of focus in one place. A fast-moving shooting game where you walk around or look around will be reading the environment, but the game design has to adjust what it throws at you so that it continues to make sense. We already have AR games where you look for ghosts and creatures that just float around. They are interesting but not engaging enough. Full VR doesn’t have this problem, as it replaces the entire world with a new view. Even then there are lots of unanswered design questions: how stories are told, cut scenes, attracting attention, user interfaces, reducing motion sickness etc. Blending with a physical world, where that world could be anywhere or anything, is going to take a lot more early adopter suffering and a number of false starts and dead ends.

It can of course combine with rapid 3D printing, creating new things in the real world that fit with the game or AR/BR experience. Yes, that’s more complexity, more things to try and figure out. It is why it is such a rich and vibrant subject.
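To make the board game point concrete, here is a minimal, hypothetical Python sketch of the kind of decision a blended reality game might make once some room-scanning step has found the flat surfaces around you. The `Surface` type and `pick_play_surface` function are invented for illustration; real headsets expose their own (different) scene-understanding data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Surface:
    """A flat surface found by some room-scanning step (hypothetical input)."""

    name: str
    width_m: float
    depth_m: float
    horizontal: bool  # a table top rather than a wall


def fits(surface: Surface, board_w: float, board_d: float) -> bool:
    """True if the board fits, allowing it to be rotated by 90 degrees."""
    return (surface.width_m >= board_w and surface.depth_m >= board_d) or (
        surface.width_m >= board_d and surface.depth_m >= board_w
    )


def pick_play_surface(
    surfaces: list[Surface], board_w: float, board_d: float, need_horizontal: bool = True
) -> Optional[Surface]:
    """Choose the smallest surface that can hold the board, or None if nothing fits."""
    candidates = [
        s for s in surfaces
        if fits(s, board_w, board_d) and (s.horizontal or not need_horizontal)
    ]
    if not candidates:
        return None  # the game has to adapt its design rather than place the board
    return min(candidates, key=lambda s: s.width_m * s.depth_m)


room = [
    Surface("coffee table", 1.2, 0.8, horizontal=True),
    Surface("living room wall", 3.0, 2.4, horizontal=False),
]
print(pick_play_surface(room, 0.5, 0.5).name)                         # coffee table
print(pick_play_surface(room, 2.0, 1.5, need_horizontal=False).name)  # living room wall
```

The `need_horizontal` flag is the difference between physical pieces that need a table and a projected game that is happy on the wall; the `None` case is where the design, not the tech, has to take over.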

Just bringing it back a little to another development this week: the latest in the Battlefield gaming franchise, Battlefield Hardline, went live. This, in case you don’t do games, is a 3D first-person shooter. Previous games have been military; this one is cops and robbers in a modern Miami Vice TV style. One of the features of Battlefield is massive online combat. It has large spaces, and it makes you feel like a small speck on the map. Other shooters, like Call of Duty, are more close-in. The large expanse means Battlefield can focus on things like vehicles, flying helicopters and driving cars. Not just you, though: you can be a pilot and deliver your colleagues to the drop zone whilst your gunner gives cover.

This new game has a great online multiplayer mode called Hotwire that taps into vehicles really well. Usually game modes are capture the flag or holding a specific fixed point to win the game. In Hotwire you grab a car, lorry etc. and try to keep it safe. It means you have to do some mad game driving, weaving and dodging. It also means that your compatriots get to hang out of the windows of the car, trying to shoot back at the bad guys. It is very funny and entertaining.

What also struck me was the 1 player game, called “episodes”. This deliberately sticks with a TV cop show format as you play through the levels. After a level has finished, the “how you did” page looks like Netflix, with a “next episode starts in 20 seconds” message in the bottom right. If you quit a level before heading to the main menu, it does a “next time in Battlefield Hardline” mini montage of the next episode. As the first cut scenes played I got a Miami Vice vibe, which the main character then played on by referencing the show. It was great timing, an in-joke, but one for those of us of a certain age when Miami Vice was the show to watch. Fantastic stuff.

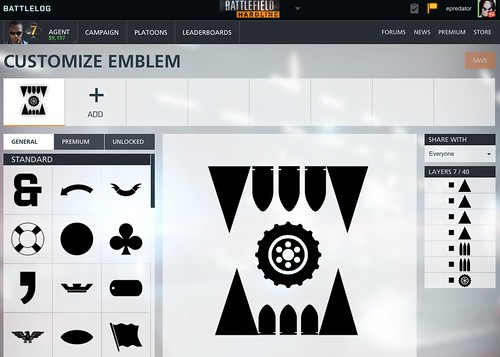

I really like its style. It also has a logo builder on the website so in keeping with what I always do I built a version of the Feeding Edge logo in a Hardline style.

I may not be great at the game, as I bounce around looking for new experiences in games, but I do like a good bit of customisation to explore.

MergeVR – a bit of HoloLens but now

If you are getting excited and interested, or just puzzling over what is going on with the Microsoft announcement about HoloLens, and can’t wait the months/years before it comes to market, then there are some other options, very real, very now.

Just before Christmas I was very kindly sent a prototype of a new headset unit that uses an existing smartphone as its screen. It is called MergeVR. The first one like this we saw was Google Cardboard, Google’s almost satirical take on the Oculus Rift: a fold-up box that lets you strap your Android phone to your face.

MergeVR is made of very soft, comfortable, spongy material. Inside are two spherical lenses that can be slid in and out laterally to adjust for the divergence of your eyes and get a comfortable feel.

Rather like the AntVR I wrote about last time, this uses the principle of one screen split into two views. The MergeVR uses your smartphone as the screen, and it slides comfortably into the spongy material at the front.
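The one-screen, two-views principle is simple arithmetic on the phone's resolution. The Python sketch below is an illustrative assumption, not code from the MergeVR or AntVR: it splits a landscape screen into two side-by-side viewports, and which eye ends up looking at which half is down to the headset's lenses, not this function.

```python
def split_screen_viewports(screen_w: int, screen_h: int):
    """Split a landscape phone screen into two side-by-side viewports.

    Returns two (x, y, width, height) pixel rectangles: the left half and the
    right half of the physical screen.
    """
    half = screen_w // 2
    left_half = (0, 0, half, screen_h)
    right_half = (half, 0, screen_w - half, screen_h)  # takes the spare pixel on odd widths
    return left_half, right_half


def eye_aspect_ratio(viewport) -> float:
    """Width over height for one eye's view, about half the full screen's ratio.

    Each eye's virtual camera should render with this ratio, otherwise the
    image looks stretched.
    """
    _, _, w, h = viewport
    return w / h


left, right = split_screen_viewports(1334, 750)  # an iPhone 6 screen held landscape
print(left, right)  # (0, 0, 667, 750) (667, 0, 667, 750)
```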

Using an existing device has its obvious advantages. The smartphones already have direction sensors in them, and screens designed to be looked at close up.

MergeVR is not just about the 3D experience of virtual reality (one where the entire view is computer generated). As its name, Merge, suggests, it is also about augmented reality. In this case it is augmented reality that takes your direct view of the world and adds data and visuals to it. This is known as a magic lens: you look through the magic lens and see things you would not normally be able to see. That is as opposed to a magic mirror, where you look at a fixed TV screen to see the effects of a camera view merged with the real world.

The iPhone (in my case) camera has a slot to see through in the MergeVR. This makes it very different from some of the other Phone On Face (POF, a made-up acronym) devices. The extra free device I got with the AntVR, the TAW, is one of these non-pass-through POFs. It is a holder and lenses, with a folding mechanism that adjusts to hold the phone in place. With no pass-through it is just for watching 3D content.

AntVR TAW

Okay, so the MergeVR lets you use the camera, see the world, and watch the screen close up without holding anything. The lenses make your left eye look at the right half and your right eye at the left half. One of the demo applications is instantly effective and has a wow factor. Using a marker-based approach, a dinosaur is rendered in 3D on the marker. Marker-based AR is not new, and neither is iPhone AR, but the stereoscopic hands-free approach, where the rest of the world is effectively blinkered for you, adds an extra level of confusion for the brain. Normally if you hold a phone up to a picture marker, the code spots the marker, works out its orientation and relative position in the view, then renders the 3D model on top. So if you or the marker moves, the model moves too. When holding the iPhone up you can of course still see around it, rather like holding up a magnifying glass (magic lens, remember). When you POF, though, your only view of the actual world is the phone’s camera view. So when you see something added and you move your body around, it is there in your view. It is only the slight lag, and the fact that the screen is clearly not the same resolution or lighting as the real world, that stops you believing it totally.
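The "spot the marker, work out its pose, render on top" step ends in a standard pinhole projection. The Python sketch below assumes a tracker has already estimated the marker's rotation and translation relative to the camera each frame (that detection step is not shown), and uses an OpenCV-style camera frame with x right, y down and z out of the lens. The focal length, image centre and "dino nose" point are made-up values for illustration, not anything from the DinoAR demo.

```python
def project_on_marker(points, rotation, translation, focal_px, cx, cy):
    """Project 3D model points defined on a marker into screen pixels.

    `rotation` (3x3, as rows) and `translation` (x, y, z in metres) are the
    marker's pose relative to the camera, which a marker tracker would
    re-estimate every frame.
    """
    pixels = []
    for p in points:
        # Marker space -> camera space: X = R p + t
        x, y, z = (
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        if z <= 0:
            pixels.append(None)  # behind the camera, nothing to draw
            continue
        # Pinhole projection onto the image plane
        pixels.append((focal_px * x / z + cx, focal_px * y / z + cy))
    return pixels


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
dino_nose = [(0.1, 0.0, 0.0)]  # hypothetical model point 10 cm along the marker

# The same model point under two marker poses: move the marker and the model follows.
near = project_on_marker(dino_nose, IDENTITY, (0.0, 0.0, 1.0), 500.0, 320.0, 240.0)
far = project_on_marker(dino_nose, IDENTITY, (0.0, 0.0, 2.0), 500.0, 320.0, 240.0)
print(near, far)
```

In a stereo headset the same pose is simply projected twice, once per eye, which is part of why the hands-free version feels so much more "there".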

The recently previewed Microsoft HoloLens and the yet to be seen Google-funded Magic Leap are a next step, removing the screen. They let you see the real world, albeit through some panes of glass, and then use projection tricks near to the eye, probably very similar to Pepper’s ghost, to adjust what you see and how it is shaded, coloured etc., based on a deep sensing of the room and environment. It is markerless, room-aware blended reality, using the physical and the digital.

Back to the MergeVR. It also comes with a Bluetooth controller for the phone, a small handheld device that lets you talk to the phone. Obviously, with the phone in POF mode you can’t touch the screen to press any buttons 🙂 Many AR apps and examples like the DinoAR simply use your head movements and the sensors in the phone to determine what is going on. Other things, though, will need some form of user input. The phone’s camera can see hands, but without a Leap Motion controller or a Kinect to sense the body, a simpler mechanism like this controller can be employed.

However, this is where MergeVR gets much more exciting and useful for any of us techies and metaverse people. The labs are not just thinking about the POF container but the content too. A Unity3D package is being worked on. This provides camera prefabs (rather like the Oculus Rift one) that split the Unity3D view into a stereo camera pair at runtime, with the right shape, size, perspective etc. for the MergeVR view. It provides extra access to the Bluetooth controller inputs too.
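Conceptually, part of what a stereo camera prefab has to do is turn one virtual camera into two, a small distance apart, matching the spacing of the viewer's eyes (the same spacing the sliding lenses adjust for physically). The Python sketch below shows only that offset step. It is not the MergeVR package's code, whose internals are not described here, and the function name and 0.063 m default interpupillary distance are assumptions.

```python
import math


def stereo_eye_positions(head_pos, right_dir, ipd_m=0.063):
    """Derive left and right eye camera positions from a single head camera.

    Each eye camera sits half the interpupillary distance (IPD) either side
    of the head position, along the camera's 'right' vector; both keep the
    head camera's orientation. The default IPD is an assumed value close to
    a commonly quoted adult average.
    """
    length = math.sqrt(sum(c * c for c in right_dir))
    unit_right = [c / length for c in right_dir]  # normalise so the spacing is exact
    half = ipd_m / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head_pos, unit_right))
    right_eye = tuple(h + half * r for h, r in zip(head_pos, unit_right))
    return left_eye, right_eye


left_eye, right_eye = stereo_eye_positions((0.0, 1.6, 0.0), (1.0, 0.0, 0.0))
print(left_eye, right_eye)
```

Each of the two cameras then renders into its own half of the phone screen with that half's aspect ratio.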

This means you can quickly build MergeVR 3D environments and deploy to the iPhone (or Droid). Combine this with some of the AR toolkits and you can make lots of very interesting applications, or simply add 3D modes to existing ones you have. With the new Unity3D 4.6 user interface system it will be even easier to build heads-up displays.

So within about 2 minutes of starting Unity I had a 3D view up on the iPhone in the MergeVR, using Unity Remote. The only problem I had was using the USB cable for quick Unity Remote debugging, as the left-hand access hole was a little too high. There is a side access on the right, but the camera needs to be facing that way. Of course, being nice soft material, I can just make my own hole in it for now. It is a prototype after all.

It’s very impressive, very accessible and very now (which is important to us early adopters).

Lets get blending!

(Note the phone is not in the headset as I needed it to take the selfie 🙂)

Use Case 2 – real world data integration – CKD

As I am looking at a series of boiled down use cases of using virtual world and gaming technology I thought I should return to the exploration of body instrumentation and the potential for feedback in learning a martial art such as Choi Kwang Do.

I have of course written about this potential before, but I have built a few little extra things into the example using a new Windows machine with a decent amount of power (HP Envy 17″) and the Kinect for Windows sensor with the Kinect SDK and Unity 3D package.

The package comes with a set of tools that let you generate a block man based on the joint positions. The controller piece of code also has some options for turning on the user map and skeleton lines.

In this example I am also using Unity Pro, which allows me to position more than one camera and have each of those render to a texture on another surface.

You will see the main block man appear centrally “in world”. The three screens above him are showing a side view of the same block man, a rear view and interestingly a top down view.
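Under the hood, the side, rear and top screens are orthographic views of the same joint data: each one simply drops one axis. In the Unity scene that is done with extra cameras rendering to textures; the Python sketch below shows the same idea on raw joint positions. The joint names and axis convention are assumptions for illustration, not the Kinect SDK's own types.

```python
# Each view keeps two of the three axes, assuming joints are given as
# (x, y, z) with x to the side, y up and z as depth away from the sensor.
VIEWS = {
    "front": lambda x, y, z: (x, y),
    "rear": lambda x, y, z: (-x, y),  # mirror left/right when looking from behind
    "side": lambda x, y, z: (z, y),
    "top": lambda x, y, z: (x, z),    # looking down: depth becomes the vertical axis
}


def orthographic_views(joints):
    """Flatten {joint name: (x, y, z)} into 2D coordinates for every view."""
    return {
        view: {name: project(*pos) for name, pos in joints.items()}
        for view, project in VIEWS.items()
    }


skeleton = {"head": (0.1, 1.7, 2.0), "hand_right": (0.5, 1.2, 1.6)}  # made-up sample
views = orthographic_views(skeleton)
print(views["top"]["hand_right"])  # (0.5, 1.6)
```

The top view is the interesting one for a martial art: it shows how far a strike travels forward relative to the body, something hard to see from the front.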

In the bottom right is the “me” with lines drawn on. The Kinect does the job of cutting out the background. All this was recorded live, running in Unity3D.

The registration of the block man and the joints isn’t quite accurate enough at the moment for precise Choi movements, but this is the old Kinect; the new Kinect 2.0 will no doubt be much better, as well as being able to register your heart rate.

The cut out “me” is a useful feature, but it can only be projected onto the flat camera surface; it is not something that can be looked at from left/right etc. The block man, though, is made of actual 3D objects in space. The cubes are coloured so that you can see joint rotation.

I think I will reduce the size of the joints and try and draw objects between them to give him a similar definition to the cutout “me”.
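Drawing objects between the joints comes down to a small calculation per bone: place a stretched box or cylinder at the midpoint of two joints, scale it to their distance and point it along the line between them. The Python sketch below computes those three values. The joint names in `BONES` are hypothetical, and turning the direction into an engine rotation is left to whatever engine does the drawing.

```python
import math

# Hypothetical joint pairs to join with a limb segment
BONES = [
    ("shoulder_right", "elbow_right"),
    ("elbow_right", "wrist_right"),
]


def bone_transform(a, b):
    """Midpoint, length and unit direction of the segment from joint a to joint b."""
    delta = [bj - aj for aj, bj in zip(a, b)]
    length = math.sqrt(sum(d * d for d in delta))
    midpoint = tuple((aj + bj) / 2.0 for aj, bj in zip(a, b))
    direction = tuple(d / length for d in delta) if length > 0 else (0.0, 0.0, 0.0)
    return midpoint, length, direction


def limb_segments(joints):
    """Build a transform for every bone whose two joints are currently tracked."""
    return {
        (start, end): bone_transform(joints[start], joints[end])
        for start, end in BONES
        if start in joints and end in joints
    }


frame = {"shoulder_right": (0.0, 1.0, 0.0), "elbow_right": (0.0, 1.3, 0.4)}
print(limb_segments(frame))  # only the shoulder-to-elbow segment is tracked here
```

Skipping bones with a missing joint matters with a sensor like the Kinect, where a hand or elbow can drop out of tracking for a few frames.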

The point here, though, is that game technology and virtual world technology are able to give a different perspective on a real world interaction. Seeing techniques from above may prove useful, and is not something that can easily be observed in class. If that applies to Choi Kwang Do, then it applies to all other forms of real world data. Seeing from another angle, exploring and rendering in different ways, can yield insights.

It is also data that can be captured and replayed, transmitted and experienced at a distance by others. Capture, translate, enhance and share. It is something to think about. What different perspectives could you gain of data you have access to?
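Capture and replay can start very simply: store each frame of joint positions with a timestamp, serialise the lot so it can be saved or sent, and look frames up again by time on playback. The Python sketch below uses JSON purely as an example format; the frame layout and function names are assumptions, not the Kinect SDK's own recording format.

```python
import bisect
import json


def record_frame(log, t, joints):
    """Append one timestamped skeleton frame (t in seconds, in time order) to the log."""
    log.append({"t": t, "joints": {name: list(pos) for name, pos in joints.items()}})


def frame_at(frames, t):
    """Return the joints of the latest frame captured at or before time t."""
    times = [f["t"] for f in frames]
    i = bisect.bisect_right(times, t) - 1
    if i < 0:
        return None  # playback time is before the first captured frame
    return {name: tuple(pos) for name, pos in frames[i]["joints"].items()}


capture = []
record_frame(capture, 0.0, {"head": (0.0, 1.7, 2.0)})
record_frame(capture, 0.033, {"head": (0.0, 1.68, 2.0)})

payload = json.dumps(capture)    # what would be stored or sent to someone else
received = json.loads(payload)   # ...and decoded at the other end for replay
print(frame_at(received, 0.02))  # {'head': (0.0, 1.7, 2.0)}
```

Once the data is in this shape, the "translate and enhance" part is just running each replayed frame through whatever views or renderings you like.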