It’s been a while since I posted anything, for lots of reasons; that’s an offline conversation. However, this weekend something appeared on my feeds that was just too exciting not to run a quick test with and then share, which I have already done on Twitter, LinkedIn, Mastodon and Facebook, plus a bit of Instagram. So, many channels to look at!

Back in August 2022 I dived into using Midjourney’s then-new GenAI for images from text. It was a magical moment in tech for me, of which there have been few over the 50+ years of being a tech geek (34 of those professionally). The constant updates to the GenAI and the potential for creating things digitally, in 2D, movies and eventually 3D metaverse content, have been exciting and interesting, but there were a few gaps in the initial creation and control, one of which just got filled.

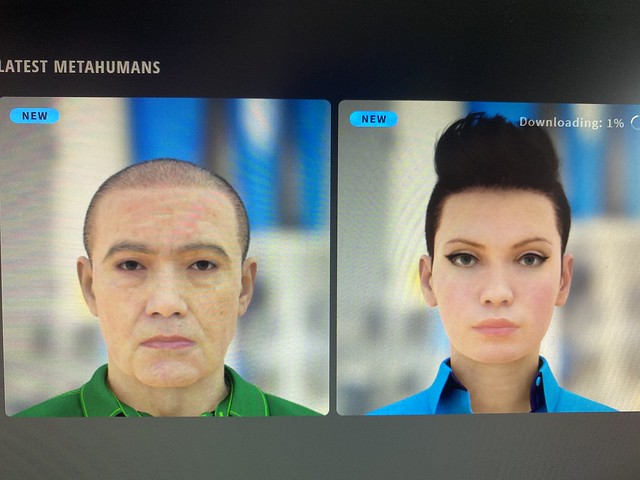

Midjourney released its consistent character reference approach, which points a text prompt at a reference image, in particular of a person, and then uses that person as the base for whatever the prompt generates. Normally you ask it to generate an image and try to describe the person in text or by naming a well-known person, but accurately describing someone starts to make for very long prompts. Any gamers who have used avatar builders with millions of possible combinations will appreciate that a simple sentence is not going to get these GenAIs to produce the same person twice in a row. This matters if you are trying to tell a narrative, such as, oh I don’t know… a sci-fi novel like Reconfigure? I had previously written about the fun of trying to generate a version of Roisin from the book in one scene and passing that to Runway.ml, where she came alive. That was just one scene, and trying any others would not have given me the same representation of Roisin in situ.

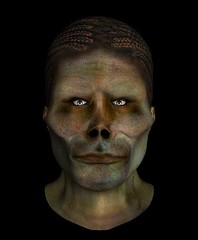

The image above was the one I pushed to Runway.ml to see what would happen, and I was very surprised how well it all worked. However, on Friday I pointed the --cref parameter in Midjourney at this image and tried some very basic prompts related to the book. This is what I got.

As you can see, it is very close to being the same person, with the same clothing in different styles, and these were all very short prompts. With more care, attention and curation these could be made even closer to one another. Obviously a bit of uncanny valley may kick in, but as a way to get a storyboard, sources for a GenAI movie or a graphic novel, this is a great innovation. It assists my own creative process in ways that would otherwise be out of my reach without some huge budget from a Netflix series or a larger publisher. When I wrote the books I saw the scenes and pictures vividly in my head. These are not exactly the same, but they will be in the spirit of those.