With my metaverse evangelist hat on I have for many years, in presentations and conversations, tried to help people understand the value of using game style technology in a virtual environment. The reasons have not changed, they have grown, but a basic use case is being able to experience something, to know where something is or how to get to it, before you actually have to. The following is not to show off any 3d modelling expertise; I am a programmer who can use most of the tool sets. I put this “place” together mainly to figure out Blender, to help the predlets build in things other than Minecraft. With a new Windows laptop complementing the MBP, I thought I would document this use case by example.

Part 1 – Verbal Directions

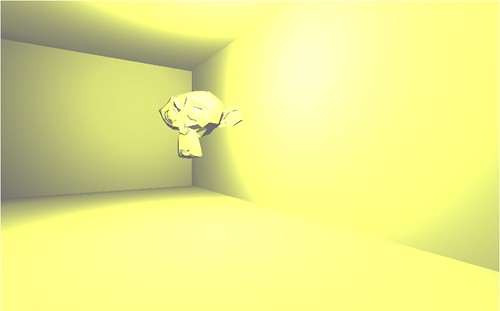

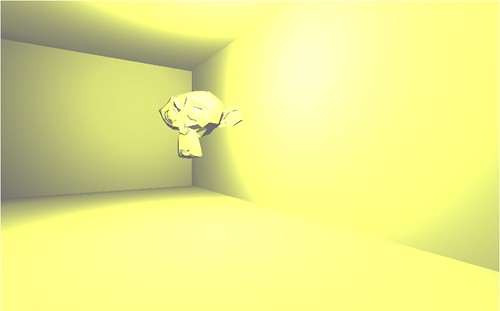

Imagine you have to find something, in this case a statue of a monkey’s head. It is in a nice apartment. The lounge area has a couple of sofas leading to a work of art in the next room. Take a right from there and a large number of columns lead to an ante room containing the artefact.

What I have done there is describe a path to something. It is a reasonable description, and it is quite a simple navigation task.

Now let's move from words, a verbal description of placement, to a map view. This is the common one we have had for years: top down.

Part 2 – The Map

On a typical map like this one, you start from the bottom left. It is pretty obvious where to go: two rooms up, turn right, keep going and you are there. This augments the verbal description, or can work just on its own. It is simple and quite effective, but it filters a lot of the world out in simplification, mainly because maps are easy to draw. It requires a cognitive leap to translate it to the actual place.

Part 3 – Photos

You may have often seen pictures of places to give you a feel for them. They work too. People can relate to the visuals, but it is a case of you get what you are given.

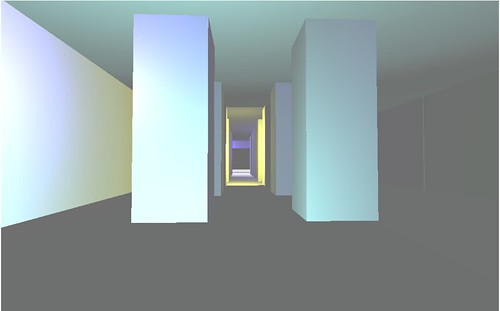

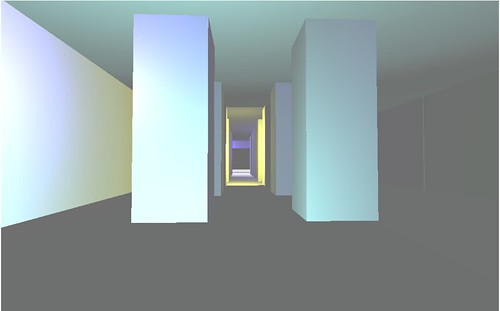

The entrance

The lounge

The columned corridor

The goal.

Again, in a short example this allows us to get quite a lot of place information into the description. “A picture paints a thousand words”. It is still passive.

A video of a walkthrough would of course be an extra step here, that is, more pictures one after the other. Again though, it is directed. You have no choice in how to learn, how to take in the place.

Part 4 – The virtual

Models can now very easily be put into tools like Unity3d and published to the web to be walked around. If you click here, you should get a Unity3d page and, after a quick download (assuming you have the plugin 😉 if not, get it!), you will be placed at the entrance to the model, which is really a 3d sketch, not a full-on, high-end, photorealistic rendering. You may need to click to give it focus before walking around. It is not a shared networked place, it is not really a metaverse, but it has become easier than ever to network such models and places if sharing is an important part of the use case (such as in the hospital incident simulator I have been working on).

The mouse will look around, and ‘w’ will walk you the way you are facing (‘s’ is backwards; ‘a’ and ‘d’ are side to side). Take a stroll in and out, down to the monkey and back.
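For the programmers reading, the geometry behind those controls can be sketched in a few lines. This is not Unity3d code and not how the demo is actually scripted; it is a minimal Python illustration under stated assumptions: the names `KEY_DIRECTIONS` and `step` are hypothetical, the ground plane is taken as x/z with the viewer's heading measured in degrees clockwise from +z when seen from above, and each key press moves one unit.

```python
import math

# Hypothetical key bindings mirroring the controls described above.
# Each key maps to (forward_amount, right_amount).
KEY_DIRECTIONS = {
    "w": (1.0, 0.0),    # walk the way you are facing
    "s": (-1.0, 0.0),   # walk backwards
    "a": (0.0, -1.0),   # sidestep left
    "d": (0.0, 1.0),    # sidestep right
}


def step(position, yaw_degrees, key, distance=1.0):
    """Return the new (x, z) ground position after one key press.

    yaw_degrees is the heading, assumed to be measured clockwise from +z
    when viewed from above (0 faces +z, 90 faces +x).
    """
    forward_amount, right_amount = KEY_DIRECTIONS[key]
    yaw = math.radians(yaw_degrees)

    # Unit vectors for "forward" and "right" relative to the current heading.
    forward = (math.sin(yaw), math.cos(yaw))
    right = (math.cos(yaw), -math.sin(yaw))

    x, z = position
    x += distance * (forward_amount * forward[0] + right_amount * right[0])
    z += distance * (forward_amount * forward[1] + right_amount * right[1])
    return (x, z)


if __name__ == "__main__":
    # Start at the origin facing +z, walk forward twice, turn right, walk on.
    pos = (0.0, 0.0)
    pos = step(pos, 0.0, "w")
    pos = step(pos, 0.0, "w")
    pos = step(pos, 90.0, "w")
    print(pos)
```

Moving the mouse would simply change `yaw_degrees` between steps, which is why the same ‘w’ key takes you somewhere different depending on where you are looking.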

I suggest that you now have a much better sense of the place, the size, the space, the odd lighting. The columns are close together, so you may have bumped into a few things. You may have lingered on the work of art. All of these tiny differences are putting this place into your memory. Of course finding this monkey is not the most important task you will have today, but apply the principle to anything you have to remember, conceptual or physical. Choosing your way through such a model or concept is simple but much more effective, isn’t it? You will remember it longer and maybe discover something else on the way. It is not directed by anyone: your speed, your choice. This allows self reflection in the learning process, which reinforces understanding of the place.

Now imagine this model made properly, with nice textures and lighting, as a photorealistic place, and pop on a VR headset like the Oculus Rift, which in this case is very simple with Unity3d. Your sense of being there is even further enhanced, and it only takes a few minutes.

It is an obvious technology isn’t it? A virtual place to rehearse and explore.

Of course you may have spotted that this virtual place, built in Unity3d to walk around, also provided the output for the map and for the photo navigation. Once you have a virtual place you can still do things the old way if that works for you. It's a virtual virtuous circle!