I just got back from my trip to Washington to the very excellent 3DTLC conference. This was certainly one of the most productive, interesting and stimulating conferences we have had over the last 3 years. Primarily it was because it was not a conference to convince people of the value of virtual worlds, but to look at ways to move everything forward from that point. The focus on Training, Learning and Education took some of the more traditional focus of “hey how do we turn a fast buck from this” (a.k.a. ROI) out of the discussions and hit us all with a mix of case studies of things done both in enterprise and academia, along with some future thinking and discussion.

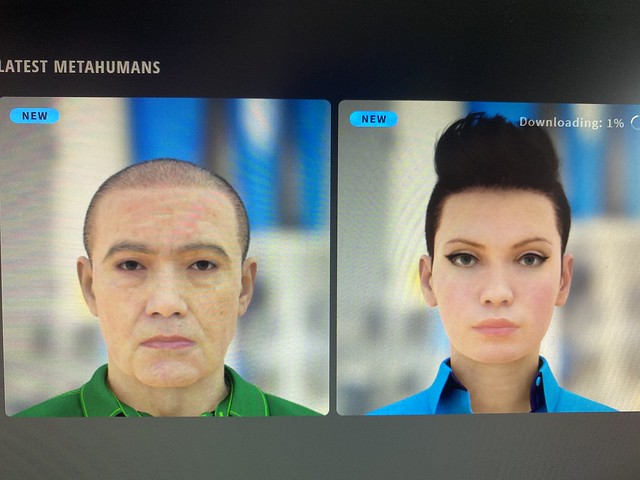

The entire conference was also heavily powered by the Twitter back channel; #3dtlc was getting a lot of traffic. This should be no surprise from virtual world experts used to attending virtual events and maintaining an engaged buzz through ongoing discussion. (Apologies to all those not embroiled in this world who happened to experience this all via any Facebook flood feeds). So whilst we had no avatars, textured or untextured (as above), we certainly all had a virtual presence. The conference was not being streamed to the web as audio or video, but as a considerable set of 140 character conversations, crowdsourcing and minuting the proceedings.

The location was Gallaudet University, a 99 acre site about 2 miles from Capitol Hill. A very grand campus, though contrasted outside by a slightly different area that many taxis seemed unwilling to come to.

However that did not detract from the content.

Tony O’Driscoll had done a great job of getting the agenda together and inviting people. It was Tony’s request that had persuaded me to spend some of Feeding Edge’s travel budget to get over to Washington.

Analyze, Leverage, Teach, Learn, Design, Explore, Improve, Connect, Extend, Convince were the topic subjects for each panel or interview.

The first panel, Analyze, kicked off by Erica Driver from ThinkBalm, had a set of positioning statements about where we are as an industry. The general consensus was that we were still at the early stages. Steve Prentice of Gartner re-iterated the important fact that this is all about people, unlike many other technology adoption curves, and that there was more than one adoption curve interacting here. Several other themes emerged that may or may not be considered a distraction.

1. What do we call this all – Everyone has a name for this: metaverse, immersive, 3.0 etc. Though as Erica pointed out, we started with e-commerce, then moved to e-business, and now no one really talks about it as a thing anymore; it is just part of general business. The same will happen to the threads of this immersive revolution.

2. The G word. There was a polarization into whether or not anything or anyone should ever use the word GAME. This again highlighted the point above on clarification of what “this” is. In some cases using games and games technology makes sense, in others it is not going to get anywhere in the adoption curve for some of the purse string holders. There is no one answer. Though I must say that when I started in the IT industry 20 years ago it was frowned upon, even as a software engineer, to have the word Game on your CV. Now it is very prominent on mine and part and parcel of any discussions and work I do. That does not mean every solution to every problem has to be a game based one though.

The Leverage section was an interview with Joe Little from BP. Joe has managed to carve out a good official and respected position with BP, providing a whole set of examples of how they have used both virtual world and visualization technology in a host of applications. An interesting one was around building a group of students up as a team before going on a physically demanding exploration to the Antarctic. The virtual world elements let people get to know one another first, something a few of us have done so we know it’s not an isolated case. Joe’s examples were not all one platform or one example but a spread, and for any doubters in the audience (though they were an experienced bunch) it provided a safe grounding.

Teach saw a fantastic set of panelists who had between them enormous experience as educators and virtual world experts in the field, doing, trying, observing, adjusting and reporting what they do. Pedagogy started to be mentioned a lot. There was a challenge to the way education has up to now been restrictive in its approach, and a view that simply replicating boring broadcast lectures in a virtual world will not work any better. Students asleep at a real desk will still be asleep at a virtual desk. The famous Sarah Robbins a.k.a. Intellagirl told a fantastic story around letting students get to safely understand social exclusion and discrimination through Second Life. In her example she explained how she set her students a task to go to a Second Life night club, but they all had to go as outsized round spherical avatars (Kool-aid men). Their individual experiences did not really include much societal diversity. They re-appeared from the club 5 minutes after going. Intellagirl was not sure why they were so quick, but it turned out that they all turned up, and were very quickly and politely ejected for not fitting the social norm of the venue. Each of the students was able to explain their feelings of exclusion and appreciation of the potential problems that attitudes can have on people. As she pointed out, it would be very hard to compare that educational experience with any other way of doing it quite so safely. Hence the ongoing conversational theme: “justify why a virtual world” is often used as a weapon to stop change, usually by trying to make a comparison to things we can already do. This was an example of something we can’t usually do, but that had clear benefit in discovering this way of working.

Design as a panel showed some interesting ways of getting things done in virtual worlds. I missed some of the panel but did see Boris Kizelshteyn of Popcha! get an admiring round of applause for diving into a live Second Life demo of their presentation toolset and also bootlegging the conference by using video streaming from his webcam back into Second Life. That rounded the day off nicely from the main conference.

The evening for me was mostly spent at a ThinkBalm event with lots of the drinks bought by Erica and Sam Driver. The conversation was varied and very interesting. We talked lots about Augmenting Reality in various forms.

Day 2 started with the panel I was speaking on, Explore. With America clearly in a mood for change and for the future, the main focus of the session was explaining the Virtual Worlds Roadmap. (An excuse to use a photo of the Capitol building.) Sibley had managed to get to the venue after some major knee surgery and was sporting not only his trademark hat, but a very impressive leg brace. Koreen was our MC, and set us off on our way.

Sibley explained the premise of the roadmap, that many of the things we are doing today with virtual worlds, we could have done a long time ago. (For me this resonates with the comments from the first session where there are multiple adoption curves and people are a major factor in all this). The roadmap, as Sibley pointed out, is there to break things into industry verticals, to evaluate what we can and can’t do to meet those needs and then work out how to get the gaps plugged.

Next up on intros was me. I first of all made it clear that I was still epredator, but I was not still with IBM, just in case anyone had not heard the news. I made three points about the future.

1. I explained that we were washing away cave paintings, that up until now everything we have done has been broadcast media, that telling people where the hunting grounds were, where the dangers were etc. was replicated in how we do books, TV, film etc. It’s also how we do education in a classroom (linking back to the Teach panel). It was a 30 second version of the keynote from ACE in Ireland.

2. There is no one platform to solve all the problems, nor is there likely to be. It is not a case of sticking to or picking one deployment platform and then standing back. I mentioned the difference in need in something like The Coaches Centre, where doing a classroom education of some standard texts requires very different technology to the fluid dynamics to coach swimming, or the physics in tennis, or the physiological models to teach doctors. It’s not one simulation, not one virtual world, not one platform, as much as it’s not one industry vertical.

3. Assuming the above is the case, things will need to interoperate in ways that suit us as people. We need ways to describe and combine education and training plans that span all types of media and place. This is not simply a case of can x import model from y. It is more about the meta description of how we combine the appropriate levels of simulation, interaction and content from the right places. I added that we need to think how we not only augment the physical world with the virtual but how we augment the virtual worlds with other elements of the virtual.

John then talked about his vision of high end simulations, where and why Intel are interested and also as a co-founder with Sibley, reiterated the depth of the work in the roadmap and that it was for everyone to join in and sort out.

Eiliff then also introduced the deeper elements needed for people to be able to learn by exploration, once again re-iterating that the elements of learning don’t just come from replicating the classroom and that we can take this further than ever now.

The rest of the panel time was in various discussions that built on and around these points. I put it to the panel and audience that I think that the platform and tech discussions will get blown away when people from the games industry (with their budgets and huge infrastructures) figure out that benefits in business bring a whole new dimension and scale to this. Sibley pointed out they probably would not as they were comfortable where they were. I agreed, but suggested that a drop in gaming revenue, or some industry pressures might make them look elsewhere, or the proliferation of easy to get middleware and game creation tools such as Unity3d might make lots of game related startups adjust their focus.

At some point I found myself singing the praises of IBM’s Sametime 3D, and in particular how, in the adoption of some of this way of working, just putting it into the normal workflow of everyday life makes the challenges and barriers melt away. The full-on product development cycle of merging enterprise instant messaging so that anyone can, as needed, spark up an OpenSim room to say “let me show you”, action some things and then close and move on needs someone like IBM to deliver it.

Next up was Improve, a panel led by Erica Driver. Once again this was real projects getting to be explained. From J&J recruiting, to SAP’s approach to total integration with physical assets (Shaspa got a good few mentions here), to Robin Williams from Sun explaining the multitude of good work in both SL and Wonderland (that we are all praying does not get killed by the Oracle monolith) and finally ending with Kevyn Renner from Chevron, who won the award for the sparkiest, most dynamic delivery of the whole show. Chevron has been using all sorts of 3D for years, but is increasingly using collaborative immersive 3D for real world training and safety issues. Twitter was particularly alight with surprise as he got off stage and wandered around with a glass in his hand to demonstrate a “thing” whilst having a pop at the Australians (being from New Zealand). It was a great pitch and the panel also dealt with lots of questions. Robin was particularly on form, with very similar responses to the typical questions that saw me nodding profusely.

Connect was the next panel up. Sam Driver was leading, and first up was Robert Bloomfield of Metanomics fame. He explained much of how he fell into being the chat show star in Second Life, how the show grew and how now with Treet.tv it is growing further and being injected into more environments in more ways. I liked this point a lot, as it fitted with my panel’s conversations around not just having one environment.

Next up was Joanne Martin, President of the IBM Academy of Technology. Joanne explained the AoT and the fact she is in one of the few elected positions in the company. The Academy is IBM’s technically focused leading thinkers. Joanne admitted that when she started on this case study she knew nothing about virtual worlds (I must have missed one in my conversions 🙂 ) but that when the great and good from IBM had their massive yearly conference cancelled almost at the last minute due to money concerns they had to come up with something quick. The AoT meeting was the first foray with the original behind the firewall solution with Linden Lab and Second Life. Joanne pointed out they used lots of other online connections, webcasts, wikis etc., but that the virtual world had a massive benefit of serendipitous conversations and mingling with fellow attendees. This whole thing is the case study written up by IBM and LL recently. I had always known the AoT would be key in adoption.

The recipe

1. Take a bunch of talented, but sometimes stuck in their ways old hands with a hint of the mad inventor in them.

2. Take away a long cherished physical gathering due to budget.

3. Put some new ways of working in front of them all.

4. If it works completely, the key influencers will say, why did we not do this before?

5. If it works a little bit, the key influencers will say, why could we not do this better?

6. If it breaks, they will say heck we are IBM surely we can make this work, get out of the way let me try.

End result, adoption, products, industry.

Of course for them even to get to that point I know full well it needed demand generation and evangelizing in the lead up, otherwise things like a behind the firewall virtual world would not have even got a look in. To think some people told me there was no business value in virtual worlds, and now the president of the AoT was on stage saying precisely the opposite.

Claire from Cisco told a similar tale, with slightly different technologies used sometimes (they do own WebEx). Again it was interesting that my opposite (though slightly higher) number Christian Renaud had shepherded Cisco to a similar point.

Jaque divided the audience with a delivery about a volunteer virtual police and security force, initially in Activeworlds but spanning into other places too. The questions raised, of who watches the watchmen and whether it is OK to need a police force or whether we are all self governing, certainly caused a ripple on Twitter. All the things Jaque said I think I have experienced, and in some cases had to do: talk down a potential griefer etc. Yet at the same time, a self appointed set of police seemed odd. A subject that will certainly keep on getting attention.

The penultimate panel was Extend. Tony was the moderator. There was quite a mix on the panel from the extremely touching and emotive work from the US Holocaust Museum, to the outreach to industry analysts on products from Sun, to KPMG’s recruiting fairs initially in Second Life then more lately in Unisfair and finally Tim from Pearson talking about his customer work with ECS as a partner for The Coaches Centre.

The Coaches Centre is something that I am very close to, especially with my background with sports and virtual worlds with Wimbledon, so it was interesting hearing a partner/provider talk about it when ideally it should have been TCC themselves. The basics of TCC is to improve the quality and experience of sports coaches at all levels using new and immersive technology. Currently the pilot with Pearson/ECS as content providers is running in Canada, but it is just the first step in something much grander that I can see will blossom from this and, as I mentioned in my panel, can extend across many platforms and could aggregate and augment all sorts of elements of social and simulation technology.

The final panel was Convince. A body of fellow metaverse evangelists sharing their insights into how to get buy in where needed. Karen Keeter from IBM spoke and as a former colleague it was very familiar 🙂 Karen also showed Sametime 3D. John from Intel, Emily from Cisco and Brian from Etape also spoke.

John Hengeveld was also on my panel. His main tip was that each project has an impact, so make sure you capture it (positive or negative).

Emily was indicating her sales force just would not go for anything that was not bullet proof and obvious to use.

Brian rounded up the discussion by saying how he came at this from customer need, not out of a passion for the ideas. This was a great matter of fact pitch. They needed something, we tried virtual worlds, and wow we got so much more than we bargained for.

The conference then wrapped up, with a big round of applause for the organization and content.

A few of us twitterers then retired to an Irish Pub for Guinness and chat, but that’s another post and this was way too long.