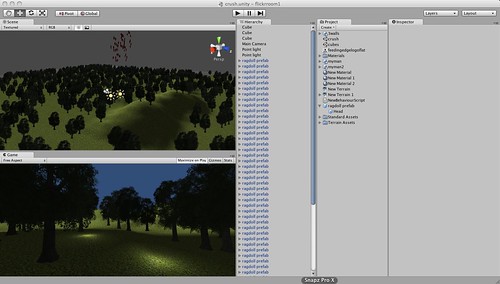

I have been doing a lot of Unity3d with data flying around, but I am still amazed at just how easy it is to get things to work.

For the non-techies out there this should still make sense, as it's all drag and drop and a little bit of text.

So you need a web-based walk around some of your Flickr pictures?

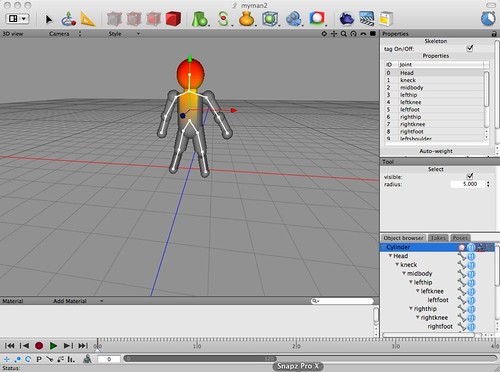

Unity3d makes it very easy to say “create scene”, “add terrain”, “add light”, “add first person walker” (the thing that lets you move around) and finally to say “create cube”.

It is as simple as any other package to create things, just like adding a graph in a spreadsheet, or rezzing cubes in Second Life.

Once the scene is created it is very easy to add a behaviour to an object. In this case the script below is typed in, then dragged and dropped onto each cube in the scene (it's in the help text too).

    var url = "http://farm3.static.flickr.com/2656/4250994754_6b071014d4_s.jpg";

    function Start () {
        // Start a download of the given URL
        var www : WWW = new WWW (url);

        // Wait for download to complete
        yield www;

        // Assign the downloaded image as this object's texture
        renderer.material.mainTexture = www.texture;
    }

Basically you tell it the URL of an image and, three lines of code later, that image is on the surface of your object, running live.

The other thing that is easy to do is drag the predefined “DragRigidBody” behaviour onto the cube(s). Then you can move the cubes while the scene is running by left-clicking on them and holding with the mouse.

Now the other clever piece (for the techies and programmers amongst us) is that you can take an object or collection of objects, bring it out of the scene and create a “prefab”. This prefab means that you can create lots of live instances of an object in the scene. However, if you need to change them, add a new behaviour etc., you simply change the root prefab and the changes are inherited by all the instances in the scene. You are also able to override settings on each instance.

So I have a cube prefab, with the “Go get an image” script on it.

I drag a few of those into the scene and for each one I can individually set the URL they are going to use. All good Object Orientated stuff.
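The prefab idea above can be modelled in a few lines of plain JavaScript. This is only a toy sketch and not how Unity implements prefabs: `cubePrefab`, `instantiate` and the example.com URL are made-up names for illustration, and prototype inheritance simply stands in for the link between a root prefab and its instances.

```javascript
// Toy model only: Unity's prefab system is not implemented this way.
// The root "prefab" holds the default settings shared by every cube.
const cubePrefab = {
  url: "http://farm3.static.flickr.com/2656/4250994754_6b071014d4_s.jpg",
  draggable: false,
};

// Hypothetical helper: each instance inherits from the prefab via its
// prototype and keeps only its own overrides as own properties.
function instantiate(prefab, overrides) {
  return Object.assign(Object.create(prefab), overrides);
}

const cubeA = instantiate(cubePrefab, {});
const cubeB = instantiate(cubePrefab, { url: "http://example.com/other_s.jpg" });

// Change the root prefab once; every instance sees the new setting.
cubePrefab.draggable = true;

console.log(cubeA.draggable, cubeB.draggable); // both inherit the change
console.log(cubeA.url === cubePrefab.url);     // cubeA uses the prefab's URL
console.log(cubeB.url);                        // cubeB keeps its own override
```

The point of the sketch is the last three lines: one change to the root reaches every cube, while a per-cube URL survives it.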

This is not supposed to be a state of the art game :), but in this sample you can see how the drag and drop works, and move around among live images I drag in from my Flickr.

Click in the view, then W and S move you forwards and back; left click and hold the mouse over a block to move it around.

Downward gravity is reduced (just a number) and of course the URLs could be entered live and changed on the fly. I only used the square thumbnail URLs from my Flickr photos so they are not high quality 🙂
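Those square thumbnail URLs follow a regular pattern, so they are easy to build in code. Here is a minimal sketch in plain JavaScript, assuming the `farmN.static.flickr.com` layout seen in the script earlier; `flickrImageUrl` is a hypothetical helper, not part of Unity or the Flickr API, and the `s` suffix is the one that selects the small square thumbnail.

```javascript
// Hypothetical helper: builds a Flickr static image URL from its parts.
// Assumes the farm/server/id_secret_size layout used by the URL in this post.
function flickrImageUrl(farm, server, id, secret, size) {
  // size is a one-letter suffix ("s" = small square); omit it for the default size
  const suffix = size ? "_" + size : "";
  return "http://farm" + farm + ".static.flickr.com/" +
    server + "/" + id + "_" + secret + suffix + ".jpg";
}

// Rebuild the thumbnail URL used by the cube script.
const thumb = flickrImageUrl(3, 2656, "4250994754", "6b071014d4", "s");
console.log(thumb);
```

Dropping the suffix or swapping it for another size letter should give a bigger image, at the cost of a larger download for each cube.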

This is also not a multiuser example (busy doing lots of that with Smartfox server at the moment) but it is just so accessible to be able to make and publish ideas.

The code is less complicated than the HTML source of this page, I would suggest. It's also free to do!