Once again I am back on the trail of a good voice solution to use in Unity3d for multi-user situations. I have done a good few projects using a variety of solutions, mainly an adjusted version of the now defunct USpeak (as in removed from the Asset Store and replaced with Daikon Forge Voice) combined with a self-hosted Photon server, and I think it must be time to find a more scalable solution. Very often voice traffic swamps any server that is also dealing with position and data model synchronisation across the network. This makes sense. A position update is only a few bytes, even for something moving a long distance, whereas an audio stream is a constant flow of data in and out of the server.

Whilst there is a huge focus on VR for the eyes, communicating needs more than one channel, and voice is one of the most important.

A voice connection can be 100 Kbps per person, so without different tuning or priorities the regular multiplayer data messages, even reliable ones, will get lost in the noise of an open mic.
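To put a rough number on that, here is a back-of-envelope sketch. It assumes the worst case of an all-open-mic room where the server simply relays each speaker’s stream to every other participant (no mixing, no silence suppression) and reuses the ~100 Kbps per-person figure above. The function name is just for illustration.

```python
def relay_bandwidth_kbps(users: int, per_stream_kbps: float = 100.0) -> dict:
    """Inbound and outbound server load for an all-to-all voice relay (worst case)."""
    if users < 0:
        raise ValueError("users must be non-negative")
    inbound = users * per_stream_kbps                        # one stream up from each talker
    outbound = users * max(users - 1, 0) * per_stream_kbps   # each stream relayed to everyone else
    return {"inbound_kbps": inbound, "outbound_kbps": outbound}


for n in (2, 5, 10, 20):
    load = relay_bandwidth_kbps(n)
    print(f"{n:>2} users: in {load['inbound_kbps']:.0f} Kbps, "
          f"out {load['outbound_kbps'] / 1000:.1f} Mbps")
```

The inbound load grows linearly with the number of people, but the relayed outbound load grows roughly with the square, which is why the small, occasional position messages get drowned out.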

My projects up to now have ended up using push-to-talk, or even my scarily complicated dial, ring, answer/engaged phone simulator, to cut down the amount of voice traffic. Raising a hand to speak works in many formal situations but not in social collaboration.

So whilst we can squeeze voice packets onto the same socket server as the rest of the “stuff”, it feels like it should be a separate service and thread.

Oddly, there is not a solution just sitting there for Unity3d. It seems that many of the existing voice clients for games and related services just don’t play well in the Unity3d/C# environment.

Unfortunately this seems to be down to the generic, cross-platform nature of Unity3d (which is a major strength) compared with the PC-specific architecture those clients rely on, particularly in how they deal with shared memory and pipes.

There are a lot of voice servers out there for games. Mumble/Murmur is a major one. It is open source and appears to bolt on top of many games, even supporting 3D positional audio.

Many games are, of course, closed code bases, so Mumble has to piggyback on top of the game or get a little help from the game developers to drop some hooks in. The main problem seems to be the use of shared memory versus a more closed boundary between applications. Obviously a shared memory space for communication can bring problems and hacks. Strangely, it was shared memory and its various uses that let us do the early client server work back in the 90’s, scraping a terminal screen to make a legacy CICS application work with a fancy GUI!

Initially I figured that there would be a C# client for Mumble that any of us could drop into Unity3d and away we go. However, there are only a few unfinished implementations or attempts. Connecting to Mumble and dealing with text is fine, but voice and the various encoding implementations seem to be the sticking point. Mumble also has a web interface implemented through something called Ice, which is another Remote Procedure Call set of interfaces. Again it seems to be focussed on building with C++ or PHP. Whilst Ice for .NET exists, it does not work on all platforms. I am still looking at this, though, as surely if a web interface works we can get it to work in Unity3d. Of course the open source world is wide and diverse, so there is always a chance I am missing something.

If a Mumble or similar client can exist in a Unity3d context, then we have our solution. It is nice to have the entire interface you need inside Unity3d, not side-loaded or overlaid. However, one solution may be to implement the web interface next door to a Unity interface and use plugin-to-webpage communication as the control. This is fraught with errors: browsers, DOMs, script failures etc. What I really want is just a nice drop-in Mumble client hooked up to the shiny new UI.

I looked at the others in the area. TeamSpeak is one of the biggest, but it incurs licensing charges, so it is not very open source minded. Ventrilo and Vivox seem to have fallen away.

Then of course there are the actual phone VoIP packages and standards. Maybe they offer the answer. I used FreeSWITCH with OpenSim to provide a voice channel, so maybe I need to look at those again.

Or another solution could be persuading socket server applications like Photon that they need to talk to more than one server. At the moment the assumption is one server connection (though load balancing may occur further on, on the server itself). If the client knew to talk to a differently configured machine and network that was happy to deal with the firehose of audio, while the normal movements went to the server configured to deal with normal movements, then we might have a solution.
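A minimal sketch of that idea, assuming a client-side router that picks an endpoint by message channel. The `Endpoint` and `SplitRouter` classes, the host names and the port numbers are all hypothetical; `send` just records payloads so the routing logic can be seen in isolation, whereas in a real client it would wrap whatever Photon or socket connection is in use.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    host: str
    port: int
    sent: list = field(default_factory=list)

    def send(self, payload: bytes) -> None:
        # Placeholder for a real network send: just record what would go out.
        self.sent.append(payload)


class SplitRouter:
    """Send voice frames to a dedicated voice server, everything else to the game server."""

    def __init__(self, game: Endpoint, voice: Endpoint):
        self.routes = {"voice": voice}
        self.default = game

    def send(self, channel: str, payload: bytes) -> Endpoint:
        endpoint = self.routes.get(channel, self.default)
        endpoint.send(payload)
        return endpoint


# Hypothetical hosts and ports, for illustration only
game = Endpoint("game.example.com", 9000)
voice = Endpoint("voice.example.com", 9001)
router = SplitRouter(game, voice)
router.send("position", b"\x01\x02")   # small state update -> game server
router.send("voice", b"\x00" * 160)    # audio frame -> voice server
```

Anything not explicitly routed falls through to the game server, so adding the voice connection does not change how the existing position and state messages travel.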

Either way, it’s not straightforward and hasn’t been totally solved yet, or if it has, everyone is keeping quiet (yes, that’s ironic when talking about VoIP!)

Let me know if you do have some great open source or open-sourceable solution.


Unreal Engine – an evolution

As you may have noticed, I am a big fan of Unity3d. It has been the main go-to tool for much of my development work over the years. A while back I had started to look at Unreal Engine too. It would be remiss of me to just say “Unity is the way I have always done things, so I will stick with that” 😉 My initial look at Unreal Engine, though, left me a little cold. This was a few years ago when it started to become a little more available. As a programmer with a background in C++, I was more than happy to take on the engineering challenge. However, it was almost too much being thrown at me in one go. There was a great deal of focus on the graphics side of things. It felt, at the time, more like a visual designer’s tool with the code hidden away. This was different from Unity3d, which seemed to cross that workflow boundary, offering simple code with complicated graphics, or vice versa.

The new version of Unreal Engine, now free unless you make a lot of money building something with it (in which case you pay), seems a much friendlier beast. It has clearly taken on board the way Unity3d does things: that initial experience, and the packaging of various types of complexity so that you can unwrap and get down to the things you know without it getting in the way of the things you don’t.

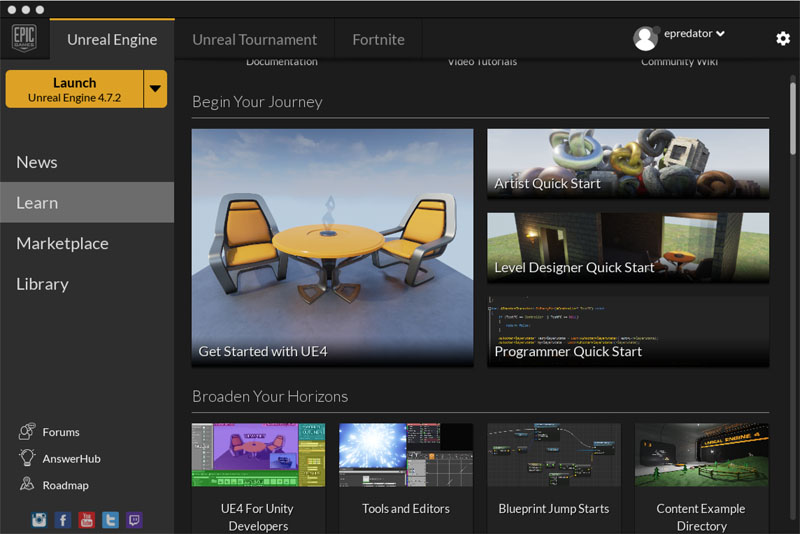

The launcher gives access to the tool but also acts as a hub for other information and for the marketplace. I can’t remember this from the last time I looked at Unreal, but it is very obvious now.

The one document that leapt out is UE4 for Unity developers. This addresses the differences and similarities between the two environments. Some of it is obviously a bit of “this is why we are better”, and in some cases not strictly correct, particularly on object composition. However, it is there and it does help. It recognises how huge Unity3d is, rather than taking the slightly more arrogant “we know best” stance that the toolset seemed to have. That is just a personal opinion and a feeling. That may seem odd for a software dev tool, but when you work with these things you get a sense of who they are for and what they want to do. Unity3d has grown from humble beginnings as an indie tool into a AAA one. Unreal Engine obviously had to start somewhere, but it was an in-house toolkit that grew and grew making AAA titles, then burst out into the world as a product. They both influence one another, but here it is the influence of Unity3d on Unreal Engine I am focussing on.

Also on this launcher are the quickstarts, showing the roles of artist, level designer and programmer as different entry points. Another good point: talking the right language at the start.

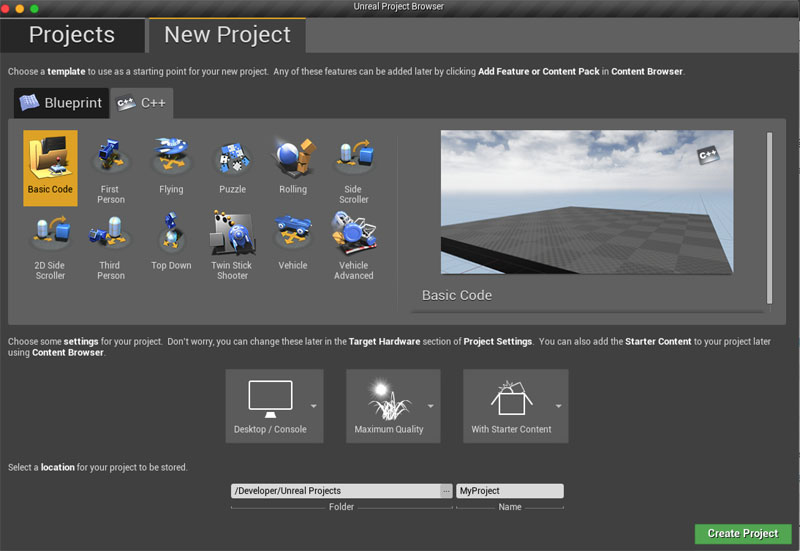

Unity has a lot of sample projects and assets, and some great tutorials. Unreal Engine now has this set of starter projects in a new project wizard. It is easy for more experienced developers to sniff at these, but as a learning tool, or for prototyping, being able to pick from these styles of project is very handy.

I had a number of “oh, that’s how Unreal works!” moments via these. First person, puzzle, 2D side scroller, twin stick shooter etc. are all great. Unity3d does of course have 2D or 3D as a starting point for a project, though I have always found 2D a bit strange in that environment, as I have built 2D in a 3D environment anyway.

The other interesting thing here is the choice of a C++ version or a Blueprint version. Blueprint is Unreal Engine’s visual programming environment. Behaviours and object composition are described through a visual programming facade. A Blueprint can mix and match with C++ and shares some similarity with a Unity3d prefab, though it describes more of the interactions through visual composition rather than just exposing variables and functions/events as a prefab does. Whilst Blueprints may help people who don’t want to type C++, like many visual programming environments it is still really programming. You have to know what is changing, what is looping, what is branching etc. It is a nice feature and option, and the fact that you are not locked exclusively into that mode makes it usable.

Unreal Engine also seems happy to work on a Mac. Despite much of the documentation mentioning Visual Studio and Windows, it plays well with Xcode. It has to, really, to be able to target iOS. So this was another plus in the experience.

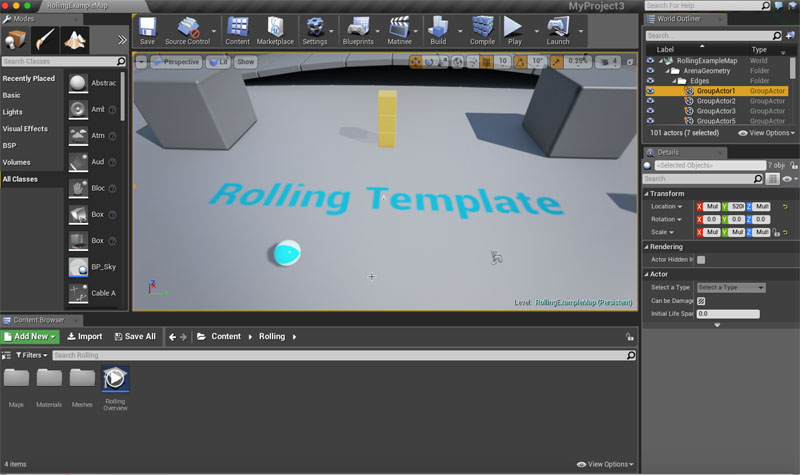

The main development environment layout by default is similar to Unity3d too. All this helps anyone to have a look at both and see what works for them.

I am not a total convert yet. I still need to explore the multiplayer/server side of things and the ability to interface with other systems (which all my work ends up needing to do). However, I am not quite so turned off by it now. It seems a real contender in my field. So, just like all these things, you have to give it a go and see how it feels.

Number 5 is alive (Unity3d 5.0)

Yesterday at GDC Unity3d 5.0 was released. As a long-time user of it, this is an interesting time. Many of the fancier features that help graphic designers make things look nice are not that high on my list of needs, however it’s all very welcome.

As a pro licence holder I also now seem to get some extra discounts in the store and a few perks which is nice 🙂

I had tried the beta of 5.0, but I was mostly on 4.6, as that is the version all my current projects use, and it introduced the shiny new user interface code.

Before wrecking any projects with imports and checking all the old stuff worked I thought I would just throw together some things with the new basic prefabs and assets.

There is a nice ready rigged car and also a couple of planes in the kit bag now.

So…

I created a terrain, imported some SpeedTree trees from the asset store. Asked it to mass place the trees for me. I added a wind zone to make the trees blow around.

I then dropped the car prefab into the scene and made it blue. (That gave me the car motion on keys, sounds, skids etc.)

The camera I moved to attach to the car.

Just for fun I dropped a few physics objects in it. Spheres and blocks.

So now there is a car world to raz around in knocking things over.

All this took about 15 minutes. It is here (if you have the Unity plugin). Make sure you click to give the world focus, then the arrow keys work to drive around.

OK it is not quite Forza Horizon but as a quick dive into Unity3d it was a good test 🙂

I then built an HTML5 version. This is slightly more clunky, as it is early days for HTML5 compared with the web player. It is also 200MB as opposed to the 30MB of the player version, which makes sense as the HTML5 version has to bring its own player-type scripts. So rather than have you sit and look at a blank screen, the HTML5 version is here on Dropbox.

Even with Unreal Engine now out there for free, Unity3d seems much more accessible to a techie.

I am looking forward to exploring some of the new stuff like the mixing desk for audioscapes. I also seem to have Playmaker free to download as part of “level 11” on the shop. That lets you create more flow chart based descriptions of camera pans etc.

The extra elements in the animation system also look intriguing.

As Unity3d covers the entire world of work when it comes to games, it is hard for it to be great at everything, but it does let anyone, techie, designer, musician, animator etc., have a look at one another’s worlds.

I think the next project, in 5.0 will be the most interesting yet.

MergeVR – a bit of HoloLens but now

If you are getting excited and interested, or just puzzled about what is going on with the Microsoft HoloLens announcement, and can’t wait the months or years before it comes to market, then there are some other options: very real, very now.

Just before Christmas I was very kindly sent a prototype of a new headset unit that uses an existing smartphone as its screen. It is called MergeVR. The first one like this we saw was Google Cardboard, Google’s almost satirical take on the Oculus Rift: a fold-up box that let you strap your Android phone to your face.

MergeVR is made of very soft, comfortable, spongy material. Inside are two spherical lenses that can be slid in and out laterally to adjust for the spacing of your eyes and get a comfortable view.

Rather like the AntVR I wrote about last time, this uses the principle of one screen split into two views. The MergeVR uses your smartphone as the screen, and it slides comfortably into the spongy material at the front.

Using an existing device has its obvious advantages. The smartphones already have direction sensors in them, and screens designed to be looked at close up.

MergeVR is not just about the 3D experience of virtual reality (one where the entire view is computer generated). It is, as its name Merge suggests, about augmented reality. In this case it is an augmented reality that takes your direct view of the world and adds data and visuals to it. This is known as a magic lens: you look through the magic lens and see things you would not normally be able to see. This is as opposed to a magic mirror, where you look at a fixed TV screen to see the effects of a camera view merged with the real world.

The iPhone camera (in my case) has a slot to see through in the MergeVR. This makes it very different from some of the other Phone On Face (POF, a made-up acronym) devices. The extra free device I got with the AntVR, the TAW, is one of these non-pass-through POFs. It is a holder and lenses with a folding mechanism that adjusts to hold the phone in place. With no pass-through it is just for watching 3D content.

AntVR TAW

Okay, so the MergeVR lets you use the camera, see the world, and watch the screen close up without holding anything. The lenses make your left eye look at the left half of the screen and your right eye at the right half. One of the demo applications is instantly effective and has a wow factor. Using a marker-based approach, a dinosaur is rendered in 3D on the marker. Marker-based AR is not new, and neither is iPhone AR, but the stereoscopic hands-free approach, where the rest of the world is effectively blinkered for you, adds an extra level of confusion for the brain. Normally, if you hold a phone up to a picture marker, the code spots the marker, its orientation and its relative position in the view, then renders the 3D model on top. So if you or the marker move, the model moves too. When holding the iPhone up you can of course still see around it, rather like holding up a magnifying glass (magic lens, remember). When you POF, though, your only view of the actual world is the phone’s camera view. So when you see something added and you move your body around, it stays there in your view. It is only the slight lag, and the fact that the screen is clearly not the same resolution or lighting as the real world, that stops you believing it totally.

The recently previewed Microsoft HoloLens and the yet-to-be-seen, Google-funded Magic Leap are a next step that removes the screen. They let you see the real world, albeit through some panes of glass, and then use projection tricks near the eye, probably very similar to Pepper’s ghost, to adjust what you see and how it is shaded, coloured etc., based on deep sensing of the room and environment. It is markerless, room-aware blended reality, using both the physical and the digital.

Back to the MergeVR. It also comes with a Bluetooth controller: a small handheld device that lets you talk to the phone. Obviously, with the phone in POF mode you can’t reach the touch screen to press any buttons 🙂 Many AR apps and examples like the DinoAR simply use your head movements and the sensors in the phone to determine what is going on. Other things, though, will need some form of user input. The phone’s camera can see hands, but without a Leap Motion controller or a Kinect to sense the body, a simpler mechanism like this controller can be employed.

However, this is where MergeVR gets much more exciting and useful for any of us techies and metaverse people. The labs are not just thinking about the POF container but about the content too. A Unity3d package is being worked on. This provides camera prefabs (rather like the Oculus Rift one) that, when running, split the Unity3d view into a stereo camera pair with the right shape, size and perspective for the MergeVR view. It also provides access to the Bluetooth controller inputs.
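As a rough illustration of what such a stereo camera prefab has to work out, the sketch below computes side-by-side viewport rectangles in normalised (x, y, width, height) screen units, the same convention Unity uses for `Camera.rect`, plus a sideways offset for each eye camera. The 64 mm eye separation is an assumed typical value rather than a MergeVR figure, and this is not the MergeVR package’s code.

```python
from typing import NamedTuple, Tuple


class EyeSetup(NamedTuple):
    viewport: Tuple[float, float, float, float]  # normalised (x, y, width, height)
    offset_x: float  # sideways shift of the eye camera from the head position, metres


def side_by_side(eye_separation_m: float = 0.064) -> dict:
    """Left eye renders to the left half of the screen, right eye to the right half."""
    half = eye_separation_m / 2.0
    return {
        "left": EyeSetup((0.0, 0.0, 0.5, 1.0), -half),
        "right": EyeSetup((0.5, 0.0, 0.5, 1.0), half),
    }


def eye_aspect(screen_w_px: int, screen_h_px: int) -> float:
    """Aspect ratio of one eye's viewport on a landscape phone screen."""
    return (screen_w_px / 2) / screen_h_px


for eye, setup in side_by_side().items():
    print(eye, setup.viewport, f"offset {setup.offset_x:+.3f} m")
```

Each eye gets half of a landscape screen, so its camera needs an aspect ratio of half the screen width over the full height, otherwise the view looks squashed through the lenses.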

This means you can quickly build MergeVR 3D environments and deploy them to the iPhone (or Android). Combine this with some of the AR toolkits and you can make lots of very interesting applications, or simply add 3D modes to existing ones. With the new Unity3d 4.6 user interface it will be even easier to build heads-up displays.

So within about 2 minutes of starting Unity I had a 3D view up on the iPhone in the MergeVR using Unity Remote. The only problem I had was using the USB cable for quick Unity Remote debugging, as the left-hand access hole was a little too high. There is a side access on the right, but the camera needs to be facing that way. Of course, being nice soft material, I can just make my own hole in it for now. It is a prototype after all.

It’s very impressive, very accessible and very now (which is important to us early adopters).

Let’s get blending!

(Note the phone is not in the headset as I needed it to take the selfie 🙂)

An interesting game tech workshop in Wales

Last week I took a day out from some rather intense Unity3d development to head off to North Wales to Bangor. My fellow BCS Animation and Games Dev colleague Dr Robert Gittins invited me to keynote at a New Computer Technologies Wales event on Animation and Games 🙂

It is becoming an annual trip to similar events and it was good to catch up with David Burden of Daden Ltd again as we always both seem to be there.

As I figured that many of the people there were going to be into lots of games tech already, I did not do my usual type of presentation, well not all the way through anyway. I decided to help people understand the difference between developing in a hosted virtual world like Second Life and developing from scratch with Unity3d. This made sense as we had Unity3d on the agenda and there were also projects from Wales that were SL related, so I thought it a good overall intro.

I have written about the difference before back here in 2010 but I thought I could add a bit extra in explaining it in person and drawing on the current project(s) without sharing too much of things that are customer confidential.

I did of course start with a bit about Cool Stuff Collective and how we got Unity3d on kids’ TV back in the Halloween 2010 edition. This was the show that moved us from CITV to ITV prime Saturday morning.

I added a big slide of things to consider in development that many non-game developers and IT architects will recognise. Game tech development differs in content from a standard application, but the infrastructure is very similar. The complication is in the “do something here” boxes of gameplay and the specifics of real-time network interaction between clients, which is different from many client-server type applications (like the web).

After that I flipped back from tech to things like Forza 5 and in-game creation of content, Kinect and Choi Kwang Do, Project Spark and of course the Oculus Rift. I was glad I popped that in, as it became a theme throughout the pitches and most people mentioned it in some way, shape or form 🙂

It was great to see all the other presentations too. They covered a lot of diverse ground.

Panagiotis Ritsos from Bangor University gave some more updates on the challenges of teaching and rehearsing language interpretation in virtual environments with EVIVA/IVY, the Second Life projects and now the investigations into Unity3d.

Llyr ap Cenydd from Bangor University shared his research on procedural animation and definitely won the prize for the best visuals, as he showed his original procedural spider and then his amazing Oculus Rift deep sea experience with procedurally generated animations of dolphins.

Just to help in case this seems like gobbledegook: very often animations have been “recorded”, either by someone or something being filmed in a special way that captures their movements and makes them available digitally as a whole. Procedural generation instead senses and responds to the environment and to the construction of the thing being animated. Things are not recorded but happen in real time because they have to. An object can be given a push or an impulse to do something, and the rest is discovered by the collection of bits that make up the animated object. It is very cool stuff!
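A toy illustration of that difference, and not Llyr’s method: instead of playing back recorded positions, the sketch below gives a single joint, modelled as a damped spring, an impulse and lets the motion emerge from the simulation frame by frame. The stiffness, damping and 60 fps timestep are arbitrary assumed values.

```python
def simulate_impulse(impulse, steps, dt=1 / 60, stiffness=40.0, damping=6.0, mass=1.0):
    """Displacement of a damped spring 'joint' per frame after a single push."""
    x = 0.0
    v = impulse / mass  # an impulse changes velocity instantly
    trace = []
    for _ in range(steps):
        force = -stiffness * x - damping * v  # spring pulls back, damper resists motion
        v += (force / mass) * dt              # semi-implicit Euler: update velocity first
        x += v * dt                           # then position, using the new velocity
        trace.append(x)
    return trace


# A recorded animation would be a fixed list of positions played back frame by frame.
# Here the motion is computed from the push instead.
small = simulate_impulse(1.0, steps=120)
big = simulate_impulse(2.0, steps=120)
print(f"peak after small push: {max(small):.3f}, after big push: {max(big):.3f}")
```

Nothing here stores the shape of the movement. Double the push and the joint swings twice as far, which is the sense-and-respond behaviour a recorded animation cannot give you.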

Just before the lunch break we had Joe Robins from Unity3d, the community evangelist and long-term member of the Unity team, show us some of the new things in Unity 5 and have a general chat about Unity. He also ran a Q&A session later that afternoon. It was very useful, as there is always more to learn or figure out.

We all did a bit of a panel, with quite a lot of talk about educating kids in tech and how to just let them get on with it alongside the teachers, rather than waiting for teachers to become experienced programmers.

After lunch it was Pikachu time, or Pecha Kucha, whatever it is called 🙂 http://www.pechakucha.org: 20 slides of 20 seconds each in a fast-fire format. It is really good, covers lots of ground and raises lots of questions.

David Burden of Daden Ltd went first with VR: the Second Coming of Virtual Worlds, exploring the sudden rise of VR and where it fits in the social adoption and tech adoption curves. It is a big subject, and of course VR is getting a lot of press, as virtual worlds did. It is all the same, but with different affordances of how to interact. They co-exist.

Andy Fawkes of Bohemia Interactive talked about the Virtual Battlespace: From Computer Game to Simulation. His company has the Arma engine, which was originally used for Operation Flashpoint and now has a spin-off in the cult classic DayZ. He talked about the sort of simulations in the military space that are already heavily used and how that is only going to increase. An interesting question was raised about the impact of increasingly real simulations. His opinion was that no matter what we do currently, we all still know the difference, and that the real effects of war are drastically different. The training is about the procedures to get you through that effectively. There has been concern that drone pilots, who are in effect doing real things via a simulation, are too detached from the impact they have. Head to the office, fly a drone, go home to dinner. A serious but interesting point.

Gaz Thomas of The Game HomePage then gave a sparky talk on How to entertain 100 million people from your home office. Gaz is a budding new game developer. He has made lots of quick-fire games. Not trained as a programmer, he wanted to do something on the web, set up a website, and then started building games as a way to bring people to his site. This led to some very popular games, but he found he was cloned very quickly, so he now tries to get the mobile and web versions released at the same time. It was very inspirational and great to see such enthusiasm and get-up-and-go.

Ralph Ferneyhough of the newly formed Quantum Soup Studios talked about The New AAA of Development – Agile, Artistic, Autonomous. This was a talk about how being small and willing to try newer things is much more possible, and more needed, than the constant churn in the games industry of the sequel to the sequel of the sequel. The sums of money involved and the sizes of projects lead to stagnation. It was great to hear from someone who has been in the industry for a while branching out from corporate life. A fellow escapee, though from a different industry vertical.

Chris Payne of Games Dev North Wales gave the final talk on Hollywood vs VR: The Challenge Ahead. Chris works in the games industry and for several years has been a virtual camera expert. If you have tried to make cameras work in games, or played one where it was not quite right, you will appreciate that this is a very intricate skill. He also makes films and pop videos. It was interesting to hear about the challenges that attempting 360 VR films is going to pose for what is a framed 2D medium. Chris showed a multi-camera picture of a sphere with lenses poking out all around it, rather like the Star Wars training drone on the Millennium Falcon that Luke tries his light sabre with. This new camera shoots in all directions. Chris explained, though, that it was not possible to build one that was stereoscopic. The type of parallax and offsets that are needed can only really be done post filming. So a lot has to be done to make this giant 360 thing able to be interacted with in a headset like the Rift. However, that is just the start of the problems. As he pointed out, the language of cinema, the tricks of the trade, just don’t work when you can look anywhere and see anything. Sets can’t have crew behind the camera, as there is no behind the camera. Storytellers have to consider whether you are in the scene, and hence acknowledged, or a floating observer, and focus pulls to gain attention don’t work. Instead, game techniques to attract you to the key story elements are needed. Chris proposed that as rendering gets better, VR movies are more likely to be all real-time CGI in order to get around the physical problems of filming. It is a fascinating subject!

So it was well worth the 4am start to drive the 600-mile round trip and be back by 10pm 🙂

It’s got the lot – metaverse development

My current project has kept me pretty busy with good old fashioned hands on development. However, sometimes it is good to step back and see just how many things a project covers. I can’t go into too much detail about what it is for but can share the sort of development coverage.

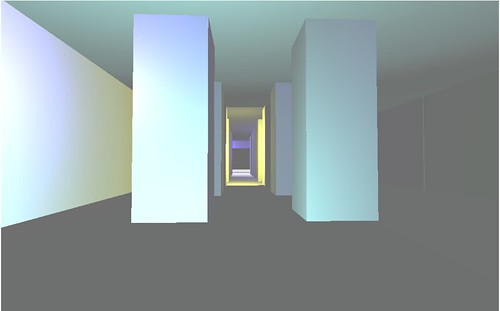

(*update 11/6/14 Just adding this picture from a later post that describes this environment)

It is a Unity3d multi-user environment with point and click. It works on the web, so it needs a socket server to broker the network communications, which means a Photon Server. That Photon Server is not on their cloud but on a separately hosted box with a major provider, so it needs my sys-admin attention to configure it and keep it up to date.

The Unity3d system needs to be logged into and to record things that have happened in the environment. So I had to build a separate set of web pages and PHP to act as the login and the API for the Unity3d web plugin to talk to. This has to live on the server, of course. As soon as you have logins and data, users etc., you need a set of admin screens and code to support that too.

The unity3d system also needs voice communication as well as text chat. So that’s through Photon too.

The actual Unity3d environment has both regular users and an admin user in charge. So there are lots of things flowing back and forth to keep in sync across the session and to pass to the database. All my code is in C#, though sometimes a bit of JS will slip in. We have things like animations using the animation controller and other Unity goodies like NavMesh in place too.

I am working with a 3D designer, so this is a multi-person project. So I have had to set up Mercurial repositories, hosting the repo on Bitbucket. We sync code and builds using Atlassian SourceTree, which is really great. I also use Atlassian’s JIRA for issue tracking. It means that when I check code in and push the repository, I can specify the JIRA reference number for the issue and it appears logged on the issue log. That is combined with all the handy notifications to everyone concerned.
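The issue linking works because the commit message contains the issue key. A small sketch of spotting those keys, assuming JIRA’s default key format of an uppercase project key, a hyphen and a number (for example a hypothetical project `VW` giving `VW-42`):

```python
import re

# Roughly JIRA's default issue key format: PROJECTKEY-123
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9_]+-\d+\b")


def issue_keys(commit_message: str) -> list:
    """Issue keys mentioned in a commit message, in order, without duplicates."""
    keys = []
    for key in ISSUE_KEY.findall(commit_message):
        if key not in keys:
            keys.append(key)
    return keys


print(issue_keys("VW-42 fix Arabic chat wrapping, see also VW-7 and VW-42"))
```

A pattern this loose will also match strings such as `UTF-8`, so anything built on it would want to check matches against the project keys you actually use.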

As I have a separate server component running, I had to set up another repository to protect and synchronise any server changes. The server has its own repository ID so it can pull the Unity3d builds to the server too.

There are complications in doing database communication, as Unity will only talk to the server it is served from when using the WWW classes. That makes local testing of multi-user a little tricky. The Unity dev environment is able to emulate the server name, but the built versions can’t, so a lot of testing bypass code is needed.

Oh, I forgot to mention: this is all in Arabic too. There is nothing wrong with that, except I don’t know the language. Also, Arabic is a right-to-left language, so things have to be put in place to ensure that text, chat etc. all flow correctly.

A few little problems arose with this. Unity has an excellent Arabic component that lets you apply right to left to any output text, however it does not work on input fields. That is a bit tricky when you need text chat, typing in questions and responses etc. So I have ended up writing a sort of new input field: I use a text label, but capture the keys and pass them to the Arabic fixer component, which returns the right-to-left version that is displayed in the label. I do of course lose things like the cursor and focus, as the label is an output device, but needs must.
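The key design point in a workaround like this is to keep the characters in the order they were typed and re-run the fixer over the whole string on every keystroke, rather than feeding the already-fixed text back in. A sketch, where `RtlInputField` is hypothetical and `naive_rtl_fix` is only a stand-in for the real Arabic fixer component (it reverses the string for a left-to-right renderer but does not do the contextual letter shaping a real fixer does):

```python
def naive_rtl_fix(logical: str) -> str:
    # Stand-in for the real fixer: reverse for a left-to-right renderer.
    # A real Arabic fixer also swaps in the joined (contextual) letter forms.
    return logical[::-1]


class RtlInputField:
    """Hypothetical label-based input: capture keys, keep logical text, show fixed text."""

    def __init__(self, fixer=naive_rtl_fix):
        self._chars = []      # characters in the order they were typed
        self._fixer = fixer

    def on_key(self, char: str) -> str:
        if char == "\b":      # backspace removes the last typed character
            if self._chars:
                self._chars.pop()
        else:
            self._chars.append(char)
        return self.display()

    @property
    def text(self) -> str:
        return "".join(self._chars)   # send/store this version

    def display(self) -> str:
        return self._fixer(self.text)  # show this version in the label


entry = RtlInputField()
for ch in "صباح":
    shown = entry.on_key(ch)
print(entry.text, "->", shown)
```

The logical `text` is what should go into chat messages and the database; the `display()` string is only for the label.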

In order to support Arabic in HTML and in the database, I had to ensure that the text encoding of everything is UTF-8. There is also an attribute, dir=rtl, that helps browsers know what to do with things. However, I have found that this works with HTML input fields but seems not to work with password fields. My password field will not let me type Arabic into it: the keyboard language chooser on the Mac reverts to UK and Arabic is greyed out. This caused me a lot of confusion when logging in.
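A quick way to see why everything has to be UTF-8: each Arabic letter needs more than one byte, and a single-byte encoding such as Latin-1 cannot represent them at all. A small check using the Arabic “good morning” greeting mentioned in this post:

```python
greeting = "صباح الخير"  # "good morning"

utf8_bytes = greeting.encode("utf-8")
print(len(greeting), "characters,", len(utf8_bytes), "bytes as UTF-8")

try:
    greeting.encode("latin-1")  # a single-byte encoding has no Arabic letters
except UnicodeEncodeError as err:
    print("Latin-1 cannot store it:", err.reason)
```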

There is also the confusion of what to type. It is relatively easy to cut and paste translated Arabic labels into strings, but when testing a chat system or user names I needed to know which English keystrokes generated which Arabic phrase (that is not a translation, that is a “how do I type something meaningful in Arabic and see it come up on the screen”).

Luckily my good friend Rob Smart came to my aid with “wfhp hgodn”, which equates to صباح الخير, a variant of good morning. It helped me see where and when I was getting the correct orientation. Again, this is not obvious when you start this sort of thing 🙂

Anyway, it’s back to layering and continuous improvement: fixing bugs, adding function. It is pretty simple on paper, but the number of components and systems, languages and platforms that this crosses is quite full on.

The project is a 3 person one, Project manager/producer, graphic designer and me. We all provide input to the project.

So if you need any help or work doing with Unity3d, C#, Photon, HTML, PHP, MySQL, RTL languages, cloud servers, Bitbucket, Mercurial, SourceTree or JIRA, then I am more than slightly levelled up, though there is always more to learn.

Phew!

Use Case 2 – real world data integration – CKD

As I am looking at a series of boiled down use cases of using virtual world and gaming technology I thought I should return to the exploration of body instrumentation and the potential for feedback in learning a martial art such as Choi Kwang Do.

I have of course written about this potential before, but I have built a few little extra things into the example using a new Windows machine with a decent amount of power (HP Envy 17″) and the Kinect for Windows sensor with the Kinect SDK and Unity3d package.

The package comes with a set of tools that let you generate a block man based on the joint positions. The controller script also has some options for turning on the user map and skeleton lines.

In this example I am also using Unity Pro, which allows me to position more than one camera and have each of them render to a texture displayed on another surface.

You will see the main block man appear centrally “in world”. The three screens above him are showing a side view of the same block man, a rear view and interestingly a top down view.
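In Unity each of those extra views is just another camera rendering to a texture. For an orthographic camera lined up with an axis, the effect on the joint positions amounts to dropping one coordinate, which this Python sketch shows. The axis convention (x right, y up, z away from the sensor), the joint names and the `project` helper are assumptions for illustration, not the Kinect SDK's own types.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]  # assumed axes: x right, y up, z away from sensor


def project(joints: Dict[str, Vec3], view: str) -> Dict[str, Tuple[float, float]]:
    """Flatten 3d joint positions for an axis-aligned orthographic view."""
    out: Dict[str, Tuple[float, float]] = {}
    for name, (x, y, z) in joints.items():
        if view == "front":
            out[name] = (x, y)    # as the sensor sees it
        elif view == "side":
            out[name] = (z, y)    # viewed from one side: depth becomes horizontal
        elif view == "rear":
            out[name] = (-x, y)   # from behind, left and right swap
        elif view == "top":
            out[name] = (x, z)    # looking down, height is lost
        else:
            raise ValueError(f"unknown view: {view}")
    return out
```

The top view is the interesting one because it discards height entirely and shows how the feet and hands move across the floor, which is exactly what is hard to see from the front.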

In the bottom right is the “me” with lines drawn on. The Kinect does the job of cutting out the background. All of this was recorded live running in Unity3d.

The registration of the block man and the joints isn’t quite accurate enough at the moment for precise Choi movements, but this is the old Kinect, the new Kinect 2.0 will no doubt be much much better as well as being able to register your heart rate.

The cut-out “me” is a useful feature, but it can only be projected onto the flat camera surface; it is not something that can be looked at from the left, right and so on. The block man, though, is made of actual 3d objects in space. The cubes are coloured so that you can see joint rotation.

I think I will reduce the size of the joints and try and draw objects between them to give him a similar definition to the cutout “me”.
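Drawing an object between two joints needs three things: where to put it, how long to make it and which way to point it. Here is a small Python sketch of that calculation using plain tuples for positions. In Unity the direction would then be turned into a rotation, for example with `Quaternion.FromToRotation`, and the length applied to the object's scale. `bone_between` is a hypothetical helper name.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def bone_between(a: Vec3, b: Vec3) -> Tuple[Vec3, float, Vec3]:
    """Return the centre, length and unit direction of a segment from joint a to joint b."""
    centre = tuple((p + q) / 2 for p, q in zip(a, b))
    delta = tuple(q - p for p, q in zip(a, b))
    length = math.sqrt(sum(d * d for d in delta))
    if length == 0:
        raise ValueError("joints coincide, so there is no direction")
    direction = tuple(d / length for d in delta)
    return centre, length, direction
```

Recomputing this every frame for each pair of connected joints (shoulder to elbow, elbow to wrist and so on) gives limbs that follow the tracked skeleton.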

The point here, though, is that game technology and virtual world technology can give a different perspective on a real-world interaction. Seeing techniques from above may prove useful, and is not something that can easily be observed in class. If that applies to Choi Kwang Do, then it applies to all other forms of real-world data. Seeing from another angle, exploring and rendering in different ways can yield insights.

It is also data that can be captured and replayed, transmitted and experienced at a distance by others. Capture, translate, enhance and share. It is something to think about: what different perspectives could you gain on the data you have access to?
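Capturing and replaying mostly means storing each frame of joint positions with a timestamp and being able to ask for the pose at any moment. The Python sketch below assumes joints arrive as a name-to-position dictionary per frame. `SkeletonRecording` is a hypothetical class, not part of the Kinect SDK.

```python
import bisect
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SkeletonRecording:
    """Timestamped joint frames that can be replayed locally or sent elsewhere."""
    times: List[float] = field(default_factory=list)
    frames: List[Dict[str, Vec3]] = field(default_factory=list)

    def capture(self, t: float, joints: Dict[str, Vec3]) -> None:
        if self.times and t < self.times[-1]:
            raise ValueError("frames must be captured in time order")
        self.times.append(t)
        self.frames.append(dict(joints))  # copy so later changes do not leak in

    def frame_at(self, t: float) -> Dict[str, Vec3]:
        """Most recent frame at or before time t (the first frame if t is earlier)."""
        if not self.frames:
            raise LookupError("nothing recorded")
        i = bisect.bisect_right(self.times, t) - 1
        return self.frames[max(i, 0)]
```

Looking frames up by time rather than by index means playback can run at a different rate from capture, for example slowed down to study a technique.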

A simple virtual world use case – learning by being there

With my metaverse evangelist hat on I have for many years, in presentations and conversations, tried to help people understand the value of using game-style technology in a virtual environment. The reasons have not changed, they have grown, but a basic use case is being able to experience something, to know where something is or how to get to it, before you actually have to. The following is not meant to show off any 3d modelling expertise; I am a programmer who can use most of the tool sets. I put this “place” together mainly to figure out Blender, to help the predlets build in things other than Minecraft. With a new Windows laptop complementing the MBP, I thought I would document this use case by example.

Part 1 – Verbal Directions

Imagine you have to find something, in this case a statue of a monkey’s head. It is in a nice apartment. The lounge area has a couple of sofas leading to a work of art in the next room. Take a right from there and a large number of columns lead to an ante room containing the artefact.

What I have done there is describe a path to something. It is a reasonable description, and it is quite a simple navigation task.

Now let’s move from words, the verbal description of placement, to a map view. This is the common one we have had for years: top down.

Part 2 – The Map

A typical map: you start from the bottom left. It is pretty obvious where to go: two rooms up, turn right, keep going and you are there. This augments the verbal description, or can work on its own. It is simple and quite effective, but it filters a lot of the world out in the simplification, mainly because that is what makes maps easy to draw. It also requires a cognitive leap to translate the map to the actual place.

Part 3 – Photos

You may have often seen pictures of places to give you a feel for them. They work too. People can relate to the visuals, but it is a case of you get what you are given.

The entrance

The lounge

The columned corridor

The goal.

Again in a short example this allows us to get quite a lot of place information into the description. “A picture paints a thousand words”. It is still passive.

A video of a walkthrough would of course be an extra step here, that is, more pictures one after the other. Again, though, it is directed. You have no choice in how to learn, how to take in the place.

Part 4 – The virtual

Models can now very easily be put into tools like Unity3d and published to the web to be walked around. If you click here, you should get a Unity3d page, and after a quick download (assuming you have the plugin 😉 if not, get it!) you will be placed at the entrance to the model, which is really a 3d sketch, not a full-on high-end photo-realistic rendering. You may need to click to give it focus before walking around. It is not a shared networked place, so it is not really a metaverse, but it has become easier than ever to network such models and places if sharing is an important part of the use case (as in the hospital incident simulator I have been working on).

The mouse will look around, and ‘w’ will walk you the way you are facing (‘s’ walks backwards, ‘a’ and ‘d’ step side to side). Take a stroll in and out, down to the monkey and back.
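These controls are the standard first-person scheme: the mouse sets the heading and the keys move you relative to it. This Python sketch shows the per-frame arithmetic, assuming a heading of 0 degrees faces +z and 90 degrees faces +x (Unity's convention with y up). The speed and the `walk_step` name are illustrative; Unity's standard assets ship a first-person controller that does this for you, along with collisions.

```python
import math
from typing import Set, Tuple


def walk_step(yaw_deg: float, keys: Set[str], speed: float, dt: float) -> Tuple[float, float]:
    """Ground-plane displacement (x, z) for one frame of WASD walking.

    yaw_deg is the mouse-look heading: 0 faces +z, 90 faces +x (assumed convention).
    """
    yaw = math.radians(yaw_deg)
    forward = (math.sin(yaw), math.cos(yaw))
    right = (math.cos(yaw), -math.sin(yaw))
    f = ("w" in keys) - ("s" in keys)  # +1 forward, -1 back, 0 if both or neither
    r = ("d" in keys) - ("a" in keys)  # +1 right, -1 left
    x = forward[0] * f + right[0] * r
    z = forward[1] * f + right[1] * r
    length = math.hypot(x, z)
    if length == 0:
        return (0.0, 0.0)
    scale = speed * dt / length  # normalise so diagonal walking is not faster
    return (x * scale, z * scale)
```

Multiplying by the frame time `dt` keeps walking speed the same whatever the frame rate of the machine running the model.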

I suggest that now you have a much better sense of the place: the size, the space, the odd lighting. The columns are close together, so you may have bumped into a few things. You may have lingered on the work of art. All of these tiny differences are putting this place into your memory. Of course finding this monkey is not the most important task you will have today, but apply the principle to anything you have to remember, conceptual or physical. Choosing your own way through such a model or concept is simple but much more effective, isn’t it? You will remember it longer and maybe discover something else on the way. It is not directed by anyone; it is your speed and your choice. This allows self-reflection in the learning process, which reinforces your understanding of the place.

Now imagine this model made properly, with nice textures and lighting, a photo-realistic place, and pop on a VR headset like the Oculus Rift, which in this case is very simple with Unity3d. Your sense of being there is enhanced even further, and it only takes a few minutes.

It is an obvious technology isn’t it? A virtual place to rehearse and explore.

Of course you may have spotted that this virtual place, built in Unity3d to walk around, also provided the images for the map and the photo navigation. Once you have a virtual place you can still do things the old way if that works for you. It’s a Virtual virtuous circle!

Talking heads – Mixamo, Unity3d and Star Wars

High-end games have increased people’s expectations of any experience they take part in that uses game technology. Unity3d lets any of us build a multitude of applications and environments, but it also exposes us to the breadth of skills needed to make interesting, engaging environments.

People, avatars and non player characters are one of the hardest things to get right. The complexity of building and texturing a mesh model of a person is beyond most people. Once built the mesh then has to have convincing bone articulation to allow it to move. That then leads to needing animations and program control of those joints. If it is complicated enough with a body then it gets even more tricky with the face. If a character is supposed to talk in an environment up close then the animations and structure required are even more complex. Not only that but if you are using audio, the acting or reading has to be convincing and fit the character. So a single avatar needs design, engineering, voice over and production skills all applied to it. Even for people willing to have a go at most of the trades and skills that is a tall order.

So it is great that companies like Mixamo exist. They already have some very good free rigged, animatable people in the Unity store that help us small operators get to some high-end graphic design quickly. They have just added to their portfolio of cool things with Mixamo Face Plus.

They have a unity plugin that can capture realtime face animation using video or a web cam. So now all techies have to do is figure out the acting skills and voice work in order to make these characters come alive. I say all 🙂 it is still a mammoth task but some more tools in the toolbox can’t hurt.

They have created a really nice animated short film using Unity which shows the results of this technology, blended with all the other clever things you can do in Unity3d. Mind you, take a look at the number of people in the credits 🙂

Even more high end though is this concept video using realtime high quality rendered characters and live performance motion capture in the Star Wars universe.

The full story, with direct quotes from Lucasfilm at a Technology Strategy Board meeting at BAFTA in London, is here. So maybe I will be able to make that action movie debut after all. There is of course a vector here to consider for the interaction of humans across distances mediated by computer-generated environments (or virtual worlds, as we like to call them 😉).

Jumping into LEAP

I had originally thought I would not bother with a LEAP controller. However new technology has to be investigated. That is what I do after all 🙂

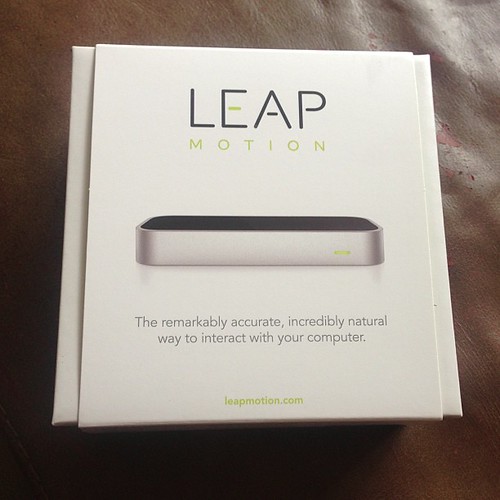

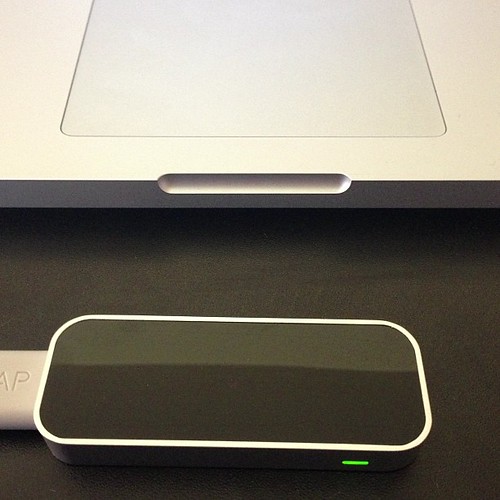

I ordered the LEAP from Amazon, it arrived very quickly. So whilst I might not have been an early adopter, and was not on the developer programme it is not hard to get hold of one now. It is £69.99 but that is relatively cheap for a fancy peripheral.

The self-proclamation on the box is interesting: “The remarkably accurate, incredibly natural way to interact with your computer”. My first impression is that it is quite accurate. However, as with all gesture-based devices, there is no tactile feedback, so you have to sort of feel your way through space to get used to where you are supposed to be.

However the initial setup demonstration works very well giving you a good sense for how it is going to work.

It comes with a few free apps via Airspace and access to another ecosystem to buy some more.

The first one I clicked on was Google Earth, but it was a less than satisfying experience: it is not obvious how to control it, so you end up putting the world into a Superman-style spin before plunging into the ocean.

I was more impressed with the nice target catching game DropChord (which has DoubleFine’s logo on it). This has you trying to intersect a circle with a chord and hit the right targets to some blasting music and glowing visuals. It did make my arms ache after a long game of it though!

What was more exciting for me was downloading the Unity3d SDK for LEAP. It was a simple matter of dropping the plugin into a Unity project and then importing a few helper scripts.

The main one, Leap Unity Bridge, can be attached to a game object. You then configure it with a few prefabs that will act as fingers and palms, press run and (if you have the camera pointing the right way) you see your objects appear as your fingers do.
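What the bridge does for you is largely bookkeeping: create an object from a prefab when a finger appears, move it while the finger is tracked and remove it when the finger disappears. Here is a minimal Python sketch of that bookkeeping, assuming each tracked finger carries a stable id from frame to frame. `FingerObjects` is hypothetical, the dictionaries stand in for Unity game objects, and this is not the Leap Unity Bridge's actual code.

```python
from typing import Callable, Dict, Iterable, Tuple

Vec3 = Tuple[float, float, float]


class FingerObjects:
    """Keeps one scene object per tracked finger id: spawn on appear,
    move while tracked, remove when the finger is lost."""

    def __init__(self, spawn: Callable[[int], dict], despawn: Callable[[dict], None]) -> None:
        self._spawn = spawn        # stands in for instantiating a prefab
        self._despawn = despawn    # stands in for destroying the object
        self.objects: Dict[int, dict] = {}

    def update(self, fingers: Iterable[Tuple[int, Vec3]]) -> None:
        """Call once per tracking frame with (finger id, tip position) pairs."""
        seen = set()
        for finger_id, tip in fingers:
            seen.add(finger_id)
            obj = self.objects.get(finger_id)
            if obj is None:
                obj = self.objects[finger_id] = self._spawn(finger_id)
            obj["position"] = tip
        for finger_id in list(self.objects):
            if finger_id not in seen:
                self._despawn(self.objects.pop(finger_id))
```

Swapping the spawned object for a particle emitter is all it takes to get the finger-trail effect that many of the Airspace apps use.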

Many of the apps on Airspace are particle pushing creative expression tools. So creating an object that is a particle generator for fingers immediately gives you the same effect.

It took about 10 minutes to get it all working (6 of those were downloading on my slow ADSL).

The problem I can see at the moment is that pointing is a very natural thing to do, and that works great, though of course the pointing is relative to where the LEAP is placed. So you need a lot of visual feedback and large buttons (rather like Kinect) in order to make selections. Much of that is easier with touch or with a mouse.
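Because the fingertip is measured relative to the device, an application has to decide which region of space above the LEAP maps onto the screen. This Python sketch maps a fingertip position from a caller-supplied box into screen pixels, clamps it to the edges and hit-tests a button. The box dimensions, `to_screen`, `hit` and `Button` are all hypothetical, not the LEAP SDK's API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Button:
    name: str
    x: float  # left edge, pixels
    y: float  # top edge, pixels
    w: float
    h: float


def to_screen(tip_x_mm: float, tip_y_mm: float,
              box: Tuple[float, float, float, float],
              screen: Tuple[int, int]) -> Tuple[float, float]:
    """Map a fingertip inside an assumed box above the device
    (min_x, max_x, min_y, max_y in mm) to screen pixels, clamped to the edges."""
    min_x, max_x, min_y, max_y = box
    u = min(max((tip_x_mm - min_x) / (max_x - min_x), 0.0), 1.0)
    v = min(max((tip_y_mm - min_y) / (max_y - min_y), 0.0), 1.0)
    width, height = screen
    return u * width, (1.0 - v) * height  # higher hand = nearer the top of the screen


def hit(buttons: List[Button], px: float, py: float) -> Optional[str]:
    """Name of the first button containing the point, or None."""
    for b in buttons:
        if b.x <= px <= b.x + b.w and b.y <= py <= b.y + b.h:
            return b.name
    return None
```

For example, with a box of (-100, 100, 100, 300) mm and a 1000×800 screen, a fingertip at (0, 200) lands at the centre, (500, 400). A small wobble of the hand moves the point by several pixels, which is why big buttons are needed.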

Where it excels though is in visualisation and music generation where you get a sense of trying to master a performance and get to feel you have an entire space to play with, not limiting yourself to trying to select a button or window on a 2d screen which is a bit (no) hit and miss.

I spent a while tinkering with Chordion Conductor that lets you play a synth in various ways. The dials to adjust settings are in the top row and you point and twirl your finger on the dials to make adjustments. It is a fun and interesting experience to explore.
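Twirling a finger on a dial can be turned into a value change by tracking the angle of the fingertip around the dial's centre and adding up the difference between samples. This is one possible approach, sketched in Python; I do not know how Chordion Conductor actually implements it. It assumes samples are frequent enough that the finger moves less than half a turn between them, and `twirl_angle` is a hypothetical name.

```python
import math
from typing import Iterable, Tuple


def twirl_angle(center: Tuple[float, float], path: Iterable[Tuple[float, float]]) -> float:
    """Total signed angle in degrees (counter-clockwise positive) swept by a
    fingertip circling a dial centre; the result can drive the dial value."""
    total = 0.0
    prev = None
    for x, y in path:
        a = math.atan2(y - center[1], x - center[0])
        if prev is not None:
            d = a - prev
            # unwrap across the -180/+180 degree boundary so full turns accumulate
            if d > math.pi:
                d -= 2 * math.pi
            elif d < -math.pi:
                d += 2 * math.pi
            total += d
        prev = a
    return math.degrees(total)
```

Accumulating the change, rather than reading the absolute angle, lets the dial be turned through several full rotations, the way a physical knob would.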

Just watch out where you are seen using the LEAP. You are either aggressively pointing at the screen, throwing gang signs or testing melons for ripeness in a Carry on Computing style.

I am looking forward to seeing if I can blend this with my Oculus Rift and Unity3d when it arrives though 🙂