I had originally thought I would not bother with a LEAP controller. However, new technology has to be investigated. That is what I do, after all 🙂

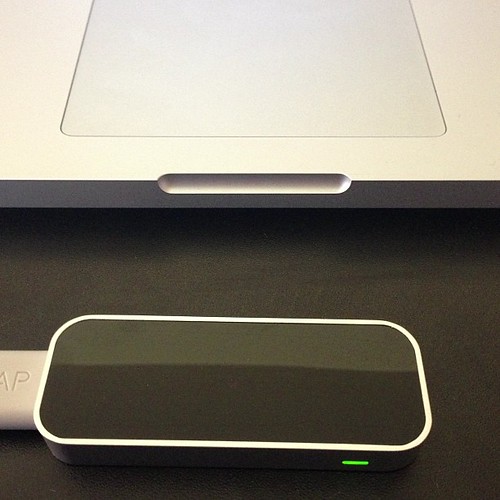

I ordered the LEAP from Amazon and it arrived very quickly. So whilst I might not have been an early adopter, and was not on the developer programme, it is not hard to get hold of one now. It is £69.99, but that is relatively cheap for a fancy peripheral.

The self-proclamation on the box is interesting: “The remarkably accurate, incredibly natural way to interact with your computer”. My first impressions are that it is quite accurate. However, as with all gesture-based devices, there is no tactile feedback, so you have to sort of feel your way through space to get used to where you are supposed to be.

The initial setup demonstration works very well, though, giving you a good sense of how it is going to work.

It comes with a few free apps via Airspace and access to another ecosystem to buy some more.

The first one I clicked on was Google Earth, but it was a less than satisfying experience. It is not that obvious how to control it, so you end up putting the world into a Superman-style spin before plunging into the ocean.

I was more impressed with the nice target-catching game DropChord (which has Double Fine’s logo on it). This has you trying to intersect a circle with a chord and hit the right targets to some blasting music and glowing visuals. It did make my arms ache after a long game of it though!

What was more exciting for me was to download the Unity3d SDK for LEAP. It was a simple matter of dropping the plugin into a Unity project and then importing a few helper scripts.

The main one, Leap Unity Bridge, can be attached to a game object. You then configure it with a few prefabs that will act as fingers and palms, press run, and (if you have the camera pointing the right way) you see your objects appear as your fingers do.

Many of the apps on Airspace are particle-pushing creative expression tools, so creating an object that is a particle generator for the fingers immediately gives you the same effect.

It took about 10 minutes to get it all working (6 of those were downloading on my slow ADSL).
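For anyone curious what "objects appear as your fingers do" boils down to, here is a minimal sketch of the idea in Python rather than Unity's C#. It is not the SDK's code and does not use the LEAP API. The names `Vec3`, `to_scene` and `place_markers` are made up for illustration, and the scale factor and the z-axis flip are assumptions about how tracked fingertip positions (which the LEAP reports in millimetres) might be mapped into a scene.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def to_scene(tip_mm: Vec3, scale: float = 0.01, flip_z: bool = True) -> Vec3:
    """Convert a fingertip position in millimetres into scene units.

    Both `scale` and `flip_z` are assumptions for this sketch: the scale
    shrinks millimetres to something camera friendly, and the flip stands
    in for a right-handed to left-handed coordinate conversion.
    """
    z = -tip_mm.z if flip_z else tip_mm.z
    return Vec3(tip_mm.x * scale, tip_mm.y * scale, z * scale)


def place_markers(tips_mm: dict[int, Vec3]) -> dict[int, Vec3]:
    """Return one marker position per currently visible finger id.

    Fingers missing from `tips_mm` simply get no marker, which is the
    equivalent of the finger object disappearing from the scene.
    """
    return {finger_id: to_scene(tip) for finger_id, tip in tips_mm.items()}


# Hypothetical frame of tracking data: two fingers visible.
frame = {1: Vec3(100.0, 200.0, -50.0), 2: Vec3(-30.0, 150.0, 20.0)}
markers = place_markers(frame)
```

In the Unity version the marker for each finger would be an instance of whatever prefab you configured, so swapping a plain sphere for a particle generator changes the look without touching the mapping.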

The problem I can see at the moment is with selection. Pointing is a very natural thing to do and it works great, though of course the pointing is relative to where the LEAP is placed. So you need a lot of visual feedback and large buttons (rather like Kinect) in order to make selections. Much of that is easier with touch or with a mouse.
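To make the large-button point concrete, here is a rough sketch of forgiving hit testing. It assumes the pointing position has already been normalised to the range 0 to 1 on each axis, with y increasing upwards. The `Button` class, `to_screen`, `pick` and the padding value are all hypothetical and not taken from any LEAP or Unity API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Button:
    name: str
    x: float       # left edge in pixels
    y: float       # top edge in pixels
    width: float
    height: float

    def contains(self, px: float, py: float, padding: float = 0.0) -> bool:
        """True if the point lies inside the button grown by `padding` pixels."""
        return (self.x - padding <= px <= self.x + self.width + padding
                and self.y - padding <= py <= self.y + self.height + padding)


def to_screen(nx: float, ny: float, screen_w: float, screen_h: float) -> tuple[float, float]:
    """Map a normalised pointer to pixels, clamping so it never leaves the screen."""
    nx = min(max(nx, 0.0), 1.0)
    ny = min(max(ny, 0.0), 1.0)
    # Screen y runs downwards, so the vertical axis is flipped.
    return nx * screen_w, (1.0 - ny) * screen_h


def pick(buttons: list[Button], px: float, py: float, padding: float = 20.0) -> Optional[Button]:
    """Return the first button hit, treating each one as slightly larger than drawn."""
    for button in buttons:
        if button.contains(px, py, padding):
            return button
    return None


buttons = [Button("play", 100, 100, 300, 200)]
px, py = to_screen(0.5, 0.5, 800, 600)
selected = pick(buttons, px, py)
```

The padding is the important part. A wobbly fingertip that lands just outside the drawn edge still selects the button, which is the same trick as making the buttons big in the first place.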

Where it excels, though, is in visualisation and music generation. There you get a sense of trying to master a performance and feel you have an entire space to play with, rather than limiting yourself to trying to select a button or window on a 2D screen, which is a bit (no) hit and miss.

I spent a while tinkering with Chordion Conductor, which lets you play a synth in various ways. The dials to adjust settings are in the top row, and you point and twirl your finger on the dials to make adjustments. It is a fun and interesting experience to explore.

Just watch out where you are seen using the LEAP. You are either aggressively pointing at the screen, throwing gang signs or testing melons for ripeness in a Carry On Computing style.

I am looking forward to seeing if I can blend this with my Oculus Rift and Unity3d when it arrives though 🙂
