On this week's Cool Stuff Collective, which has just aired, I got to talk a little about AR. Whilst we did not show any of the more traditional camera/marker overlay, for various reasons, I think we covered a lot of AR ground, from triggering with fiducial markers and creating magic mirrors all the way to the Vtech kids' video camera that acts as a live AR magic lens. I then mentioned display contact lenses as a future way of delivering information to us in the physical world.

It was particularly cool for me to be able to use the Junaio triggering Royal Mail stamps that kick in a video of Bernard Cribbins.

It's a real bind getting a video feed out of some of these smartphones; this was a Droid, but the iPhone is also very awkward. So whilst we did connect and did trigger the video, and it really does work, it was better for Archie Productions to edit in the actual video.

I think we may end up with some more AR content down the line though, as there are some really good products and demos, like the ones we got to see in Finland OEM 2010 using Total Immersion D’Fusion.

For the programme I had created a Junaio channel for Feeding Edge, in which you get a Cool Stuff text model floating above the Cool Stuff TV logo if you point at it. It's all very doable! It just did not come out on the screen too well without that pesky video feed, and screenshots don’t do AR justice.

Also, the website going live with some UK-only video did mean I could pop into SL and do some virtual world augmented reality.

Epredator getting to see G33K me streaming into a media texture, talking about another virtual world, the brilliant Lego Universe Online, which goes live in a few days. You have to love the meta loop!

Also great to see the CYGLO tyres running on the TV. More on those at http://www.nightbrighttyre.com/


Unity3d Version 3.0 is live and looking awesome

I have been having a look at the beta of Unity3d 3.0 for a while, but nothing is as good as it going gold and live. It comes packaged with a wonderful looking demo called Bootcamp. There is a version of the demo with a bit of an in-game cutscene before getting to the 3rd person part.

The simplicity of getting Unity3d running, i.e. it's nicely self contained, means you can just dive in and make things. There are some new scripts to help, like a 3rd person script, and a new demo character, a construction worker, with animations. It really could not be simpler, yet there are tonnes of features for the more pro focussed game programmer and graphic artist.

The demo uses a very large terrain with lots of detail and debris. The player character is using a locomotion system to animate over and around the obstacles. Physics is in full effect if you enter the derelict building and start shooting at windows and cans. Things deform, break and fly around.

It's all just sitting there on your hard drive, ready to explore and see how it's all created.

There are some handy hooks into MonoDevelop to allow editing of code, breakpoints and inspection as we have got used to on other development platforms. In true Unity style it just works.

If you have not already downloaded the free version, and you are in any way a techie or designer, go and get it now!

It really should be something in every school IT lesson too. The ability to make things happen with real programming behind it will help more kids understand programming and the sciences behind it.

I really wish we had had this when I was starting out, so now I want everyone to go and have a look.

Practicing 3d Scanning

I have borrowed a NextEngine 3d scanner for the Cool Stuff Collective shoot on Friday. It belongs to a professional movie animator and puppeteer, Craig Crane, who lives very near to here. It was amazing to see all his home setup kit and hear about the work he does with larger scanners, scanning in entire film set locations and then meshing them up for CGI special effects. He has a personal project too here.

The scanner software is Windows only and it seemed safer to Boot Camp my MacBook Pro just to make sure I got the performance. I have VMware and Parallels, both good, but when hardware and intensive gfx are needed direct Windows seems better.

The scanner comes with a motor driven turntable. You place the item on that, a fixed distance away from the scanner, and tell it how many frames to do (the minimum is 4). It then scans away, passing the lasers over the item.

As you can see I tried a few things

It is also very clever in that it generally stitches all these meshes together and allows you to trim and polish the mesh. If there are some things it could not see, like the top of a head, you simply turn the object 90 degrees and ask it to scan again, then align that mesh to fill in the gap. I did not do too much of that as I only need to do a basic scan to show the principle. The tidying and improving that a talented designer with a keen eye does do not drop into my coder art remit 🙂

The models are very detailed even on the low res scan. I had some success with the texturing too. It takes 2d photos and then maps them on the object.

A very impressive piece of kit, I am not sure I can justify buying it for myself, though with the new mesh support arriving in Second Life and the ability to drop these meshes into Unity3d (with a bit of decimation first) it would be a very useful tool.

Unity3d, F1, Google Maps mashup

A very neat application surfaced this weekend via tweets by @robotduck. An official Vodafone F1 application lets you build the profile of a track you race from the roads on Google Maps.

It's called Hometown, as they have clearly spotted that most people will razz around racetracks near their own homes.

Not only that, they let you add extra furniture to the tracks, landmarks and barriers, including your own text banners.

It is very cool and uses Unity3d.

Fiducial Markers and Unity 3d

I was looking around for a quick way to use the wonderful reacTIVision camera based marker tracking. Ideally I really wanted a fully working Reactable, but without a projector, the music and light software and the physical elements like a glass table I can't really do what I want to do in the time I need to do it.

I bumped into a project from a few years ago the tangiblaptop that was aimed at using the laptop screen rather than a projector to display the things that happen to the markers that are also placed on that surface but picked up by the camera. It looked good but I thought I needed something with a bit more variety.

Then I saw this: Uniducial. A small library and a couple of scripts to drop into Unity3d.

Within seconds I had objects appearing and disappearing based on the markers they could see in the camera view.

(I have done a few things with these before, as did Roo back in the day.)

However I think the unity3d gadget is going to be very useful indeed 🙂

Grab an EvolverPro fully rigged model free before Aug 17th

Over at EvolverPro the guys are having a special offer that lets you download a model for free. Usually the fully rigged model (that you can use in things like unity) is $39.

I took the opportunity of both putting some trousers on one of my avatars and downloading the rig whilst wearing my Feeding Edge Tshirt.

So I now have 2 fully rigged and poseable Avatars that I can use in 3d packages and in my Unity3d Demos.

To quote Tim Blagden from Evolver “one free character to anyone who enters the coupon code pro817 and clicks add between now and August 17th. Help yourself to a character and spread the word.”

Rezzable, Unity3d, Opensim FTW

The team at Rezzable have a live demo up and running using Unity3d talking to Opensim and getting some of the geometry and packages from the Opensim server.

It is good to see this sort of experimentation happening and will lead to yet more people trying things out I think. I know there are more out there too so maybe there is some sort of unity3d coalition or opensource style federation that could get together and share to drive this forward?

As I have written before, there are all sorts of options (my most read post ever, so it must be of interest) for how this can be mixed and matched. Some of it is smoke and mirrors for user experience, but nonetheless immersive.

The key is to consider other ways to achieve the goals of a particular virtual world experience, from immersion to whether things need to be user created or not.

So well done Rezzable, and thank you for sharing it so far 🙂

Opensim/Second Life Vs Unity3d

I actually get asked a lot about how Unity3d stacks up against Opensim/Second Life. This question is usually based on wanting to use a virtual world metaphor to replicate what Opensim/Second Life do but with a visually very rich browser based client such as Unity3d.

There is an immediate clash of ideas here though, and it takes a degree of understanding to see that Unity3d is not comparable in the usual sense with Second Life/Opensim.

At its very heart, you really have to consider Opensim and Second Life as being about a server that happens to have a client to look at it. Unity3d is primarily a client that can talk to other things such as servers, but it really does not have to in order to be what it needs to be.

Now this is not a 100% black and white description but it is worth taking these perspectives to understand what you might want to do with either type of platform.

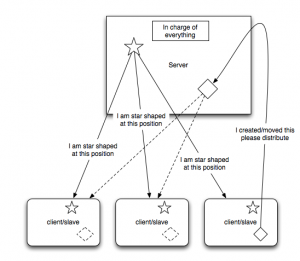

Everything from an Opensim style server is sent to all the clients that need to know. The shapes, the textures, the position of people etc. When you create things in SL you are really telling a server to remember some things and then distribute them. Clearly some caching occurs as everything is not sent every time, but as the environment is designed to be constantly changing in every way it has to be down to the server to be in charge.

Now compare this to a “level” created in Unity3d. Typically you build all the assets into the Unity3d file that is delivered to the client, i.e. it's a stand alone, fully interactive environment. That may be space invaders, car racing, an FPS shooter or an island to walk around.

Each person has their own self contained highly rich and interactive environment, such as this example. That is the base of what Unity3d does. It understands physics, ragdoll animations, lighting, directional audio etc. All the elements that make an engaging experience with interactive objects and good graphic design and sound design.

Now as Unity3d is a container for programming, it is able to use network connectivity to talk to other things. Generally this is brokered by a type of server. Something has to know that 2, 3 or many clients are in some way related.

The simplest example is the Smartfox server multiplayer island demo.

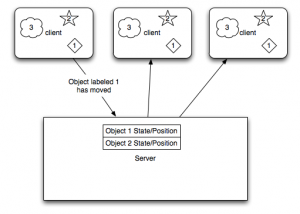

Smartfox is a state server. It remembers things, and knows how to tell other things connected to it that those things have changed. That does not mean it will just know about everything in a Unity3d scene. It is down to developers and designers to determine what information should be shared.

In the case above a set of unity clients all have objects numbered 1, 2 and 3 in them. It may be a ball, a person and a flock of birds in that order.

When the first client moves object number 1, Smartfox, running on your own remote web server somewhere in the ether, is just told some basic information about the state of that ball: it's not here, now it's here. Each of the other Unity clients is connected to the same context, so they are told by the server to find object number 1 and move it to the new position. Now in gaming terms each of those clients might be a completely different view of the shared system. The first 2 might be a first person view; the third might be a 2d top down map view which has no 3d element to it at all. All they know is that the object they consider to be object number 1 has moved.

In addition object number 3 in this example never shares any changes with the other clients. The server does not know anything about it and in the unity3d client it claims no network resources.
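The flow described above can be sketched in a few lines of plain JavaScript (runnable in Node, not Unity script). This is only an illustration of the idea under my own assumptions, not Smartfox's API: `StateServer`, `Client`, `moveObject` and `onUpdate` are hypothetical names. Objects 1 and 2 are shared through the server, while object 3 (the flock) lives only in each client.

```javascript
// Hypothetical, in-memory stand-in for a state server. Not the Smartfox API.
class StateServer {
  constructor() {
    this.clients = [];
    this.state = new Map(); // objectId -> last known position
  }
  join(client) {
    this.clients.push(client);
  }
  // A client reports a change; the server remembers it and tells everyone else.
  update(sender, objectId, position) {
    this.state.set(objectId, position);
    for (const client of this.clients) {
      if (client !== sender) client.onUpdate(objectId, position);
    }
  }
}

class Client {
  constructor(name, server) {
    this.name = name;
    this.server = server;
    // Objects 1 and 2 are shared; object 3 (the flock) is purely local,
    // so it starts somewhere different in every client.
    this.objects = {
      1: { x: 0, y: 0 },
      2: { x: 0, y: 0 },
      3: { x: Math.random(), y: Math.random() },
    };
    this.shared = new Set([1, 2]);
    server.join(this);
  }
  moveObject(objectId, position) {
    this.objects[objectId] = position;
    // Only shared objects cost any network traffic.
    if (this.shared.has(objectId)) {
      this.server.update(this, objectId, position);
    }
  }
  onUpdate(objectId, position) {
    this.objects[objectId] = position;
  }
}

const server = new StateServer();
const firstPerson = new Client("first person view", server);
const mapView = new Client("2d map view", server);

firstPerson.moveObject(1, { x: 5, y: 2 }); // shared: relayed via the server
firstPerson.moveObject(3, { x: 9, y: 9 }); // local: never leaves this client

console.log(mapView.objects[1].x, mapView.objects[1].y); // 5 2
console.log(server.state.has(3)); // false
```

How each client draws object 1 is entirely its own business, which is why a 3d view and a 2d map can share the same server.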

This sort of game object is one that is about atmosphere, or one that has no real need to waste network sending changes around. In the island example from Unity3d this is a flock of seagulls on the island. They are a highly animated, highly dynamic flock of birds, with sound, yet technically in each client they are not totally the same.

(Now SL and Opensim use this principle for things such as particles and clouds, but that is designed in.)

For each user they merely see and hear seagulls, they have a degree of shared experience.

Games constantly have to balance the lag and data requirements of sending all this information around versus things that add to the experience. If multiplayer users need to have a common point of reference and it needs to be precise then it needs to be shared, e.g. in a racing game, the track does not change for each person. However, debris and the position of other cars do.

In dealing with a constantly changing environment unity3d is able to be told to dynamically load new scenes and new objects in that scene, but you have to design and decide what to do. Typically things are in the scene but hidden or generated procedurally. i.e. the flock of seagulls copies the seagull object and puts it in the flock.

One of the ways of dealing with the network lag in shuffling all this information around is interpolation. Again in a car example: typically, if a car is travelling north at 100 mph and the client does not hear anything about the car's position for a few milliseconds, it can guess where the car should be.
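A minimal sketch of that guess, again in plain JavaScript rather than Unity script. Strictly speaking, projecting forward from the last known position and velocity is extrapolation (often called dead reckoning). The function name `predictPosition`, the shape of the update object and the units (metres, metres per second, milliseconds) are all my own assumptions for illustration.

```javascript
// Guess a position from the last update, assuming constant velocity.
// Assumed units: metres, metres per second, milliseconds.
function predictPosition(lastUpdate, nowMs) {
  const elapsedSeconds = (nowMs - lastUpdate.timeMs) / 1000;
  return {
    x: lastUpdate.position.x + lastUpdate.velocity.x * elapsedSeconds,
    y: lastUpdate.position.y + lastUpdate.velocity.y * elapsedSeconds,
  };
}

const MPH_TO_METRES_PER_SECOND = 0.44704;

// Last thing we heard: the car was at the origin, heading north (+y) at 100 mph.
const lastUpdate = {
  timeMs: 0,
  position: { x: 0, y: 0 },
  velocity: { x: 0, y: 100 * MPH_TO_METRES_PER_SECOND },
};

// 50 ms later nothing new has arrived, so we guess.
const guess = predictPosition(lastUpdate, 50);
console.log(guess); // roughly 2.2 metres further north
```

When the next real update does arrive, the client has to reconcile its guess with the reported position, which is where smoothing between the two comes in.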

Very often virtual worlds people will approach a game client expecting a game engine to be the actual server, packaged. Likewise, game focused people will approach virtual worlds as a client, not a server.

Now as I said this is not black and white, but opensim and secondlife and the other virtual world runnable services and toolkits are a certain collection of middleware to perform a defined task. Unity3d is a games development client that with the right programmers and designers can make anything, including a virtual world.

*Update (I meant to link this in the post but hit send too early! Thanks Jeroen Frans for telling me 🙂)

Rezzable have been working on a unity3d client with opensim, specifically trying to extract the prims from opensim and create unity meshes.

Unity3d and voice is another question. Even in SL and Opensim voice is yet another server; it just so happens that who is in the voice chat with you is brokered by the main server. Hence when comparing to Unity3d again, you need a voice server, and you need to programmatically hook in what you want to do with voice.

As I have said before though, and as is already happening to some degree, some developers are managing to blend things such as the persistence of the Opensim server with a Unity3d client.

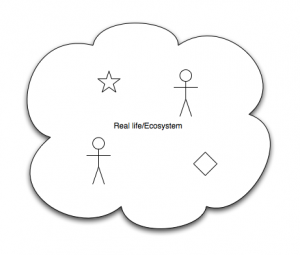

Finally, in the virtual world context, in trying to compare a technology or set of technologies we actually have a third model of working. It is a moderately philosophical point, but trying to use virtual worlds to create mirror worlds at any level will suffer from the model we are basing it on, namely the world. The world is not really a server and we are not really clients. We are all in the same ecosystem; what happens for one happens for all.

Getting caught out by c# in Unity3d and Smartfox

Warning: a moderately techie post with no pictures.

I have been a programmer since I was 14 and I have used an awful lot of programming languages and danced around many a syntax error. When you spend all day every day in a particular environment the basics tend to just flow; when you chop and change from code, to architecting, to explaining and sharing with people, sometimes the context switch gets more tricky. Today's tech world is also full of many flavours of component that we wrangle and combine. There were two things in particular that were causing me hassle and that I needed to look up more than once, so I figured if I am having the problem then so will someone else 🙂

Up to now most of the things I have done in Unity3d with various experiments use the basic examples and provided scripts or variations on them. These are all in Javascript, or the .js derivative that Unity uses.

However, now that I am talking to the Smartfox server pieces it’s become more useful to do .cs (C#).

The first is calling a function in a script on another object in the scene.

In .js it's straightforward:

    GameObject.Find("RelevantObject").GetComponent("AScript").DoWork("passingvalue");

Where there is a .js file called AScript, with a function DoWork in it accepting a string, attached to the object “RelevantObject”.

It is just regular dot notation method access.

(I know that it is not efficient to go and find the objects and components every time, but it is easier to illustrate the point in a single line.)

In .cs it's nearly the same but….

    BScript someScript = GameObject.Find("RelevantObject").GetComponent<BScript>();
    someScript.DoWork("passingvalue");

Where there is a BScript.cs file attached to “RelevantObject”, but being C# it is not just the file name but a proper class definition.

So going from the quick “let's just get this done” of .js you have to be a bit more rigorous, though it all makes sense 🙂

    using UnityEngine;
    using System.Collections;

    public class BScript : MonoBehaviour {

        // Use this for initialization
        void Start () {
        }

        // Update is called once per frame
        void Update () {
        }

        public void DoWork(string sval) {
            // do something with sval
        }
    }

The other problem I had was more related to using Smartfox server.

I was attempting to set Room Variables. These are server side containers of information that, when they change, will generate an event to clients that have registered an interest, i.e. very handy to preserve the state of something and send it to multiple clients. The main Smartfox Unity Island multiplayer demo uses more direct messages to send position transforms for players.
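To make the idea concrete, here is a small plain JavaScript sketch (runnable in Node) of named variables that notify registered listeners when they change. It is emphatically not the Smartfox API: the `Room` class, `onVariablesChanged` and `setVariables` are hypothetical names I made up to show the pattern of "store the value, then tell interested clients which names changed".

```javascript
// Hypothetical sketch of the room-variable idea. Not the Smartfox API.
class Room {
  constructor() {
    this.variables = {}; // server-side state for this room
    this.listeners = []; // clients that registered an interest
  }
  onVariablesChanged(listener) {
    this.listeners.push(listener);
  }
  // Store the new values, then tell every listener which names changed.
  setVariables(changes) {
    const changedNames = Object.keys(changes).filter(
      (name) => this.variables[name] !== changes[name]
    );
    Object.assign(this.variables, changes);
    if (changedNames.length > 0) {
      for (const listener of this.listeners) listener(changedNames, this);
    }
  }
}

const room = new Room();
const seen = [];

// A "client" that reacts to a change event by reading the current values.
room.onVariablesChanged((changedNames, changedRoom) => {
  for (const name of changedNames) {
    seen.push(name + "=" + changedRoom.variables[name]);
  }
});

room.setVariables({ doorOpen: true, score: 3 });
room.setVariables({ score: 3 }); // nothing changed, so no event
room.setVariables({ score: 4 });

console.log(seen.join(", ")); // doorOpen=true, score=3, score=4
```

The event only carries the names of what changed; the client then goes back to the room to read the values, which is the step that was throwing exceptions for me.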

It worked setting the variables, and I could see them in the Admin client. I also got the change message and the name of the variable(s) that had altered, but I kept getting exceptions thrown in the getting of the variables.

After a lot of hacking around it seemed that

    room.GetVariable("myname"); //doesn't work
    room.GetVariables()["myname"]; //does work

i.e. Get the whole hashtable with GetVariables and reference it directly versus getting the GetVariable function to do that indexing for you.

Now this may just be a wrong version of an imported DLL somewhere. I have not built the entire Smartfox Unity3d plugin again. It does work, so this may be a workaround for anyone who is having problems with Room Variables. It works enough for me not to worry about it anymore and get on with what I needed to do.

Other than that it's all gone very smoothly. Smartfox Pro (demo) does what I need and then lots more. I have had conversations and meetings with people in my own Amazon cloud based instantiations of Unity powered clients. Now I am over any brain hiccups of syntax again, it's onwards and upwards.

Look just how simple things can be. Unity3d accessing the web

I have been doing a lot of Unity3d with data flying around, but I am still amazed at just how easy it is to get things to work.

For the non-techies out there this should still make sense, as it's all drag and drop and a little bit of text.

So you need a web based walk around some of your flickr pictures?

Unity3d makes it very easy to say “create scene”, “add terrain”, “add light”, “add first person walker” (the thing that lets you move around) and finally to say “create cube”.

It is as simple as any other package to create things, just like adding a graph in a spreadsheet, or rezzing cubes in Second Life.

Once the scene is created it is very easy to add a behaviour to an object. In this case this script is typed in and dragged and dropped onto each cube in the scene. (It's in the help text too.)

    var url = "http://farm3.static.flickr.com/2656/4250994754_6b071014d4_s.jpg";

    function Start () {
        // Start a download of the given URL
        var www : WWW = new WWW (url);
        // Wait for download to complete
        yield www;
        // assign texture
        renderer.material.mainTexture = www.texture;
    }

Basically you tell it a URL of an image, 3 lines of code later that image is on the surface of your object running live.

The other thing that is easy to do is drag a predefined behaviour of “DragRigidBody” onto the cube(s). Then you get the ability to move the cubes when they are running by holding onto them with the mouse and left click.

Now the other clever piece (for the techies and programmers amongst us) is that you can create an object or collection of objects and bring them out of the scene to create a “prefab”. This prefab means that you can create lots of live versions of an object in the scene. However, if you need to change them, add a new behaviour etc., you simply change the root prefab and the changes are inherited by all the instances of that prefab in the scene. You are also able to override settings on each instance.

So I have a cube prefab, with the “Go get an image” script on it.

I drag a few of those into the scene and for each one I can individually set the URL they are going to use. All good Object Orientated stuff.
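For the programmers, the prefab idea maps quite neatly onto prototype inheritance. The sketch below is plain JavaScript (runnable in Node) and is only an analogy, not how Unity implements prefabs: `cubePrefab`, `instantiate` and the example.com URLs are hypothetical names and placeholders of my own.

```javascript
// The "prefab": shared defaults that every instance falls back to.
const cubePrefab = {
  url: "http://example.com/default.jpg", // placeholder URL, an assumption
  draggable: false,
};

// Each instance inherits from the prefab and can override individual settings.
function instantiate(prefab, overrides) {
  return Object.assign(Object.create(prefab), overrides);
}

const cubeA = instantiate(cubePrefab, { url: "http://example.com/a.jpg" });
const cubeB = instantiate(cubePrefab, { url: "http://example.com/b.jpg" });

// Change the root prefab and every instance picks up the new behaviour...
cubePrefab.draggable = true;
console.log(cubeA.draggable, cubeB.draggable); // true true

// ...while the per-instance overrides are kept.
console.log(cubeA.url); // http://example.com/a.jpg
```

The instance only stores what it overrides, so anything it has not touched keeps following the root prefab.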

This is not supposed to be a state of the art game :), but you can see how the drag and drop works, and move around using live images I drag in from my Flickr, in this sample.

Click in the view and W and S move forwards and backwards; left click and hold the mouse over a block to move it around.

Downward gravity is reduced (just a number) and of course the URLs could be entered live and change on the fly. I only used the square thumbnail URLs from my Flickr photos so they are not hi quality 🙂

This is also not a multiuser example (busy doing lots of that with Smartfox server at the moment) but it is just so accessible to be able to make and publish ideas.

The code is less complicated than the HTML source of this page, I would suggest. It's also free to do!