One of the greatest things about the web is that whilst anyone can share anything, including things that are truly rubbish, they can also share things that a niche audience will appreciate and love. One such thing is this very clever, but very geek related, parody of the Katy Perry/Snoop California song.

You will see if you click through to YouTube that Skyway Flyer has put up the transcript of the lyrics too, in case anyone who is not so geeky has to look up Flux Capacitor.

I bumped into this one though on Buzzfeed. Yes another service to help us thread and aggregate interesting things.

Buzzfeed (thanks to Rita King and Jerry Paffendorf for pointing me at it) lets you rate and badge content from elsewhere and then spread the word.

I have been dabbling with a few things on there as epredator

It is sort of categorized into LOL and GEEKY etc. For these geek parodies though I suspect they will fill up the feed very quickly 🙂 If they are as good as this one and the previous New Dork one then I am happy.

Nerdcore as a music form seems to be ever on the increase: creative people who are also tech geeks taking a tongue in cheek look at life and sharing it over the very medium they are spoofing.

Maybe I need to give it a go too. I have a smattering of musicality (though only a smattering), lots of kit and a world audience in which to find a few people who might like it. If nothing else the ballad of the metaverse may be a better way to share a story than an old school book. Though…. that may have to be a geek opera rather than a YouTube short 🙂

Wimbledon Time again – Google subtlety

Yes, it's that time of year again. The Wimbledon Tennis Championships is about to kick off and, as I have written before (was it really a year since I wrote this!), for me and anyone who has had the pleasure and stress of working the event it's a special time. I think the allegiance to it as an institution and to the team of people never goes away. In our life equivalent of gamer achievements we probably have some very unusual badges to share.

All this means I am still interested in how people attach themselves to a significant event. There are a lot of people jockeying for digital rights and “official” sponsorship. However some of it is just publicly owned association to the event, i.e. support.

I was looking at Google Street View the other day and noticed a change I had not seen before. The little drag and drop man around Wimbledon has changed to a tennis player. Even more cute/clever, when you drag him around he changes into a tennis serve gesture.

So well done Google. I wonder if this is some sort of rabbit hole for a Google/Wimbledon Alternate Reality Game? 🙂

Anyway good luck to all my friends at Wimbledon from the old firm and associated groups. You do a cracking job.

The official site is here. Remember, there is no t in the word Wimbledon, my US friends 🙂

Natal to Kinect, TV to 3DTv, E3, ergonomics

With the E3 conference in full swing bringing us heaps of great game announcements, it is interesting to see different rapidly emerging technologies start to combine and cause interesting opportunities and problems.

Project Natal from Microsoft has been renamed to Kinect. It's a soon to arrive add on to the 360 that does some very clever things to detect people and their body movements. It is way past the WiiMote, which Sony have gone closer to with their magic wand Move device (a glowing ball on a stick).

All the Kinect demos and release film showed variations of gameplay that require your entire body to be the controller for the game. In exercise, sports and dance games this makes a great deal of difference. Knowing where limbs are, rather than relying on dance mats, has great scope. Though it does lead to an ergonomic problem of needing space around you in your living room/games room.

In driving games such as Forza3 they showed how you sit with your arms outstretched holding an imaginary wheel. This looks like it will be painful, I suppose there is nothing to stop us holding on to some sort of device as I suspect prolonged play will get tricky. What is great is the head tracking though. Being able to look into a corner and the view changes.

There is also a leap and drive towards 3D. The TVs are starting to appear in stores, and Sky is broadcasting the world cup in 3D too. This again is interesting because of our need to wear overlay spectacles (in most cases at the moment) in order to experience the effect. Games are starting to be “3d enabled” or be built to take advantage of the 3D TV. So we have a slight crossover here. Kinect relieves us of our controls, free to move about, but the TV is reinforcing the need to sit in the right place and wear a device in order to experience it correctly.

So what happens when the large, comfortable, easy fit glasses of passively watched 3d TV meet an energetic, bounce around, body controlled Kinect game on the 360?

I am sure we will end up with a range of sports glasses and attachments to help play the various games, but it is something to think about.

I am really looking forward to the blend of 3d visuals with gesture controls and the creative process of building in virtual worlds, with the addition of being able to print out and create through 3d printers new peripherals to hold onto and enhance the experiences.

Exciting times!

Lego AR in the wild

It was fitting that on the last day of the holiday, wandering around Disney Downtown, we ended up in the Lego Innovations store. For some reason I nearly missed seeing the Augmented Reality kiosk that lets you see what's inside your Lego box.

I have seen this in videos before, but it was good to have a go with what is an AR Magic Mirror in a commercial environment.

Certain Lego boxes are available near the kiosk and you just hold one up and away it goes. It animates the contents of the box.

There were a few people who did not notice it either; once I started using it a queue formed 🙂 Dads were busy videoing it and kids were busy going wow look at that.

Maybe this bodes well for the next gen tech they will start using in the theme parks, as we saw lots of 3d (with glasses of course) and a location based service using a mobile for a treasure hunt.

Epcot did have this

An energy sweeping game. It used what looked like a metal detector or round shuffle board stick to move virtual energy “pucks” that had to be croupier pushed to various stations. It was by Siemens, and was OK but a bit laggy.

All change, all the same

There is one thing about the virtual world industry. Every day brings something new, some new piece of tech, of content, of gossip or direction. None of which is really ever related to an end or a death of it.

Companies in all industries (including growing ones) come and go. Companies grow and shrink and restructure all the time.

I do feel for all the people hit as part of the Linden restructuring. Whether that is a knee jerk need due to cashflow or simply a focussing effort in a company that expanded wildly does not matter. Those going will be entering a market that is growing; new platforms and businesses are emerging. Those staying can focus and keep Second Life on track.

Being in the tech industry it is clear that more than ever it is possible to work and grow without being part of a larger company. There are lots of opportunities out there.

Linden has been restructuring for some time as we have all noticed. It is because of the direct connection many people/residents/users of Second Life have with the entire company that this sort of move becomes so visible. In most other firms a reshuffle or closure makes little or no difference to any customers. It's just another faceless job swap, with both survivor guilt for those remaining and mixed emotions for those sent out into the world once more.

Many companies should learn from LL’s ability to let everyone interact at all levels, equally it should make sure that it does not lose that spirit as it becomes more “regular” in its approach as business.

It is not the end of anything, but a next chapter and new beginning. (Though I am sure the press will write otherwise).

If you are an ex-Linden and have some downtime/gardening leave/breathing space, Opensim, Unity3d, vastpark and a few others are worth looking at.

If I can help anyone I will, there are lots of interesting projects.

Photo Realism, Augmented Reality blend away

Lots of things have crossed my mind here on holiday around the Florida Theme parks.

The first most relevant was at the Monsters Inc laughter show at the Magic Kingdom. This at first glance looks like a standard film show, file into a theatre and get thrilled, wet or blown at in various ways. Instead though it turns out to be a live comedy show, but featuring computer animated characters. The characters appear on stage and talk to the audience. The animation and puppetry is great, and fast thinking comedians working the audience make you forget the animated characters and they become very real.

The characters are still cartoon like, but they are powered by real people, though they are not people who we actually know as they are playing a character. This works so well. We often worry about identity and photo realism but both are basically trumped by good narrative and human like qualities in the interaction. The audience and performer bond becomes the important part. The tech helps make it feel different.

***Update today at Epcot we saw another live digital puppet example with Crush the talking turtle from Finding Nemo. Another very impressive and expressive avatar.

This also applied to the Playhouse Disney show, which used rod controlled puppets of Mickey, Handy Manny, Little Einsteins and Tigger. Puppets are obviously physical beings but given energy by human movement and expression.

I also just saw this video of the AR magician Marco Tempest doing a projection demonstration.

In this the character he creates is only a stick man, yet with movement and expression and no talking he manages to create a magical show.

The point of all this, and of all the attractions here at Disney and Universal, is to reach people, to tell stories, to spark imagination. Atmosphere can be created with the simplest and easiest of techniques.

I wrote some about the Simpsons ride back in 2008 over on eightbar. Pasted below for completeness.

“The Simpsons ride really takes a whole load of ingredients to fool and entertain the brain. We often say in virtual world circles that nothing beats real life. The Simpsons (for those who have not followed such things) take the TV program into a giant domed screen, but pairs a crazy cgi rendered experience with a whole load of physical tools. The prime one is the hydraulic cockpit. These seem to be able to generate a whole load of unusual movements that fool the brain. That is why of course they are used in flight sims. The Simpsons has an open carriage, which allows for a greater immersion, and for things like dry ice to be thrown into the mix.

You are also experiencing this with other people. Only a few in a car at a time to give the feeling you are a family on with the Simpsons, plus technically its harder to throw lots of people around in one car. They do of course have more than one running on the giant screen but you attention cannot see those.

At the dawn of cinema people were only able to experience films in a purpose built facility, as time has gone on we have added more sensory elements to home installations. Vibrating joypads on consoles etc. By combining what we currently have for 3d immersion and adding some extra layers of the physical world whilst we may not be able to do the justice to the Simpsons ride we should be able to immerse entertain and inform people in an even richer fashion. We may even be able to locally manufacture some of the physical elements needed for an “experience” using 3d printers? It still feels we are chained to these laptop screens and qwerty keyboards…. I guess thats more for tomorrows visionaries panel.

BTW my favourite Homer line from the ride “Doh I hate chain reactions”

A clever mix of tech, good story and of surprising the human brain seems to be the way to reach us as people. Not just one huge photo realistic identity verified online experience.

Surrealism, Immersion and the Metarati

Yesterday was the Immersion 2010 conference at the very central London BIS conference centre. It was a packed room for the entire day. There was a huge range of topics covered, and it was particularly interesting as there was clearly a blend of the non gaming virtual world metarati (the usual crowd of us) and game related highly influential academia (Prof Richard Bartle), TV and media Simon Nelson (from the BBC) and Ian Livingstone Eidos/Square Enix (Gaming royalty!) as well as investors and angels. We often don’t have such a mix. The audience were from all over the place too, from startups to civil service.

The discussion between Ian Livingstone and Simon Nelson on free vs AAA paid for content was intriguing to see played out. Also Richard Bartle’s views on the sort of education needed for the games industry were good to hear: a drive is needed to do quality education, not just games as media studies to attract paying students.

I was on a panel with David Burden (Daden) and Ron Edwards (Ambient), chaired by Mike Dicks (BleedinEdge). Yes, we had to have two similar company names, great minds 😉

We talked about the future technology. The room had already had a dip into virtual worlds from the other metarati there with Dave Taylor (Imperial College), Justin Bovington (Rivers Run Red), Michael Schumann (Second Interest) and moderated by Rob Edmonds (SRI).

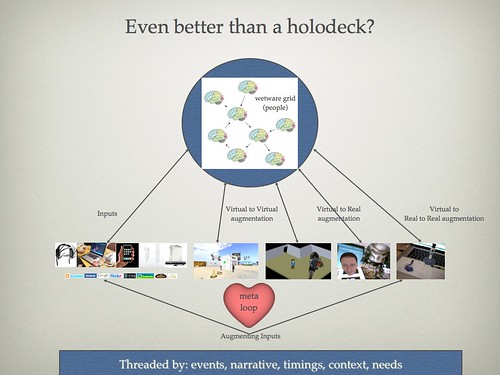

This meant that when I went last in the little 10 min slots we had I decided to push things a little further forward with the ideas I talked about at metameets in Dublin. Boiling down to this picture.

It shows the entire eco system that is really our real and virtual worlds all merged into a collective experience. The point still stands that we should not just replicate the world (though that is part of the equation). We have a whole host of ways to connect and feed one another ideas to show our thoughts and experience them.

I paraphrased Dali, the great surrealist. He stated he wanted to create a dream that was also a living room. A concept that resonates with how I want us to be able to express our ideas and bring understanding to one another powered by our ability to collect and share what we think on the web, to augment wherever we are with more of those ideas. Not restricting ourselves to one layer of AR on top of one physical world. This is a multilayer experience that changes as we need it to. It also encompasses the ability to physically augment our world not just with pixels but with atoms from 3d printers and alike. It is not about a single device or web vs mobile vs games vs outside vs inside. It is everything.

We have already started on this journey, and bearing this picture in your mind of people being truly connected in ways that suit them sits nicely on top of all that is already there on the web and in games and virtual worlds and existing communication technology.

With that post I will head off to a “real” “virtual world” and a family holiday to Disney and Universal. (You see, once you get over making a differentiation and realise it's all real, life gets a lot simpler 🙂)

3d Printing Good enough for Ironman2

There are some great articles buzzing around about the use of the Objet 3d printers in the production of IronMan2 special effects.

Check out the video description here. Also pay attention to the length of time they have been using it and the fact that this is what the “legacy effects department” does 🙂

More is here

Basically they printed some of the film props and armour based on a quick scan of Robert Downey Jr.

Remember that at the moment this is about CAD models and the specifics of their construction, but we move lots of 3d content around to one another in virtual worlds every day. Simply combine the 2.

HP are moving into 3d printing, and the Objet Alaris 30 is a desktop office printer.

Opensim/Second Life Vs Unity3d

I actually get asked a lot about how Unity3d stacks up against Opensim/Second Life. This question is usually based on wanting to use a virtual world metaphor to replicate what Opensim/Second Life do but with a visually very rich browser based client such as Unity3d.

There is an immediate clash of ideas here though, and it needs a degree of understanding that Unity3d is not comparable in the usual sense with SecondLife/OpenSim.

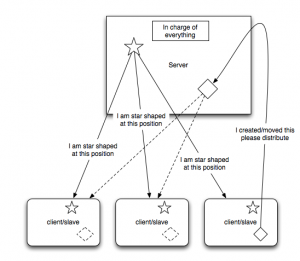

At its very heart you really have to consider Opensim and Second Life as being about being a server that happens to have a client to look at it. Unity3d is primarily a client that can talk to other things such as servers, but it really does not have to in order to be what it needs to be.

Now this is not a 100% black and white description but it is worth taking these perspectives to understand what you might want to do with either type of platform.

Everything from an Opensim style server is sent to all the clients that need to know. The shapes, the textures, the position of people etc. When you create things in SL you are really telling a server to remember some things and then distribute them. Clearly some caching occurs as everything is not sent every time, but as the environment is designed to be constantly changing in every way it has to be down to the server to be in charge.

Now compare this to a “level” created in Unity3d. Typically you build all the assets into the unity3d file that is delivered to the client, i.e. it's a stand alone fully interactive environment. That may be space invaders, car racing, an FPS or an island to walk around.

Each person has their own self contained highly rich and interactive environment, such as this example. That is the base of what Unity3d does. It understands physics, ragdoll animations, lighting, directional audio etc. All the elements that make an engaging experience with interactive objects and good graphic design and sound design.

Now as unity3d is a container for programming it is able to use network connectivity to talk to other things. Generally this is brokered by a type of server. Something has to know that 2, 3 or many clients are in some way related.

The simplest example is the Smartfox server multiplayer island demo.

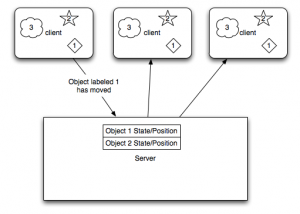

Smartfox is a state server. It remembers things, and knows how to tell other things connected to it that those things have changed. That does not mean it will just know about everything in a unity3d scene. It is down to developers and designers to determine what information should be shared.

In the case above a set of unity clients all have objects numbered 1, 2 and 3 in them. It may be a ball, a person and a flock of birds in that order.

When the first client moves object number 1, smartfox, on your own remote web server somewhere in the ether, is just told some basic information about the state of that ball: it's not here now, it's here. Each of the other unity clients is connected to the same context. Hence they are told by the server to find object number 1 and move it to the new position. Now in gaming terms each of those clients might be a completely different view of the shared system. The first 2 might be a first person view, the third might be a 2d top down map view which has no 3d element to it at all. All they know is the object they consider to be object number 1 has moved.

In addition object number 3 in this example never shares any changes with the other clients. The server does not know anything about it and in the unity3d client it claims no network resources.
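The numbered object example above can be sketched in a few lines of Python. This is only an illustration of the state server idea and not Smartfox's actual API: the `StateServer` and `Client` classes, the `move` and `report_move` methods and the `SHARED` set are all made up for this sketch. Objects 1 and 2 are shared, object 3 (the birds) stays local and never touches the network.

```python
from __future__ import annotations

from dataclasses import dataclass, field

Position = tuple[float, float, float]


class StateServer:
    """Hypothetical state server: remembers shared state and relays changes."""

    def __init__(self) -> None:
        self.state: dict[int, Position] = {}
        self.clients: list[Client] = []

    def connect(self, client: Client) -> None:
        self.clients.append(client)

    def report_move(self, sender: Client, object_id: int, position: Position) -> None:
        # Remember the new state, then tell everyone except the sender.
        self.state[object_id] = position
        for client in self.clients:
            if client is not sender:
                client.on_state_change(object_id, position)


@dataclass
class Client:
    """Stand-in for one unity3d client; how it draws things is its own business."""

    name: str
    server: StateServer
    shared_ids: frozenset[int]
    objects: dict[int, Position] = field(default_factory=dict)

    def move(self, object_id: int, position: Position) -> None:
        self.objects[object_id] = position
        # Only objects the designers chose to share cost any network traffic.
        if object_id in self.shared_ids:
            self.server.report_move(self, object_id, position)

    def on_state_change(self, object_id: int, position: Position) -> None:
        # "Find object number N and move it to the new position."
        self.objects[object_id] = position


SHARED = frozenset({1, 2})  # ball and person; object 3 (the birds) is local only
server = StateServer()
first, second, top_down = (
    Client(name, server, SHARED)
    for name in ("first person A", "first person B", "top down map")
)
for client in (first, second, top_down):
    server.connect(client)

first.move(1, (5.0, 0.0, 2.0))  # the ball: relayed to the other two clients
first.move(3, (9.0, 4.0, 9.0))  # the birds: the server never hears about this
```

After this runs, the second and map clients both hold the new position for object 1, while object 3 exists only in the first client's own scene.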

This sort of game object is one that is about atmosphere, or one that has no real need to waste network sending changes around. In the island example from unity3d this is a flock of seagulls on the island. They are a highly animated, highly dynamic flock of birds, with sound, yet technically in each client they are not totally the same.

(Now SL and Opensim use this principle for things such as particles and clouds, but that is designed in.)

For each user they merely see and hear seagulls, they have a degree of shared experience.

Games constantly have to balance the lag and data requirements of sending all this information around versus things that add to the experience. If multiplayer users need to have a common point of reference and it needs to be precise then it needs to be shared. e.g. in a racing game, the track does not change for each person. However debris and the position of other cars does.

In dealing with a constantly changing environment unity3d is able to be told to dynamically load new scenes and new objects in that scene, but you have to design and decide what to do. Typically things are in the scene but hidden or generated procedurally. i.e. the flock of seagulls copies the seagull object and puts it in the flock.
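As a rough illustration of the "copy the seagull and put it in the flock" idea, here is a small Python sketch. It is not Unity3d code. The `Seagull` class, the `spawn_flock` function and the seed values are invented for the example, and the seeds simply stand in for each client doing its own thing.

```python
import copy
import random
from dataclasses import dataclass


@dataclass
class Seagull:
    """Hypothetical prototype object that sits in the scene waiting to be copied."""

    x: float = 0.0
    y: float = 10.0
    z: float = 0.0
    sound: str = "squawk"


def spawn_flock(prototype: Seagull, count: int, spread: float,
                rng: random.Random) -> list[Seagull]:
    """Copy the prototype `count` times, scattering each copy around it."""
    flock = []
    for _ in range(count):
        bird = copy.copy(prototype)  # the prototype itself is left untouched
        bird.x += rng.uniform(-spread, spread)
        bird.z += rng.uniform(-spread, spread)
        flock.append(bird)
    return flock


prototype = Seagull()
# Each client builds its own flock locally, so no two are quite the same.
flock_on_client_a = spawn_flock(prototype, count=12, spread=5.0, rng=random.Random(1))
flock_on_client_b = spawn_flock(prototype, count=12, spread=5.0, rng=random.Random(2))
```

Both clients end up with twelve seagulls at the same height making the same noise, but the individual birds are in different places, and nothing was sent over the network to get there.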

One of the elements of dealing with the network lag in shuffling all this information around is interpolation. Again in a car example, typically if a car is travelling north at 100 mph, then if the client does not hear anything about the car position for a few milliseconds it can guess where the car should be.
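The car guess can be written down as a tiny Python sketch. Strictly speaking, guessing forward from the last known position and speed is usually called dead reckoning (extrapolation), and that is what is shown here. The `CarUpdate` class, the `estimate_north` function and the numbers are all assumptions for illustration, and a real game would also blend smoothly back to the true position when the next update arrives.

```python
from dataclasses import dataclass

MPH_TO_METRES_PER_SECOND = 0.44704


@dataclass
class CarUpdate:
    """Last thing the client heard about the car (hypothetical message shape)."""

    timestamp: float    # seconds on the shared game clock
    north: float        # metres north of some origin
    speed_north: float  # metres per second, heading north


def estimate_north(last: CarUpdate, now: float) -> float:
    """Guess the current position: last known position plus speed times elapsed time."""
    elapsed = now - last.timestamp
    return last.north + last.speed_north * elapsed


last_update = CarUpdate(
    timestamp=10.0,
    north=250.0,
    speed_north=100 * MPH_TO_METRES_PER_SECOND,  # 100 mph is about 44.7 m/s
)

# Nothing has been heard for 50 milliseconds, so the client guesses.
guess = estimate_north(last_update, now=10.05)
```

At 100 mph the car covers a little over 2 metres in those 50 milliseconds, so the guess lands at roughly 252.2 metres north instead of leaving the car frozen at 250.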

Very often virtual worlds people will approach a game client expecting a game engine to be the actual server packaged; likewise game focused people will approach virtual worlds as a client not a server.

Now as I said this is not black and white, but opensim and secondlife and the other virtual world runnable services and toolkits are a certain collection of middleware to perform a defined task. Unity3d is a games development client that with the right programmers and designers can make anything, including a virtual world.

*Update (I meant to link this in the post but hit send too early! Thanks Jeroen Frans for telling me 🙂)

Rezzable have been working on a unity3d client with opensim, specifically trying to extract the prims from opensim and create unity meshes.

Unity3d and voice is another question. Even in SL and Opensim voice is yet another server; it just so happens that who is in the voice chat with you is brokered by the main server. Hence when comparing to unity3d again, you need a voice server, and you need to programmatically hook in what you want to do with voice.

As I have said before though, and as is already happening to some degree, some developers are managing to blend things such as the persistence of the opensim server with a unity3d client.

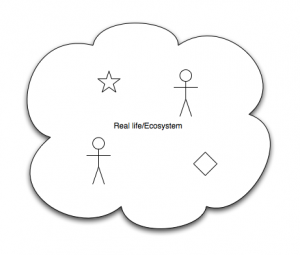

Finally in the virtual world context, in trying to compare a technology or set of technologies we actually have a third model of working. A moderately philosophical point, but trying to use virtual worlds to create mirror worlds at any level will suffer from the model we are basing it on, namely the world. The world is not really a server and we are not really clients. We are all in the same ecosystem, what happens for one happens for all.

Time to get immersed : Serious Games Conference

This coming Monday is a day of conference speaking and listening, swapping some more business cards and catching up with, yes, more metarati. It’s Martine Parry’s Immersion (formerly Apply Serious Games) on 24th May at the DTI/BIS conference center.

I will be on the panel speaking about “What are the Game-Changer Technologies? How are New Techniques Opening Opportunities for the Future? Augmented reality. 3D technologies. Intelligent Systems. Player Matching.”

So for those that missed metameets, or want to hear some of us talk about a highly likely, highly real and almost scary future, come on over 🙂

As usual if there are some things you want to ask on this subject, please comment, tweet, SL etc me.

If you see me at the event come say hi 🙂 I will of course be in my usual jacket and with my recent experimental feedingedge tshirt print.

I should also be able to see you all now, as new glasses are in full working order.

All in all should be a good day.