A quarter of a century ago, on August 13th 1990, I started my first full-time job at IBM. I had already spent a sandwich year there doing the same job, but this had more permanence about it. Indeed it was pretty permanent, as I stayed at that same company for 19 years.

The tech world was very different back then. It was much simpler to be just a programmer, or just a systems administrator, and so on.

We worked on green screen terminals like this.

They were dumb terminals: no storage, just a command line on a CRT display. Everything was run and compiled remotely on the main machines. That doesn’t seem strange now that we have the cloud as a concept, but when you had cut your teeth, as I had, on home computers it was a strange experience. We scheduled batch jobs to compile code overnight and came in the next morning to find out if we had typed the thing correctly.

We had internal messaging, in a sort of intranet email system, but it was certainly not connected to the outside world and neither were we. We did have access to some Usenet-style forums internally, and they were great sources of information and communal discovery. There were a few grumpy pedants, but it was not a massive trollfest.

As a programmer you had very little distraction or need to deal with things that were above your pay grade. The database administration and structure, for instance, was someone else’s role. Managing the storage, archiving, backup etc. were all different specialist roles.

The systems we built were huge, with lots of lines of code, but in reality we were just building stock control systems. They were mission critical though.

After writing some code, a module to meet a specific requirement, we had code inspections. Groups of more experienced people sat and read printed listings of the code, checking it for consistency and formatting before a compile job had even been run. It was also a time to dry run the code, sitting with a pen and paper working out what a piece of code did and how to make it better or catch more errors.

We wrote in PL/1, a heavily typed, non-fancy language. It changed over time, but there were not many tricks to learn: no funny pointers or reflection of variables, no pub/sub messaging or hierarchy problems. It was a great way to learn the trade.

Very rapidly though, this rigidity of operation changed. Over the next few years we moved to building client server applications, where the newly arriving PCs could do things themselves, with drag and drop interfaces like OS/2 (IBM’s version of Windows). I generally ended up on the new stuff as, well, that’s what I do!

We had an explosion of languages to choose from and to work with. OO was all the rage, with lots of Smalltalk, but we were also busy doing C and then C++. Then, before you knew it (or before I knew it, anyway), we had the web to play with. From 1997 on everything changed. It started with a bit of email access, but very soon we had projects with proper access to the full interweb.

Building and creating in this environment was almost the polar opposite of the early years of small units of work and code inspections. We all did everything, everywhere, on every platform: scripting languages, web server configs, multiple types of content management, e-commerce products, app server products, portal products. We had Java on the back end and front end, but also lots of other things to write with, rules-based languages and lots and lots of standards that were not yet standards. Browsers supported some things and not others, and corporate clients dictated one random platform over another, which we then built to.

It was a very anarchic yet incredibly interesting time.

The DotCom bubble burst happened quite naturally, and its main damage was to a lot of business models and over-eager investment. For the tech industry it actually helped slow down some of the mad expansion so we could all get to grips with what we had. Ultimately it led to people starting to connect with one another and to discover the power of the web for communication.

Of course now we are gathering momentum again, and lots of things are developing really quickly. There is the exciting buzz of things that are nearly mass market, but not quite, and of competing platforms and open source implementations all vying to be the best prerequisite for your project.

Everyone who does any of this needs to be Full Stack now. For those of us who grew up with this and survived the roller coaster it is a bit easier, I think. Though not having those simpler times to reflect on might actually be a blessing for this generation. Not thinking that they are not supposed to be messing around with the database, because that’s not their job, might be good for them.

One thing is certain: despite some nice cross-platform systems like Unity3d, the rest of the tech has not got any easier. Doing any new development involves sparking up a terminal emulator that works just like those green screens back in the 90s. Some interesting but ultimately arcane incantations to change a path variable or to install the correct prerequisite packages still flourish under the covers. If that’s where you came from, it just feels like home.

Doing these things today (installing Node.js for server-side scripting, a Maven web server, some variants of a Java SDK and then generating some security keys), it certainly felt like this is still an engineering subject. You can follow the instructions, but you do need to know why. The things we do are the same: input, process, output, with a bit of validation and security wrapped around them. There are just so many more ways to explore and express the technical solution now.

If I were me now, at 18 and entering the workforce, I wonder what I would be doing. I hope that I would have tinkered with all this stuff as much as ever, just with access to more resources and more help on the web, and probably known a lot more. I would have been a junior Full Stack developer rather than a junior programmer. Oh, and I wouldn’t have to wear a suit, shirt and tie to sit at the desk either 🙂

Pepper’s IGhost

A few days ago on Facebook I saw a post about building a visual trick that makes a smartphone look like it has a hologram floating above it (props to Ann Cudworth for sharing it). It is of course not really a hologram but a version of Pepper’s Ghost, a trick of light reflecting off an angled piece of transparent material. This allows the eye to see an object floating or looking transparent and ethereal.

The video shows how this all works and how to build one.

I did have a go with an old CD case, but I found the plastic shattered way too much, so instead I used a slightly more cuttable acetate sheet. I made a quick prototype that was a little bit wonky, but it still worked.
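For anyone wanting to cut their own, the geometry of each acetate face can be worked out in a few lines. This is a minimal Python sketch, assuming a four-sided pyramid whose faces lean at 45 degrees to the screen (so the flat image on the screen reflects as an upright one). The function name and the 1cm/6cm example edges are my own illustrative choices, not measurements from the video.

```python
import math


def trapezoid_template(small_edge_cm: float, large_edge_cm: float) -> dict:
    """Size one acetate face of a four-sided Pepper's ghost pyramid.

    Assumes every face leans at 45 degrees to the screen, so the pyramid
    rises by half the difference between the two edge lengths.
    """
    if large_edge_cm <= small_edge_cm:
        raise ValueError("large edge must be longer than the small edge")
    # At 45 degrees the horizontal step in on each side equals the vertical rise.
    pyramid_height = (large_edge_cm - small_edge_cm) / 2
    # The trapezoid you actually cut is the sloping face: sqrt(2) times the rise.
    slant_height = pyramid_height * math.sqrt(2)
    return {
        "small_edge_cm": small_edge_cm,
        "large_edge_cm": large_edge_cm,
        "pyramid_height_cm": pyramid_height,
        "slant_height_cm": round(slant_height, 2),
    }


if __name__ == "__main__":
    # Hypothetical phone-sized pyramid: cut four of these trapezoids.
    print(trapezoid_template(1.0, 6.0))
```

The small edge sits on the screen, so scaling both edges up gives a version for a bigger device such as a tablet.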

There are lots of these types of video out on YouTube.

There are some commercially available versions of these, such as the Dreamoc, which works in a similar way but with the pyramid the other way up.

There are lots of other examples where a visual trick fools our brains into thinking something is truly 3D and floating in space. It’s all done with mirrors 🙂

Some of you may remember the Time Traveller game.

This used a projection onto a bowl-shaped mirror. The same effect is also used in the visual tricks you sometimes see in gadget and joke shops, such as this one from Optigone.

There are some fascinating tricks out there. Of course Microsoft Hololens and Magic Leap will be using “near eye light fields”, which are slightly more complex arrangements than a bit of acetate on an iPhone, but we can appreciate some of the magical impact they may have by looking at these simpler optical illusions.

Our ability to do more with light, and not just deal with the flat 2D plane of a TV screen or a single photo, is definitely advancing. Recent cameras such as the Lytro, a light-field camera, treat multiple layers of light as important, just as near-eye light fields bounce light through multiple screens of colour at different angles to create their effect.

Whilst the word hologram is sometimes overused, I think that what matters is how it makes us feel as humans when we look at something. The mental leap, the trick of our brain that causes us to think something is really there, is fascinating. If we think it is, well… it is.

At the moment we are still focussed (no pun intended) on altering the images that travel into our eyes and the way the eye works with its lenses and optic nerve to the brain. It is only a matter of time before we go deeper. Straight to the brain. Already there are significant advances being made in helping those with no eyesight or restricted eyesight to have a digital view of the world directed and detected in different ways. So it may be that our true blended and augmented reality is to further augment ourselves. Quite a few ethical issues and trust issues to consider there.

Anyway, back to amazing the predlets with Pepper’s IGhost; time to build a bigger one for the iPad!

**Update:** Just after posting I made a larger one for the iPad. The predlets enjoyed watching the videos in a darkened corner.

Then maybe an interactive unity3d live version.

Return of the camp fire – Blended Reality/Mixed Reality

Back in 2006, during the previous wave of Virtual Reality (note the use of previous, not 1st), we tended to call them virtual worlds or the metaverse, and we were restricted to an online presence via a small rectangular screen attached to a keyboard. Places like Second Life became the worlds that attracted the pioneers, explorers and the curious.

Several times recently I have been having conversations, comments, tweets etc about the rise of the VR headsets Oculus Rift et al, AR phone holders like Google Cardboard, MergeVR and then the mixed reality future of Microsoft Hololens, and Google Magic Leap.

In all the Second Life interactions and subsequent environments it has always really been about people. The tech industry has a habit of focusing on the version number, or a piece of hardware though. The headsets are an example of that. There may be inherent user experiences to consider but they are more insular in their nature. The VR headset forces you to look at a screen. It provides extra inputs to save you using mouse look etc. Essentially though it is an insular hardware idea that attempts to place you into a virtual world.

All our previous virtual world exploration has been powered by social interaction with others. Social may mean educational, work meetings, entertainment or just chatting. However, it was social.

Those of us who took to this felt, as we looked at the screen, that we were engaged and part of the world. Those who did not get what we were doing only saw funny graphics on the screen. They did not make that small jump in understanding. There is nothing wrong with that, but that is essentially what we were all trying to get people to understand. A VR headset provides a direct feed to the eyes. It forces that leap into the virtual, or at least brings it a little closer, and then you are ready to engage with people. At the moment, though, all the VR is about engaging with content. It is still in showing-off mode.

We would sit around campfires in Second Life, in particular Timeless Prototype’s very clever-looking one that adds a seat/log for each person/avatar arriving. These were shared focal points of conversation: people gathering, looking at a screen in front of them but being there mentally, with the screen providing all the cues and hints to enhance that feeling. A very real human connection. Richer than a telephone call, less scary than a video conference, intimate yet stand-offish enough to meet most people’s requirements if they gave it a go.

It is not such a weird concept as many people get lost in books, films and TV shows. They may be immersed as a viewer not a participant or they may be empathising with characters. They are still looking at an oblong screen or piece of paper and engaged.

With a VR headset, as I just mentioned, that is more forced. They will understand the campfire that much quicker and see the people and the interaction. There is less abstraction to deal with.

The rise of the blended and mixed reality headsets, though, changes that dynamic completely. Instead they use the physical world, one that people already understand and are already in. The campfire comes to you, wherever you are.

This leads to a very different mental leap. You can have three people sat around the virtual campfire in their actual chairs in an actual room. The campfire is of course replaceable with any concept, piece of information or simulated training experience. Those people each have their own view of the world and of one another, but it’s added to with a new perspective: each sees the side of the campfire they would expect.

It goes further though: there is no reason for the campfire not to coexist in several physical spaces. I have my view of the campfire from my office, you from yours. We can even have a view of one another as avatars placed into our physical worlds, or not. It’s optional.

When just keeping with that physical blended model it is very simple for anyone to understand and start to believe. Sat in a space with one another sharing an experience, like a campfire is a very human very obvious one. For many that is where this will sort of end. Just run of the mill information, enhanced views, cut away engineering diagrams, point of view understanding etc.

The thing to consider, for those who already grok the VW concept, is that just as in Second Life you can move your camera around and see things from any point of view (from the other person’s point of view, from a bird’s eye view, from close in or far away), the mixed reality headsets will still allow us to do that. I could alter my view to see what you are seeing on the other side of the campfire. That is the start of something much more revolutionary to consider, whilst everyone catches up with the fact that this is all about people, not just insular experiences.

This is the real metaverse, a blend of everything, anywhere and everywhere, connecting us as people: our thoughts, dreams and ideas. There are huge possibilities to explore, and more people will explore them once that little hurdle is jumped and they understand it’s people. It’s always been about people.

BBC Micro:bit – It’s taken a while but worth it

I have always been keen that kids learn to code early on. Not to make them all software engineers but to help find those that can be, and also to help everyone get an appreciation of what goes into the devices, games and things we all totally depend on now.

Way back in the last ever Cool Stuff Collective (in 2011!) I did a round-up of all things tech coming in the future. I begged kids to hassle their teachers to learn to program.

It was looking as if the Raspberry Pi was going to be the choice for a big push into schools. It is probably even why our show was seen as too common to be allowed to show the early version. I was keen to have the Pi on but that didn’t work out. We had already done the Arduino on a previous show and that seemed to work really well.

So I was very pleased to see the BBC Micro:bit arrive on the scene last week. The Raspberry Pi is a great small computer; it is, however, quite a techie thing to get going, as you have to manage operating systems, drivers etc. (though things like the Kano make that much, much easier).

Arduinos are great for being able to plug things into, though they require some circuitry and connections on a breadboard before it starts to make sense. The programming environment allows a lot of things to be achieved, but it is a microcontroller, not a full working computer.

The new BBC micro:bit is a step on from the Arduino. It has an array of LED lights on it as its own display, but it also has Bluetooth and a collection of sensors already onboard.

I was very pleased to see the involvement of Technology Will Save Us in the design. I met, and shared the stage with, the co-founder of this maker group, Daniel Hirschman @danielhirschman, at the GOTO conference in Amsterdam a couple of years ago. He knows his stuff and is passionate about how to get kids involved in tech, so I know that this is a great step and a good piece of kit.

The plans to roll this out to every year 7 student are great, though predlet 1.0 is just moving up out of that year. So I will just have to buy one when we can.

Microsoft have a great video to describe it too.

I am sure STEMnet ambassadors in the field of computing will be interested and hopefully excited too. This is important, as it is not just code; it is physical computing, understanding sensors and what can and can’t be done. It is an Internet of Things (IoT) device and it is powered by a web-based programming language. It is definitely of the moment and the right time for it. Oh, and it’s not just an “app” 🙂

Emotiv Insight – Be the borg

Many years ago a few of us had early access, or nearly early access, to some interesting brain wave reading equipment from Emotiv. This was back in 2005. I had a unit arrive at the office, but then it got locked up in a cupboard until the company lawyers said it was OK to use. It was still there when I left, but it did make its way out of the cupboard by 2010. It even helped some old colleagues get a bit of TV action.

It was not such a problem with my US colleagues who at least got to try using it.

I got to experience and show similar brain controlling toys on the TV with the NeuroSky mindflex gym. This allows the player to try to relax or concentrate to control air fans that blow a small foam ball around a course.

So in 2013, when Emotiv announced a Kickstarter for the new headsets, in this case the Insight, I signed right up.

We (in one of our many virtual world jam sessions) had talked about interesting uses of the device to detect user emotions in virtual worlds. One such use: if your avatar, and how you chose to dress or decorate yourself, caused discomfort or shock to another person wearing a headset, their own display of you could be tailored to make them happy. It’s a modern take on The Emperor’s New Clothes, but one that can work. People see what they need to see. It could have worked at Wimbledon this year when Lewis Hamilton turned up to the royal box without a jacket and tie. In a virtual environment that “shock” would mean any viewers of his avatar would indeed see a jacket and tie.

It has taken 2 years, but the Emotiv Insight headset has finally arrived. As with all early adopter kit, though, there are some hiccups. Michael Rowe, one of my fellow conspirators from back in the virtual world heyday, has blogged about his unit arriving.

Well here is my shiny boxed up version complete with t-shirt and badge 🙂

The unit itself feels very slick and modern. Several contact sensors spread out over your scalp and an important reference on the bone behind the ear (avoid your glasses) needs to be in place.

Though you do look a bit odd wearing it 🙂 But we have to suffer for the tech art.

I charged the unit up via the USB cable and waited for the light to go green. I unplugged the device and hit the on switch (near the green light). I then thought it was broken. It is something very simple, but I assumed (never assume) that the same light near the switch would be the power light. That was not the case. The “on” light is a small blue/white LED above the logo on the side. It was right under where I was holding the unit. Silly me.

I then set about figuring out what could be done, if it worked (once I saw the power light).

This got much trickier. Kickstarters all have their own ways of communicating, and when you have not seen something appear for 2 years you tend not to be reading it every day, following every link and nuance.

So the unit connected with Bluetooth, but not just any Bluetooth: it is the newer Bluetooth Low Energy (BTLE), a low-power upgrade to Bluetooth that many older devices do not support. I checked my MacBook Pro and it seemed to have the right stack. (How on earth did NASA manage to get the probe to Pluto over 9 years without the comms tech all breaking!)

I logged into the Emotiv store and saw a control panel application. I tried to download it, but having selected Mac it bounced me to my user profile saying I needed to be logged on. It is worrying that the simplest of web applications is not working when you have this extremely complex brain reading device strapped to your head! It seems the Windows one would download, but that was no good.

I found some SDK code via another link, but a lot of that seemed to be emulation. It also turns out that the iOS 8 updates broke the Bluetooth LE versions of any apps they had, so those don’t work on iPhones now. Still, some Android apps seem to be operational.

I left it a few days, slightly disappointed but figured it would get sorted.

Then today I found a comment on joining the Google Plus group (another flashback to the past) and a helpdesk post saying to go to https://cpanel.emotivinsight.com/BTLE/

As soon as I did that, it downloaded a plugin, and then I saw the Bluetooth pair with the device with no fuss. A setup screen flickered into life and showed that sensors were firing on the unit.

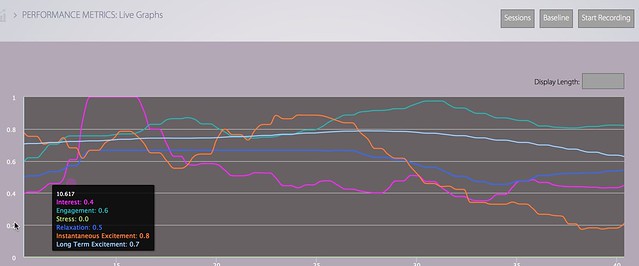

The webpage looked like it should then link somewhere, but it turns out there is a menu option hidden in the top left-hand corner, with a submenu “detections”. That let me get to a few web-based applications that at least responded. I have not had much luck training and using it yet, as often the apps would lock up, throw a warning message and the Bluetooth would drop. I did however get a trace: my brain is in there and working. I was starting to think it was not!

So I now have a working brain interface, I am Borg, it’s 2015. Time to dig up those ideas from 10 years ago!

I am very interested in what happens when I mix this with headsets like the Oculus Rift. Though it is a pity that Emotiv seem to want to charge loyal backers $70 for an Emotiv Unity plugin that apparently doesn’t work in Unity 5.0 yet. I think they may need some software engineering and architecture help. Call me, I am available at the moment!

I am also interested, given how light the unit is, in tracing what happens in Choi Kwang Do training, and in whether we can aim for a particular mindset trace, i.e. we always try to relax to generate more speed and power from things that instinct tells you to tense up for. Of course that will have to wait a few weeks for my hip to recover. The physical world is a dangerous place, and I sprained my left hip tripping over the fence around the predpets. So it’s been a good time to ponder interesting things to do whilst not sitting too long at this keyboard.

As ever, I am open for business. If you need to understand what is going on out here in the world of new technology and where it fits, that’s what Feeding Edge Ltd does: taking a bite out of technology so you don’t have to.

Space travel and fighting – Elite plus Mortal Kombat Predator

I occasionally like to flow between gaming experiences and think what they might be like as a whole. I have been playing more Elite Dangerous, this time the early access version on Xbox One. Obviously I have talked about the game before, and the Kickstarter, and the Oculus Rift etc. Strangely though, I am finding the “sofa version” even more engaging. There is a lot of sitting around flying from place to place, and it works really well on the joypad and the big TV too.

Yesterday one of the other game styles, the one-on-one fighting game, finally got the character upgrade/DLC that I mistakenly thought was there at release. Mortal Kombat has been trickling out the DLC and now the Yautja/Predator set has arrived. Obviously I have quite an affinity with the character and the lore, and I think they have done a really good job.

So I did this little Xbox montage mashup showing one of the characters in the story flying to a place to take part in a (gory comic book violence) fight. It was all recorded by just shouting “xbox record that” at the Kinect, then using Upload Studio on the Xbox to stitch it together.

I used the training room for the predator vs predator fight, as it looks a bit like a docking bay. It’s more of a short poem than a feature film, ideal for our internet attention spans. It shows off some of the nice predator work, both with and without the facemask. Some plasma cannon action in there too.

Of course, as with all great expansive games like Elite, it would be great to be able to get out of the ship and do stuff like this. I guess that may come, though No Man’s Sky may beat them to it 🙂

Microsoft Minecraft, Space and Semantic Paint

It is interesting looking for and spotting patterns and trends and extrapolating the future. Sometimes things come in and get focus because they are mentioned a lot on multiple channels, and sometimes those things converge. Right at the moment it seems that Microsoft are doing all the right things in the emerging tech, game technology and general interest spaces.

Firstly there has been the move by Microsoft to make Minecraft even easier to use in schools.

They are of course following on and officially backing the sort of approach the community has already taken. However a major endorsement and drive to use things like Minecraft in education is fantastic news. The site is education.minecraft.net

And just to resort to type again, this is, as the video says, not about goofing around in a video game. This is about learning maths, history, how to communicate online. These are real metaverse tools that enable rich immersive opportunities, on a platform that kids already know and love. Why provide them with a not very exciting online maths test when they can use multiple skills in an online environment together?

Microsoft have also supported the high-end sciences, announcing a team-up with NASA to use the blended/mixed/augmented reality headset Hololens to help in the exploration of Mars. This uses shared experience telepresence, bringing Mars to the room, desk or wall that several people are sharing in a physical space. The Hololens and the next wave of AR are looking very exciting and, very importantly, they take an inclusive approach to the content. Admittedly each person needs a headset, but using the physical space each person can see the same relative view of the data and experience as the rest of the people. They don’t have to, of course :) there is no reason for screens not to show different things in context for each user. Standard VR, however, is an immersive experience without much awareness of others. A very important point, I feel.

Microsoft have also published a paper and video about using depth scanning and live understanding and labelling of real world objects. Something that things like Google Tango will be approaching.

Slightly better than my 2008 attempt (had to re-use this picture again of the Nokia N95)

The real and virtual are becoming completely linked now. Showing how the physical universe is actually another plane alongside all the other virtual and contextual metaverses.

It is all linked and not about isolation, but understanding and sharing of information, stories and facts.

Hello Top Gear – An open application

Top Gear is looking for a new presenter to work with the already announced Chris Evans. They want someone interested in cars, obviously, and someone who can work on screen and deliver interesting things. I suspect they also need someone up for some more unusual stunts and gags.

At this point I raise my hand and say pick me! pick me!

Why?

I have always loved cars, watching them, driving them and as the technology has evolved, racing in simulations of them.

Being a tech evangelist and geek I think I am in a very good place to understand and share all the new advancements in how we propel ourselves around the planet too. The world is changing and the internal combustion engine, whilst amazing and fun, is on its way out.

On the TV show I presented on (oh yes I have some TV experience 😉 ) I shared the hydrogen fuel cell approach to moving things around. It is fascinating. I also got to do a piece on the Bloodhound world land speed record car.

Innovations in evaporation to power movement and even osmosis to generate electricity are appearing. Not to mention my other favourite subject, 3D printing. The ability to make parts or entire vehicles from rapid fabrication is a game changer.

With all the things I share and evangelise, I feel I have to be doing them and living them for it to make sense. I recently said goodbye to my much loved Subaru Impreza WRX in favour of the ultra hi-tech, all-electric Nissan Leaf, as I wrote about here.

There is also, as I mentioned at the start the entire gaming and simulation world of cars. On Cool Stuff Collective we visited a full on simulator. I jumped in and got pretty decent times despite being thrown around a lot.

Then there are the home platforms to race and custom cars. Forza, Project Cars et al.

I have been making custom paint jobs for these stunning models and driving them around at speed. Most recently in Project Cars but roll on Forza 6 and the new Trackmania.

I almost forgot. I am a martial arts instructor and black belt in Choi Kwang Do, a defensive martial art, where we pledge never to misuse what we learn in class. Just in case that is relevant 😉

Anyway, my showreel(s) are here and more articles I have written are gathered here

It’s not all simulations and ideas; sometimes you just have to drive, as here on a track day experience in a Lamborghini LP640 Murcielago, all smiles.

I am available here, and on twitter @epredator

I will of course be looking forward to finding out where and when the formal audition process starts.

UPDATE 18/5/15 17:00

Here is the quick less than 30 second video as per the suggested rules. The official site will be up tomorrow for the world to apply, but it seems a pity to wait.

UPDATE 19/5/15

The rules were published this morning and they needed a landscape video, which made sense of course. I had gone for portrait to save any distractions in the background, but I reshot this morning and here is the new one 🙂

Good luck to whoever gets the gig I am sure it will be awesome.

Alone in a crowd – RPGs and MMORPGs

Free roaming role playing games are one of the most intriguing styles of games and experiences to engage with, IMHO. I use the word experiences, not just games, in order to encapsulate social environments and metaverses such as Second Life, High Fidelity et al. However, I also did not restrict that first sentence to massively multiplayer/multiuser online games. There have been two significant releases in the past few weeks that are both huge, expansive, free roaming role playing games: The Witcher III and Elder Scrolls Online – Tamriel Unlimited. I have been struck by a few experiences that I have had in these environments, ones that reach past just playing a game or getting some points/gold/kudos/screenshots.

If you are not a gamer, or don’t engage with these sorts of things they may seem almost identical. They are certainly in a genre and have lots in common.

Witcher III – Xbox one

Elder Scrolls – Xbox One

As you can see in both you are able to take a break from any action and just appreciate the environment. This is not intended to be a graphic comparison though the slightly different styles, yet still aiming for the “real” is noticeable in these two examples.

Witcher III is a single player game with a story-driven plot, and a very long story it would seem too. Like all role playing games it rewards your play with new skills, levels and abilities as you level up. You gradually unlock the very expansive world, with all its challenges and new types of monsters and bad guys, as you progress through the main storyline. This generally involves traversing the world, searching and fighting in various ways. It has a 3rd person style of free form combat. You choose the type of weapon and various potions and armour to give you a fighting chance against increasingly higher level creatures. You can just wander off the plot and the beaten track and see what you bump into. However, this particular RPG constantly reminds you that, whilst you might feel you have learned a lot, there is still more levelling up to do, as you arrive at a place in a world full of things that can cut you down with a single strike. Level systems in RPGs generally appear as numbers on the display. Level progression is non-linear, requiring exponentially more effort and time per level, so when you have spent 20 hours playing, have just reached level 7 and wander into a clearing with a large beast that says level 35, you know you are in trouble. Witcher reminds you that no matter how heroic and skilled you think you are, there is always something bigger and better. It has a grittiness to the entire game that brings a sense of foreboding, yet it is still entertaining. However, you are very much alone and left to your own devices.
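To put some numbers on that kind of non-linear levelling, here is a minimal Python sketch of a geometric experience curve. The base cost of 100 XP and the 1.5x growth per level are hypothetical values chosen for illustration; this is not Witcher III’s actual formula.

```python
def xp_to_reach(level: int, base: float = 100.0, growth: float = 1.5) -> int:
    """Total XP to climb from level 1 to `level` on a hypothetical geometric curve.

    Each level costs `growth` times as much as the previous one, so the
    cost of the next level rises exponentially.
    """
    if level < 1:
        raise ValueError("levels start at 1")
    # Sum the cost of each level-up from 1 -> 2 up to (level - 1) -> level.
    return round(sum(base * growth ** (n - 1) for n in range(1, level)))


if __name__ == "__main__":
    for lvl in (7, 35):
        print(f"level {lvl}: {xp_to_reach(lvl):,} XP")
    # How many times more total XP level 35 needs than level 7.
    print(f"ratio: {xp_to_reach(35) / xp_to_reach(7):,.0f}x")
```

Under these made-up numbers, reaching level 35 takes tens of thousands of times more total XP than reaching level 7, which is exactly the feeling of being in trouble described above.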

Elder Scrolls Online – Tamriel Unlimited has a very different feel and offers a very different experience. It is Skyrim (the single-player RPG from a few years ago) reborn as a persistent Massively Multiplayer Online experience. Skyrim had been noted for its random generation of dungeons, combined with its epic land size and the variety of things you ended up doing in the virtual environment. Elder Scrolls has both a 3rd-person and a 1st-person view. The latter lets you see the world and just your hands and weapons. It lets you use each hand on its own, or weapon and shield combinations: you can have ranged magic in one hand and a sword in the other. You travel the world finding missions from non-player characters. Most of these involve collecting, running to and fro and, just like in Witcher III, putting things together and crafting the items you need: potions, armour, magic, food etc.

So, unlike in Witcher III, your character is in the environment with lots of other people, just as in the other giants of the MMORPG genre, World of Warcraft and Eve Online. It is quite unusual for this sort of game to make it to a console. Usually these platforms get multiplayer shooters, where 16-32 people battle for a few minutes in an arena that is then destroyed and reformed for the next battle.

Here we have a world that we all share, all the time. Of course the technical detail of which server, or how anyone is actually in the “same” place is obscured and not entirely relevant to the experience. Also the world itself is generally a locally loaded place. The servers are there to broker player position, communication and hold certain shared features in the experience, but it is not really a full persistent world.

I have found it works really well as a game to be wandering around, getting engrossed in each task, gradually levelling up, choosing skill tree paths, and working out what to carry, sell, deconstruct and craft. Lots of the missions from the non-player characters are there to help you learn the very complex crafting system as much as to move the plot along. The missions you choose also determine which guilds and organisations you belong to.

I called this post “alone in the crowd” because it is a particular feature of this style of environment that you do see lots and lots of other people, milling around playing their own missions, but you do not have to interact with them if you don’t want to. Sometimes, though, interaction is unavoidable, or you are required to work in a team.

This is by no means a crowd shot, but the character on the right is just running off somewhere. They ran past me; I paid no attention to them, nor they to me, other than that we both knew we were part of the human noise of this virtual city.

Most things in the free-roam areas are not restricted by the number of players. For example, the blacksmith station is an object you have to walk up to and select, and then take part in an inventory management/crafting dialogue. What you are doing relates entirely to you and your experience, but we all have to gather around the anvil in order to do it. This is fine when there are only a few people, but when it’s busy the room is full of gormless characters standing around.

Passing one another in the world feels like something we normally do, and it adds to the sense of a shared experience. But everyone standing around staring into space, or traversing the land mostly by jumping and bounding around to get from A to B quickly, starts to look like a complete mess of uncoordinated activity. Which of course, it is 🙂

This is a feature of most virtual world online experiences. The only time it is not is when people are role playing more seriously and rules are set to preserve the experience. This happens in some areas of Second Life, where you are encouraged to wear the right clothes and speak the right way to join in with the entertainment of acting. Rather like not turning up at a real-life Roman re-enactment on a Segway wearing a Stetson.

That is not to detract from the game, or games like it. This is a genre where stuff like this has to happen, due to the way the tech works but also the way people work. It is funny, but it can be a jolt to the immersion.

Where this sharing of space does work, though, is in battles with the things that inhabit the environment. You can be wandering around exploring and then be set upon by a pack of wolves. When you start fighting, it becomes “your” battle. However, other people passing by can also see and join in with that battle, or choose to ignore it. Those wolves become a shared experience. There are a lot more bad guys than wolves, but it’s an easy example to use. For a short period of time, you and the others who just happen to be there are no longer alone. You are working on the same problem. Now this could just be thought of as a few experience points or resources to add, share, split or ignore, but it has all sorts of extra dynamics.

If you see someone in trouble, do you help? Does your helping actually hinder and annoy them, as they were challenging themselves and their character?

Sometimes you just have to join in to be able to get the things you need to proceed. In one particular example, parts of a monster were needed in order to escape an area. If someone else killed the monster before you got any sort of hit in, you were not credited with any part of the kill and so did not get the bits you needed. This meant waiting, or searching for another one of the same type, waiting for a re-spawn and getting in there before the rest of the crowd. Game design and rules may have altered how many people might be competing for this single resource, and I did escape, but it was an interesting co-opetition dynamic.

This also led to another thought on timelines, on the immersive experience and how we are often reminded of our place in these virtual worlds. I had worked towards a small piece of the plot and defeated a particular boss, gaining a slight level-up, new kit and a small buzz of excitement at having completed something. Whilst I was standing near the same spot taking stock, a new crowd arrived. Off in the distance I heard the bad guy arrive again and go through the same “No Mr Bond, I expect you to die” style of dialogue. I watched from a distance as my fellow travellers and gamers fought the same battle, but in a different way. In my timeline, this battle was over. I was standing in what was attempting to be a coherent virtual experience, being reminded that it was not. It is not a problem as such, but it is a thing.

It is a reminder, and a breaking of the story. Yet I think there may be something in this kind of feeling of immersion followed by a shock back into reminders that it is not real. Here it is an accident of the technology restrictions, but it is a technique used deliberately in other media when the action breaks the fourth wall, such as when a stage actor talks to an audience that was previously passive. Some of the most chilling examples have come from Kevin Spacey in House of Cards. His very occasional turns to the audience to engage them in dialogue, so rare you think the writers have forgotten to include any, are very special. One of the most memorable, “Oh did you think I had forgotten about you?”, still gives me goosebumps thinking about it.

It should not matter if we choose to form clans and take on multiplayer missions, or just act on our own in these worlds. The very mechanism we are using has the ability to reach back out to us and surprise us. I have felt this in games just a few times. It may be a party trick in some cases but reaching out to the human, through the role play, through the immersion may just be the most memorable and entertaining thing a virtual world can do.

Internet Of Things – Choi Kwang Do – Fitbit

This may be a bit late to the party, but @elemming and I have got ourselves Fitbit Flex HR wearables. These little devices track all sorts of walking movement, sleep patterns and, most importantly, heart rate. The idea is that you wear them all the time and aim to do 10,000 steps a day. I was more interested in the heart rate monitor, though, and what it meant for my Choi Kwang Do training. Whilst this may stray into exercise-bore territory, I thought it worth showing our martial art in internet of things (IOT) terms with some nice graphs.

When devices and numbers are in place it is easy to over-fixate on the numbers, but it makes sense to use instrumentation as a check and balance for your own personal experience.

Over the last few weeks I have ramped up my training at home. Having practised this art for 3 1/2 years I am still very much learning, but I do have a body of experience to draw upon and a fairly consistent feel for how particular techniques are going and how much extra I can put into a pattern or speed drill. I have used heart rate monitors before: a chest strap one about 18 years ago, when I had a little burst of getting fit. (That is part of the article in my portfolio piece virtual athletes – Sports Technology and games.) It was where I came to understand the different heart rate zones based on age.
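As a rough illustration of what “zones based on age” can mean, here is a minimal sketch. It assumes the widely quoted “220 minus age” estimate of maximum heart rate and a common five-band split by percentage of that maximum; both are rules of thumb, not necessarily what Fitbit or any chest strap actually uses, and the function and zone names are hypothetical.

```python
# Hypothetical sketch: "220 - age" and these five percentage bands are a
# common rule of thumb, assumed here for illustration only.

ZONES = [
    (0.50, 0.60, "very light"),
    (0.60, 0.70, "light"),
    (0.70, 0.80, "moderate"),
    (0.80, 0.90, "hard"),
    (0.90, 1.00, "maximum"),
]


def estimated_max_hr(age: int) -> int:
    """Rough age-based estimate of maximum heart rate in bpm (220 - age)."""
    return 220 - age


def zone_for(bpm: float, age: int) -> str:
    """Name the zone a heart rate reading falls into for a given age."""
    fraction = bpm / estimated_max_hr(age)
    if fraction < ZONES[0][0]:
        return "below zones"
    for low, high, name in ZONES:
        # Each band includes its lower bound and excludes its upper bound.
        if low <= fraction < high:
            return name
    return "at or above estimated max"


if __name__ == "__main__":
    # A hypothetical 45-year-old: estimated max is 175 bpm.
    for reading in (64, 125, 160):
        print(reading, zone_for(reading, 45))
```

For a hypothetical 45-year-old, a 125bpm reading lands in the “moderate” band under these assumptions. Individual maximums vary a lot, so a measured reference point is more useful than the formula.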

I had considered other sports wearables, but the Apple Watch was a little too expensive, and other people we knew had Fitbits, so it added to the game side of things with leaderboards and data comparison.

Yesterday was my first half-day with it. I had already done 1 hour of Choi in the morning, so it missed that session. Just before our full class at Basingstoke I did a 17-minute warmup and PACE drill at home.

This involved around 5-6 minutes of stretching, then 2 minutes of moderate-pace punching and kicking on BOB, 2 minutes rest, 1 minute of faster, harder-paced kicking only, 1 minute rest and then 30 seconds flat out with everything. I then warmed down a little with my current combination.

The heart rate trace certainly fits the level of work and where I felt my heart rate was. PACE (Progressive Accelerated Cardio Exertion) is a high-intensity, short-burst training method that gradually extends and layers your range. As part of CKD we train for speed and power in short bursts rather than long repetitive exercise.

The class session ended up as a very intense one too. We have a number of soon-to-be black belt students in the run-up to a grading, so for the lesson I joined them to do our entire set of patterns. This is around 8-10 minutes of going through each coloured belt's curriculum pattern. Each pattern is a defined set of movements that builds techniques up and up. We can all do this at a good, steady pace to maintain form and technique. After that we had a specific black belt recap and evaluation of our current belt. That involved current hand techniques, current kicks, combination and pattern. This then ended with a very high intensity PACE version of the speed drills. These are what they sound like: fast, defined sets of moves. We each had a slightly different set but performed them at the same time. We did increasingly fast, multiple sets, with a final full-on blast at the end of one set (when already feeling flat out). The class then went on with both shield drills and focus mitts.

As you can see, the initial ramp is the start of the patterns, with almost no heart rate increase; then, as they progress, the heart rate starts to climb. The trough after 15 minutes is the rest period before the black belt sets. The plateau at 125bpm from just before 45 minutes is when the speed and power are still the same but the body seems to accept this is going to happen. This is when techniques flow best. It is also when the effort goes into holding the equipment for others and encouraging them. It shows the benefit of an intense PACE session easing into learning mode and technique efficiency.

My resting heart rate is showing as 64bpm after the exercise and sleep, which is not too bad.

By contrast, and in no way worse, here is the walk to school and back.

It shows how a nice steady activity, without overdoing it, keeps the heart rate in a comfortable fat-burning zone.

So armed with this information, and now with a reference point I can use the device to reflect on various elements of training and hopefully make my techniques better and more effective.

With some improvements in the sensors that detect our body movement, we should be able to make some great strides in delivering specific body movements more effectively, while staying mindful of the purer nature of a martial art: just doing it and being in the moment.

Pil Seung!