I have been trying to drop in one creative activity each day, alternating between them: sometimes it's guitar, sometimes it's drawing, sometimes it's both. I had started to try and learn to draw a few years ago, but now that I have the time and inclination again I took up a subscription to 21draw.com. This was because the artist Mark Kistler, whose excellent “You can draw in 30 Days” gave me some instant enjoyment and techniques when I tried the last time, has several series on 21 Draw. I had started back on that and will go back to it, but I got very interested in @Rodgon and his learn to draw anatomy in 21 days class. This class brought me an instant revelation, one that seems obvious, but of course these things aren’t when you don’t know them.

I had been trying to doodle Tai Chi moves as a way of contemplating and understanding them. I was looking at them as “photos” in my head and attempting the outline view, a draw what you see approach. This course is about learning to draw people from the inside out, to consider the connecting points and rotation of joints and the stretch of muscle groups. Tai Chi is more about inside than outside, and when I use 3D models in game engines it is about kinematics and bones etc. Hence it should have been obvious that I should try and draw this way, not try and trace an outline, a silhouette of someone, then “colour it in”. Boom! A ping of enlightenment and a course to help. It is one of many but you have to start somewhere.

Not only is this an enlightening idea for me, but I have also realized that just as I have spent many years learning martial art approaches I should treat these other life skills in the same way, just a step at a time. Every bit of basic practice is as important as any of the fancy stuff. There are no easy fixes or paths to anything, but there are ways to find things to help and accelerate the right sort of learning. Learning things should be fun, even if difficult and challenging. It is not a case of no pain no gain. Anyway.. on with the learning.

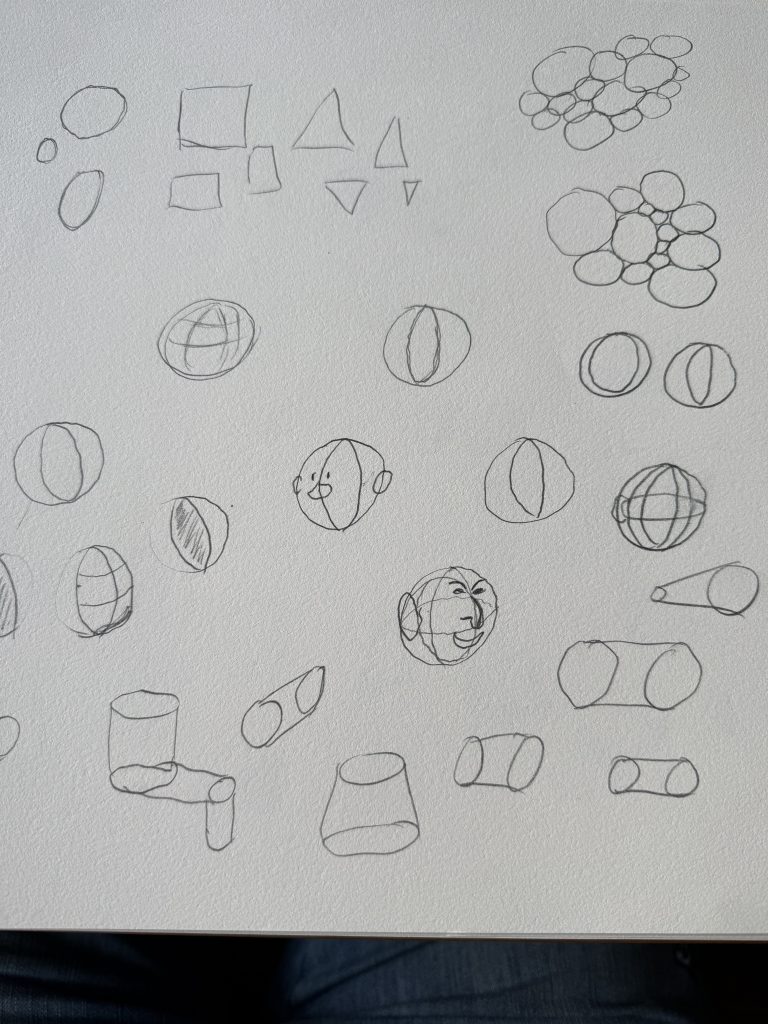

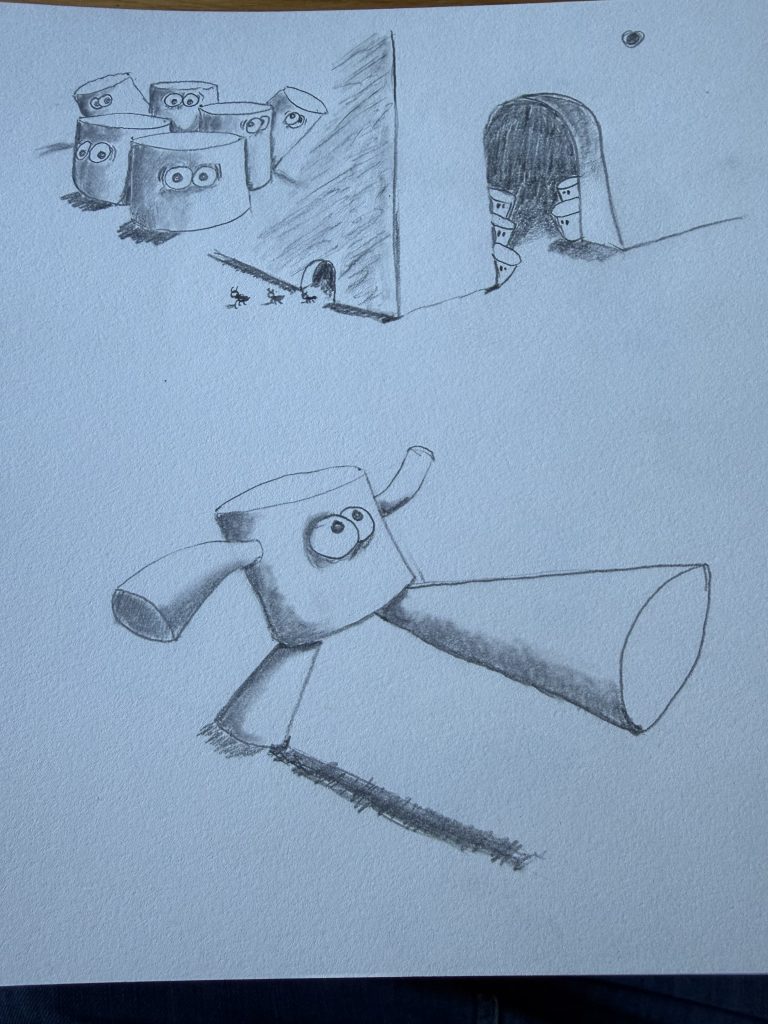

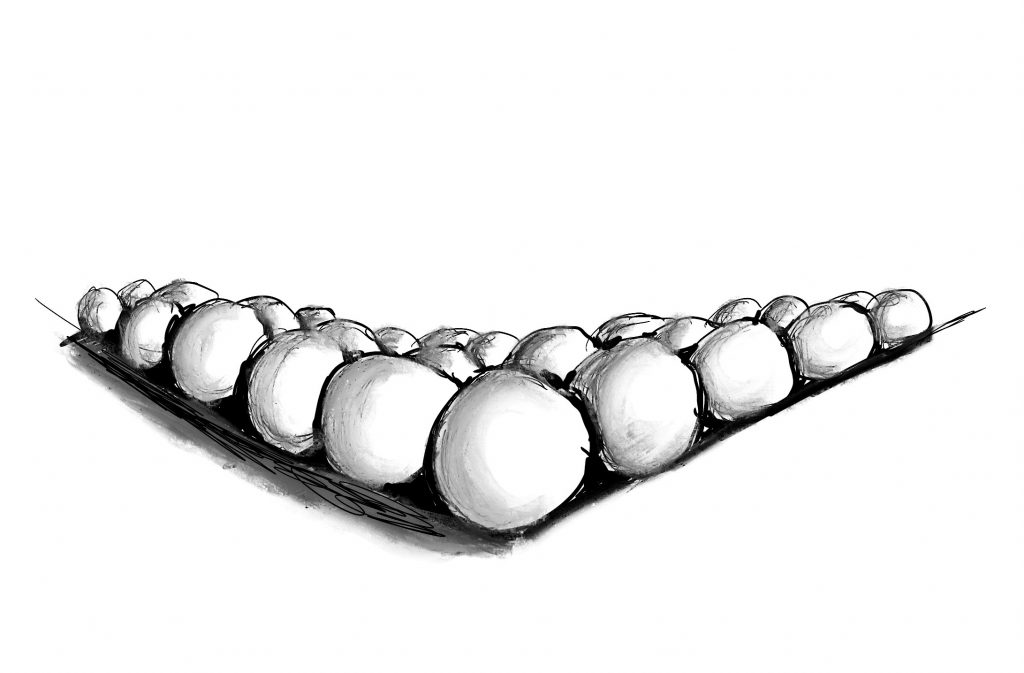

The course starts on very basic shapes: connecting circles into cylinders, and making circles become spheres with a space and volume to consider. Connecting points on either side of a sphere becomes the basis of Rodgon’s approach to all body parts, but it starts with heads 🙂

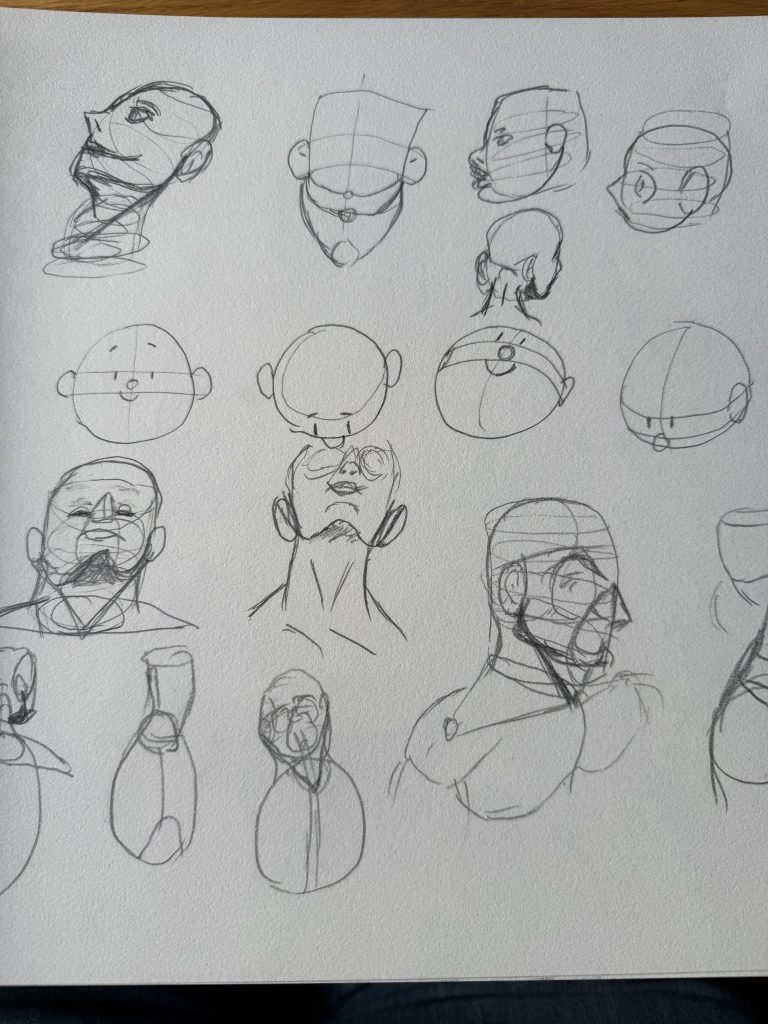

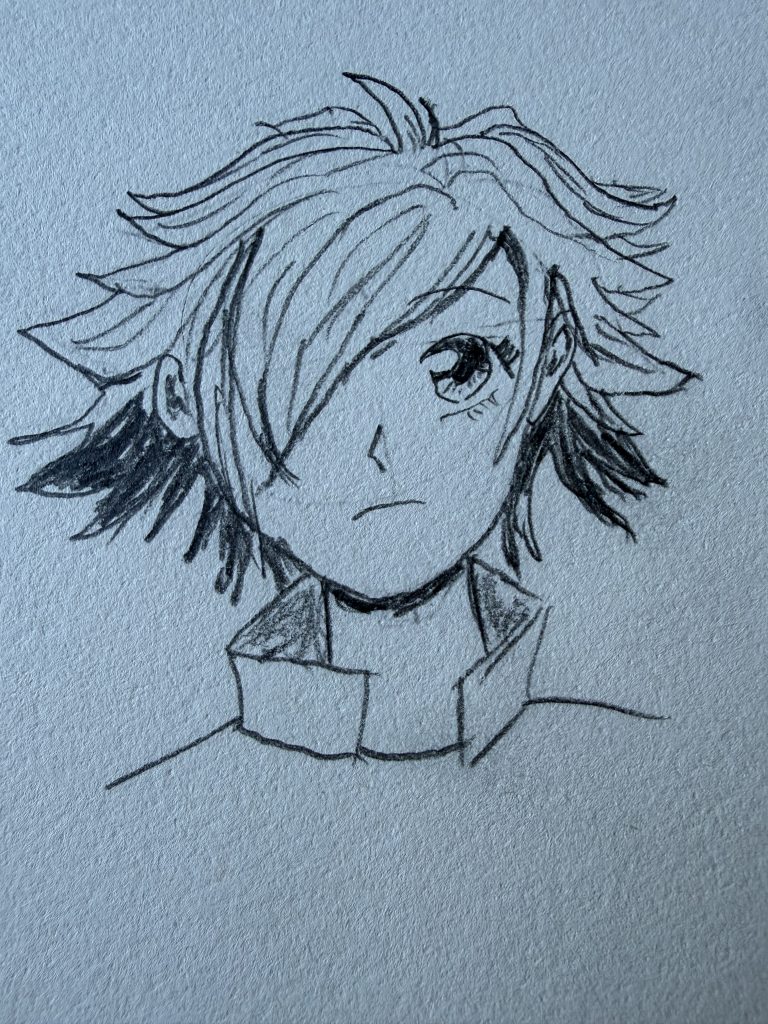

Before you know it you are exploring head and neck positions and shapes, and it's feeling rather good.

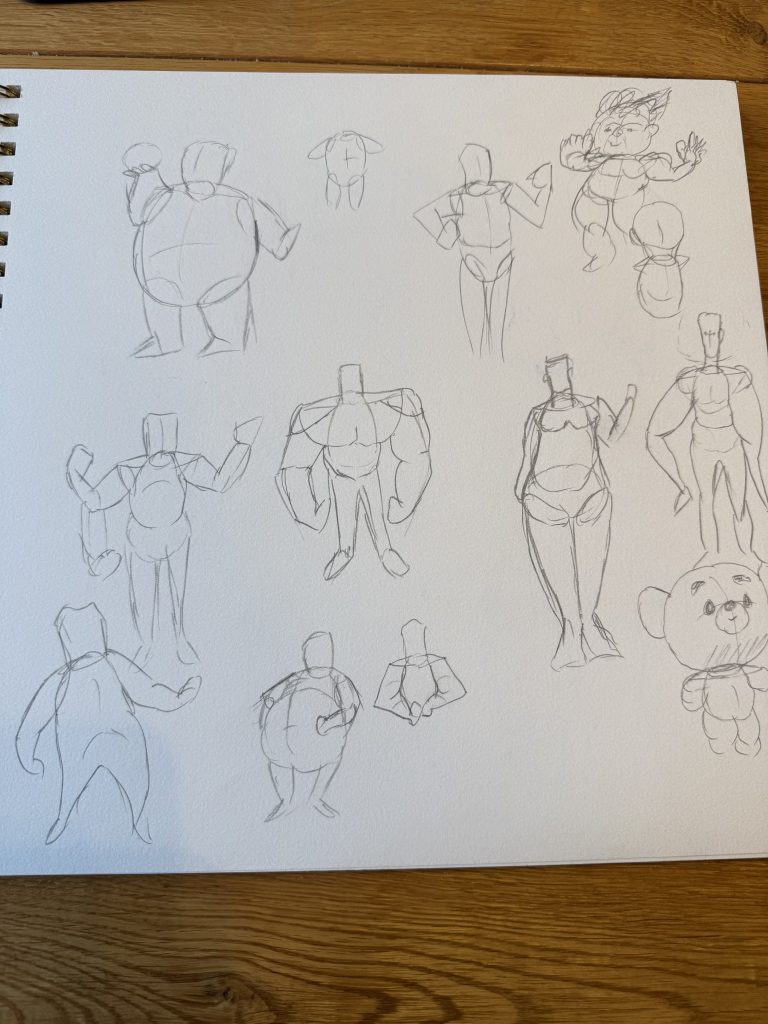

Body poses and more complex shapes soon start to appear.

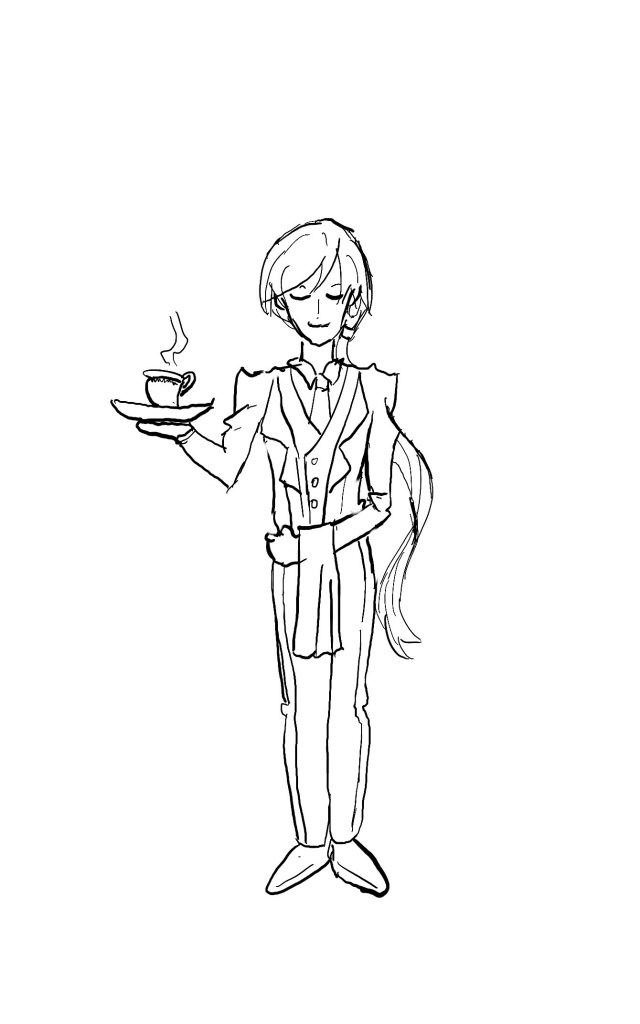

It’s all still sketches and scrappy lines, but learning things like his mantra of outside edge first then inside for volume when drawing arms and legs, combined with overlapping shapes, is really fascinating. I am looking forward to using this and the other drawing techniques I am learning from a how to draw manga book, and the shading and styles of Mark Kistler, to do some things that are going to help me learn more about Tai Chi and also storyboard my books. There are plenty more 21 Draw courses to do too.

I am playing with both pencil and paper and also Procreate on the iPad.

It is odd that, with all the AI generation capability I have, I am feeling the need to keep it human and learn the craft, the art. I may just stick to these basics, but each time I doodle something there is a learning moment. Practice makes perfect 🙂

The Flickr album of my scrawls on this anatomy course is here:

https://www.flickr.com/gp/epredator/3Tk65bdP53