As I am looking at a series of boiled-down use cases for virtual world and gaming technology, I thought I should return to the exploration of body instrumentation and the potential for feedback in learning a martial art such as Choi Kwang Do.

I have of course written about this potential before, but I have built a few little extra things into the example using a new Windows machine with a decent amount of power (HP Envy 17″) and the Kinect for Windows sensor with the Kinect SDK and Unity3d package.

The package comes with a set of tools that let you generate a block man based on the joint positions. The controller piece of code also has some options for turning on the user map and skeleton lines.

In this example I am also using Unity Pro, which allows me to position more than one camera and have each of those generate a texture on another surface.

You will see the main block man appear centrally “in world”. The three screens above him are showing a side view of the same block man, a rear view and interestingly a top down view.
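As a rough illustration of what those extra views amount to, here is a minimal Python sketch that flattens the same set of 3D joint positions into front, rear, side and top-down views. It is not the Unity camera setup used in the recording. The joint names, the axis convention (x to the right, y up, z away from the sensor) and the `project` helper are all assumptions made for the example.

```python
from typing import Dict, Tuple

Joint = Tuple[float, float, float]  # assumed convention: x right, y up, z away from sensor

# Hypothetical view names; each one drops or flips an axis to get 2D coordinates.
VIEWS = {
    "front": lambda x, y, z: (x, y),
    "rear": lambda x, y, z: (-x, y),   # mirrored left/right when seen from behind
    "side": lambda x, y, z: (z, y),    # depth becomes the horizontal axis
    "top": lambda x, y, z: (x, z),     # looking straight down, height is discarded
}


def project(joints: Dict[str, Joint], view: str) -> Dict[str, Tuple[float, float]]:
    """Return the 2D position of every joint as seen from the named view."""
    to_2d = VIEWS[view]
    return {name: to_2d(*position) for name, position in joints.items()}


if __name__ == "__main__":
    # Made-up sample positions in metres, purely for demonstration.
    skeleton = {"head": (0.1, 1.7, 2.0), "right_hand": (0.5, 1.2, 1.6)}
    for view_name in VIEWS:
        print(view_name, project(skeleton, view_name))
```

The top-down view is just the same data with the height thrown away, which is why it costs nothing extra once the joints are captured.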

In the bottom right is the “me” with lines drawn on. The kinect does the job of cutting out the background. So all this was recorded live running Unity3d.

The registration of the block man and the joints isn’t quite accurate enough at the moment for precise Choi movements, but this is the old Kinect. The new Kinect 2.0 will no doubt be much better, as well as being able to register your heart rate.

The cut out “me” is a useful feature, but you can only have that projected onto the flat camera surface; it is not a thing that can be looked at from left/right etc. The block man, though, is made of actual 3d objects in space. The cubes are coloured so that you can see joint rotation.

I think I will reduce the size of the joints and try and draw objects between them to give him a similar definition to the cutout “me”.
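Drawing something between two joints mostly comes down to knowing where the middle of the bone is and how long it is. The following is a minimal Python sketch of that calculation, not the Unity code itself. The joint names, the `BONES` list and the `bone_segments` helper are hypothetical and only there to show the idea.

```python
import math
from typing import Dict, List, Tuple

Joint = Tuple[float, float, float]

# Hypothetical joint names; a real skeleton would list every connected pair.
BONES = [("shoulder_right", "elbow_right"), ("elbow_right", "hand_right")]


def bone_segments(joints: Dict[str, Joint], bones=BONES) -> List[dict]:
    """Work out where to place and how long to stretch an object for each bone."""
    segments = []
    for start, end in bones:
        if start not in joints or end not in joints:
            continue  # skip bones whose joints are not currently tracked
        a, b = joints[start], joints[end]
        midpoint = tuple((u + v) / 2 for u, v in zip(a, b))
        segments.append(
            {"from": start, "to": end, "midpoint": midpoint, "length": math.dist(a, b)}
        )
    return segments


if __name__ == "__main__":
    # Made-up positions in metres.
    arm = {"shoulder_right": (0.2, 1.4, 2.0), "elbow_right": (0.45, 1.2, 1.9)}
    print(bone_segments(arm))
```

An object placed at the midpoint, scaled to the length and pointed from one joint to the other would fill in the gap between the cubes.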

The point here though is that game technology and virtual world technology is able to give a different perspective of a real world interaction. Seeing techniques from above may prove useful, and is not something that can easily be observed in class. If that applies to Choi Kwang Do then it applies to all other forms of real world data. Seeing from another angle, exploring and rendering in different ways can yield insights.

It is also data that can be captured and replayed, transmitted and experienced at distance by others. Capture, translate, enhance and share. It is something to think about. What different perspectives could you gain of data you have access to?


Flush Magazine, Holodecks, Kinect patents and geek history

The latest edition of the wonderful Flush magazine has just been published. In this issue, amongst all the other great content, I have put forward some views on how close we are to a Holodeck with respect to some inventions we already have and some that may be in the pipeline. So have a read and see what you think. Microsoft Kinect, projectors and a little bit of geek history all feature.

Or you can just link to here though I do recommend reading the whole magazine!

As usual it looks awesome too. I think it is fascinating the journey an idea takes from words and some image suggestions to a full layout beautifully presented. Thank you to @tweetthefashion as usual for doing such a great job putting this all together, and a wave to all my fellow contributors.

The iPad edition will hit the shelves very soon too 🙂

Update: I just realised I am in the same Magazine as an interview with Steve Vai too – RAWK!!!!

More Choi Kwang Do kinect tests

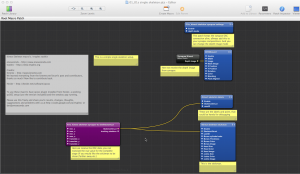

Having tried using a relatively complex set up for the previous test of Kinect on the Mac, specifically for enhancing or viewing Choi Kwang Do moves, I came across a much richer way to visualise it. This uses the set of macros created by Tryplex in Quartz Composer on the Mac.

Oddly I had not come across the incredibly powerful and useful Quartz composer sat here on my machine!

It allows structured linking of macros and rendering to create motion graphics and sound.

The Tryplex toolkit has a lot of examples of how to process skeleton information and render it. It comes in two flavours. One uses the more complicated to set up OpenNI/Nite/OSCSkeleton as the input for the data, i.e. the setup I had previously for the simple viewer. The other uses a quicker to run app requiring no setup called Synapse. Despite having the other pieces set up I just used the Synapse app for this one.

You simply copy a few macro files into the quartz libraries, run synapse to start the kinect and then run the Quartz simple skeleton.

This requires a cactus stance for calibration, unlike my previous uncalibrated example. Then away it goes.

I am by no means a reference pattern for the Choi moves, but I did try a yellow belt pattern and also a yellow senior speed drill, plus a few others. I also threw in some mistakes, like punching from the front foot not the rear, to see how easy it is to spot them. (I also tried a music replace with YouTube, tongue in cheek 🙂 )

I have to say I was impressed! There are a whole load of other coded demos that show acceleration on a particular body part, or a very cool one involving shaping and moving a cube as a gesture object.
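The acceleration demos are Quartz Composer macros, but the underlying idea can be sketched in a few lines of Python. This is an assumption about the general technique (a central finite difference over three consecutive frames), not a description of how the Tryplex macros actually do it. The `accelerations` helper and the sample data are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def accelerations(positions: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Estimate acceleration of one joint from evenly spaced position samples.

    Uses the central second difference (p[i+1] - 2*p[i] + p[i-1]) / dt**2,
    so the result has two fewer entries than the input.
    """
    result = []
    for i in range(1, len(positions) - 1):
        prev, cur, nxt = positions[i - 1], positions[i], positions[i + 1]
        result.append(
            tuple((n - 2 * c + p) / (dt * dt) for p, c, n in zip(prev, cur, nxt))
        )
    return result


if __name__ == "__main__":
    # Made-up hand positions (metres) sampled at an assumed 30 frames per second.
    hand = [(0.0, 1.2, 2.0), (0.01, 1.2, 1.98), (0.04, 1.2, 1.93), (0.09, 1.2, 1.85)]
    for frame, acc in enumerate(accelerations(hand, dt=1 / 30), start=1):
        print(frame, acc)
```

Real skeleton data is noisy, so some smoothing over a few frames would probably be needed before the numbers mean much for a punch.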

Now I have to figure out how to write Quartz macros, just another tech language 🙂

It also looks like a great way to combine the inputs from something like the BPMPro with the Kinect that I mentioned yesterday

Roll on tonight and another session at South Coast CKD to keep learning and on the journey

Are you a Han or are you a Muppet? – Game crossovers

It seems the interwebs are awash with the horror of a much revered franchise, Star Wars, over stepping the mark of good taste and decency with respect to its characters. The recent Kinect Star Wars appears to not just be the light sabre and force push gestures that fans were looking for, but instead has thrown in some dancing sections where you get to boogie on down with Han Solo and the Princess.

After the backlash of the recut Darth Vader “Nooooooo!” moment and the amazingly tacky Vodafone Yoda adverts, combined with PC World representing the Evil empire it seems that nothing is sacred in Star Wars anymore.

It is easy to get po-faced about the seriousness of a beloved icon that many of us grew up with. I was 10 in 1977 when Star Wars came out, so it, along with Star Trek and Blake’s 7, shaped what I do. I have fond memories of the characters and of the science. Games and games marketing have often had bad film tie-ins, yet Star Wars has generally had a good run. The Star Wars Lego games in particular would at first seem a disruptive cash-in, but they played more to the playfulness of recreating the Star Wars universe, as we all did after we had seen it for the first time and played Jedi. They are tongue in cheek because they are outside the actual virtual reality of Star Wars. The video above, though, is aiming to be photo realistic, not a lampoon, and along with Yodafone it takes something away from a cherished experience that then gets tainted by a bad-taste cash-in.

For once I decided not to pre-order a game and left Star Wars Kinect for another day. I had heard tales of the content and style and I thought do I actually want to pay for the privilege of being annoyed by how a game does not fit.

This is an example of the sort of crossover that we often talk about not doing in Virtual worlds. Whilst people want content from place x in place y, unified avatar logins etc they really can mess things up for everyone culturally.

A crossover that does work is Little Big Planet Muppets. In a way this is like Star Wars Lego in that it is a cartoon lampoon of the Muppets. Of course the Muppets are themselves cartoon lampoons, so it manages to reinforce the fun that is the Muppets. They are comedy characters that are based around odd things happening. Yoda was of course heavily related to the Muppets too. I think, though, that along with not doing appalling game tie-ins of films there should be a responsibility to a brand to not overdo its game ripping off. It used to be the case that light sabres were not allowed for gamer controlled characters, as George Lucas held them up as a higher order device that you needed to be a real Jedi to wield. That may have been too far the other way, but it seems he has given up and is just raking it in now, which is such a pity.

As you can see above this is great fun, a fantastic blend of the muppet look and the homemade Little Big Planet materials.

I can’t wait to see a remix of this brilliant song done as “Are you a Han or are you a Muppet”

Sparkling on Kinect with 3d finger drawing

Now that I realize I can quicklaunch the Kinect Fun Labs apps individually and do not have to go through the uncharacteristic lockups caused by the Fun Labs launcher app I am getting to see some more of the gadgets.

This one is called Sparkler and its results are interesting. You draw in 3d on a backdrop that is made from a foreground and a background shot. What is really great is that this is using slightly more precision on the Kinect. To draw you hold up two fingers as a pointer, and you stop drawing by clenching your fist. Up to now all games have treated your hands as dots at the end of your arms.

The video is very short, but it is what it generates, along with stills, for sharing on Kinect Share.

I am waiting on my call for the next Marvel super hero film 🙂 I can be g33kspark or something 🙂

Is that a dancing shoe or a shoe dancing?

E3 this year had some announcements about the Xbox and its Kinect. I was surprised that the Kinect Fun Labs arrived straight away on an update. These are a set of Kinect toys and apps, many inspired by the open source pioneers who built applications as part of Kinect hacking.

The one I tried was Googly Eyes. You hold up any object and the kinect scans in the front, and then the back. It then processes for a few seconds and adds comedy googly eyes to give the object some character.

The thing on screen is then controlled by your body movements. So it is just a few seconds from static real life object to a virtual puppet. As you can see, here is one of elemming’s shoes dancing.

It is very well done. I am sure with the right lighting and object you can make things that are much more complicated.

There are another few apps and more on the way to show off advances in what kinect can do. There is also an avatar creator that makes an xbox style AV but that resembles you (something the nintendo 3DS does well too 🙂 )

Very cool, very interesting and I will be exploring it some more.

Introducing open source to kids TV – yes really!

I really enjoyed the chance to explain something really important on this week’s Cool Stuff Collective. The core of the piece this week was the principle of Open Source collaboration. I had started to lead up to this concept with the Wikipedia piece a few weeks ago, showing that anyone can get involved and contribute, not just consume, on the web.

The way to approach open source, though, had to be something other than the “traditional” software applications such as the Linux operating system. Whilst it is one of the most advanced and technically rich exemplars of this self-organisation and support ecosystem, it’s really not compelling enough for kids.

The open source libraries for the Xbox Kinect however are spot on. It is a triumphant story of the explorers out there seeing what they could do with what is already an amazing piece of consumer technology. It being the big xmas hit only a few weeks ago most people can relate to it and what it does in the context of the Xbox. Many of the viewers will have played with one too.

The open source community gathered and hacked the Kinect, released the code, and then people started gathering and building more and more things, all so fast that it highlights the speed with which disruptive innovation can side swipe large corporate entities. In the first few days of the hacking Microsoft took a “not with my box of bunnies” approach. Legal proceedings were threatened etc. Somewhere, somehow there was someone with enough sense to stand back and say… “wait a minute, at the very least this is selling even more Kinects, people are buying Kinects who don’t have Xboxes”. After all no harm was being done really, the Kinect was not being stolen, it was not a DRM issue. The thing has a USB plug on it! Now it may have been all calculated to frown and then embrace the hacks, but however it has worked out Microsoft come out pretty well having decided to join the party rather than stop it. Whilst not specifically part of the open source movement(s) they are releasing a home hacking kit.

The choice of how to work with your kinect on a computer is a varied one but just for the record (as we did not give any names/URLs out on the show)

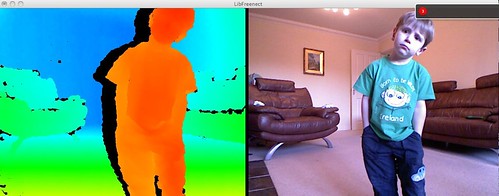

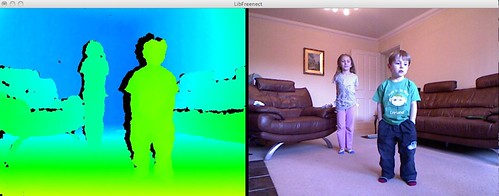

I used (and hence was helping to support) the Libfreenect piece of software on my Mac. All the info you budding hackers need is at Openkinect.org

This let me show Sy the depth of field display running on a Mac. The left hand colour picture reflects distance, one of the key points of the Kinect in sensing movement over an above a regular webcam. I was not altering any code just showing what was available at its very basic level.

I also demoed the audio hack of a theremin, the Therenect, by Martin Kaltenbrunner of the Interface Culture Lab. I bumped into this demo via a serendipitous conversation about what a theremin actually is and how it works just before putting this piece together. Martin is also one of the inventors of the ARTag and TUIO integrations that I used in the AR show in Unity3d and the brilliant Reactable that I hope will be in the final Big gadget adventure film towards the end of series 2. (So a friend of the show, as his stuff just works whenever I try it!)

There are of course lots more things going on and so many good examples of people working on the Kinect and hooking up other free and accessible pieces of code, and more importantly sharing them. @ceejay sent me this link on twitter after the show aired.

Hopefully next (and final record for the series) I will get to do the Opensim piece, more open source wonderfulness to build upon this and the previous conversations.

Many people are not aware just how complicated Open Source is as a concept and the implications it has as part of any ecosystem. It is a threat and an opportunity, a training ground for new skills, a hobby and a political minefield of egos, sub cultures and competing interests. What comes out of the early days of Open Source is usually very rough, but it works. If it does not work quite right you change it and contribute back. We have yet to see the ultimate long term effects of open source in a networked world. We have though seen it make massive changes to the software industry, but the principles of gathering and sharing and building apply to way more than our geeky business. It is about governments, banks, manufacturing and even the legal system. It is, not to put too much pathos on this, the will of the people. (Just not always the same people who consider themselves in charge or market leaders.)

Open source projects also tend to spring up in response to a popular commercial event, challenging Windows with Linux as an example. Without something big and unwieldy, or not done quite how people really want it done, an open source movement will not form with enough passion and gravitas. That is not to say that people do not release lots of things as open source. You write code and share it, build and show etc, but that is open sourcing and not the complexity of an open source movement I think.

So, a heavy subject once you drill down, but it is the future and it’s already here.

Open source is messy, it is about people, and it tends to not fit all the preconceptions of a product. However people tend to expect a product to work and be supported the same as if they paid for it. That is why there actually is a financial and business opportunity in wrapping open source up, and providing labelled versions and services with appropriate licensing. The people that build still need to eat and be recognised for their work too. So it is by no means just a load of free stuff on the internet, but you are free to join in, and I hope some kids will be inspired to at least take a look or ask their parents and teachers about the social implications of all this too.

Having a go with Kinect Hacks

For reasons that will become apparent in a few weeks time I needed to see if I could get my Mac to talk to the Kinect using the brilliant open source OpenKinect.org. I don’t do too much in the command shell on my Mac so the realms of Homebrew and MacPorts mentioned in the instructions, whilst I knew what the point of them is, meant that my machine was in a bit of a state.

I had used something called Fink a while back, but could not remember why, so I tried the Homebrew instructions. That failed, as I had too many paths and bits not very happy to take what is a ready made package. So instead I went down the MacPorts compile-it-yourself path.

http://openkinect.org/wiki/Getting_Started.

The glview application then ran nicely and told me I had 0 kinects attached to my Mac 🙂

A prerequisite for this is to have the kinect with a power supply as opposed to bundled newer xbox and kinects where the power is built in. I simply took the Mac to the xbox, unplugged the USB from there and popped it into the Mac, ran glview again as a test. Bingo!

At its very basic mode you can see the colouring for depth being rendered as the predlets are nearer or further from the device.
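To give a feel for what colouring by depth means, here is a minimal Python sketch that maps a distance reading to a colour, red for near and blue for far. This is only an illustration of the idea; it is not how glview itself picks its colours, and the `depth_to_rgb` helper and the near/far range in millimetres are assumptions.

```python
from typing import Tuple


def depth_to_rgb(depth_mm: float, near: float = 500.0, far: float = 4000.0) -> Tuple[int, int, int]:
    """Map a depth reading to a colour: red when near, blue when far.

    The near/far limits are assumed values; readings outside the range are clamped.
    """
    t = (depth_mm - near) / (far - near)
    t = max(0.0, min(1.0, t))  # clamp to the 0..1 range
    return (round(255 * (1 - t)), 0, round(255 * t))


if __name__ == "__main__":
    # A made-up row of depth readings, as if someone walked towards the sensor.
    for reading in (3800, 3000, 2200, 1400, 600):
        print(reading, depth_to_rgb(reading))
```

Applying a function like this to every pixel of a depth frame gives the sort of picture where people change colour as they move nearer or further away.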

Next step is to hook into the libraries and make sense of the data 🙂

Product development crowds – Kinect hacks

I have really enjoyed the explosion of Kinect hacks that have taken place in such a short period of time since the release of the Kinect. The technology of the Kinect is fascinating in its own right (you can read more from the actual engineers here)

However it’s the consumer use of the device on easily accessible devices that really is driving things forward. Previously games hardware was locked away with preferred developers, and it was hard for ideas to happen anywhere other than in the studios. Here, however, we have people trying out all sorts of demonstration applications. Some are sensible, some are mad, but they all really help drive forward the product development.

Microsoft were initially saying they were unhappy at this, but I find that hard to believe in this day and age as giving things over to people to experiment and share globally is crowd sourcing at its best.

Not every demo gets released as an opensource piece of code but many of them are using an open source base and a commercial product with the Kinect.

In many ways this will expose more people to the concept of open source development. Let’s face it, most people would not really grok the open source and sharing interaction that goes together to make software like Linux or Droid or even Opensim. However when videos pop up of a device they have only just seen in the shops, and may indeed have, doing things that are not sat on the shelves of game shops, it becomes very real and prompts the question: how are they doing that? Why are they doing that? Why are some people just giving away what they have done?

No doubt the games developers are looking at all the hacks and getting seeds of ideas or seeing things as proof of concepts that will drive even better kinect games.

Kinect and motion sensing is not the be all and end all of human computer interaction, but it does work well for YouTube and Vimeo demonstrations and pushes the world forward. Now if the Kinect had been locked down as Xbox only and not hacked in this way, there would have only been about 20 Kinect applications whilst we wait on the more polished production of the games companies.

Standards exist already as can be seen from this article from the excellent Kinect Hacks site which is a great place to follow this trend.

Kinect – Yes it’s fantastic

The current Cool Stuff Collective show airing, or Tx8 (of 13) as they call it in the trade 🙂, features yet more great and cool gadgets and games. For me though the true star of the show was the Xbox Kinect. Having it set up in the studio and having had a little bit of time to look at it, and seeing other people’s reactions to it, it truly is one awesome piece of kit.

The show aired on Monday 1st November on CITV but you can see it on ITV this Saturday 6th on ITV1 9:25.

Being able to stand up and present this and explain it to Sy let me do my usual arm waving. What was great was that the avatar on the screen was obviously mirroring me as I occasionally stopped steering the boat with Sy and gesticulated to get the points across about how revolutionary this really is for commercial in-the-home technology.

Seeing the debug console earlier and all the points of the body and various cameras at work is a very surreal experience too. Obviously the games are about controlling an environment and immersion, but the avatars responding to you is more about self reflection. You know it’s not you, it’s not really a video of you, but a fully rigged puppet responding to you. This is much more engaging and emotive than I expected it to be.

Of course being The Cool Stuff Collective it can’t all be serious philosophical points about the nature of self and existence.

So here is a picture of Monkey, Me and the Pop Crash Grannies Janice and Victoria as we have a joke sleepover party at the end of the show.

Yes that is a seagull flying past on the end of a fishing rod… oh I gave the secret away….

Yes we also had the introduction of Donkey to the show this week and the return of Cave Girl

Of course the irony/serendipity of working with Cave Girl, who arrived on the show due to Monkey inventing a time machine that I helped him with, when my main presentation on the direction of virtual worlds and gaming technology is called Washing Away Cave Paintings, really can’t be ignored either. It’s all linked you see 🙂

As is the whole Chicken suit in fable3 that I mentioned here

Here is to next week with yet more bizarreness.