Holidays give you time to ponder and inject serendipitous thoughts and events into your life. For me, this time it was seeing an episode of Coast telling part of the story of Albert Einstein in Belgium. It got me thinking about relativity and the points of view that people approach things with. In particular, this related to just how many people and things need to be in a virtual space at any one time.

If you arrive at metaverse interaction from a “business” perspective, or are used to Second Life and/or Opensim, then not being able to have more than 50 avatars in one region or space at a time is viewed as an unfortunate limitation. The point of view being used is that of needing to be a mass communication vehicle, running huge events for thousands of people who will all listen to your message. That, however, is a flat web mentality: a billboard, even with interaction and a sense of belonging.

If you arrive at metaverse interaction from a “games” perspective, having 32 people in one space performing a task is pretty much the maximum (in general). Having more doesn’t always add to the experience. OK, so Eve Online has 60,000 users on one server, but you don’t really get to see them. It is also the exception. A few games have dabbled with FPS scenes with 128 people battling. Your battle, however, is with the few dug in around you, seldom with the people on the other side of the map.

So we have two relative viewpoints. It is odd that the “business” perspective, which is about meetings and communication, sharing ideas and understanding, will raise the objection of “oh, only 50 people?” when in fact the gatherings it needs are small groups collaborating on fast ideas. The games industry direction is one of “what’s the point of having all that freedom and that many people?” when that industry is about mass take-up and use of produced resources.

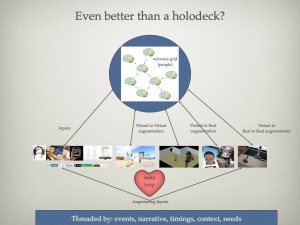

If we had the ultimate virtual environment that could house an infinite number of objects and avatars at amazing resolutions, would we be any better off in working out how to communicate or play with one another?

Is it the restrictions of the mediums themselves that bring the greatest creativity?

If everyone could log on and all be in one place, surely we would still have sparse areas where no one was, or overcrowded areas where it seemed so busy that you would use your client to not bother rendering quite so many people.

I am not saying we should not strive for more and bigger spaces; I would love to see them. I am also not saying let’s stick with 32 soldiers in a war-torn town. I am saying that everyone should consider the other perspectives of what they are trying to do and why, and not discount the other view. The chances are the other view may be what you actually want to do.

I was also struck by scale and relative size whilst wandering around the amazing sand sculptures in Portugal. It was like a texture-free virtual world.

Each of these is crafted from millions of particles of sand. It is not just a surface rendering; the whole thing is sand right through. No computer-style rendering shortcuts. So each particle is a physical pixel, a phixel? It takes up space and can only be viewed by the number of people who can fit in the space. The constraints of the medium again make it interesting. There is a choice to sculpt in lots of materials, yet this huge gathering chose to use only sand, because of its properties and impact and the craft and talent required to use it.

This all blended in mentally with Minecraft.

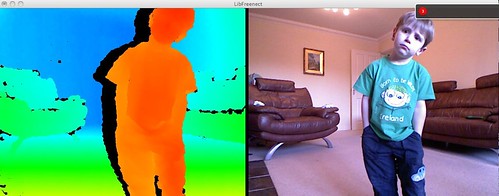

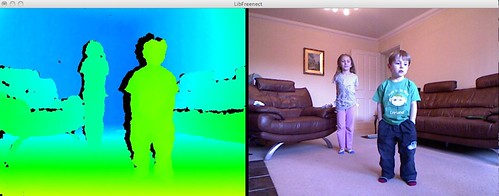

The predlets got interested seeing me start to play and actually understand the point and pointlessness of Minecraft.

I was only really doing a few bits and pieces, but once you start digging and rebuilding, combining and digging some more, it is (as its success has shown) a totally intriguing experience. I think it works because of its Lego-like qualities. You are restricted in the medium and in the resources, but the challenges you set yourself become very real despite the old-school, Doom-like block graphics.

So once again it is all relative; there is no one perfect ultra solution that is everything to everyone.

In the meantime, it is good news that Intel is pushing forward with 1,000 avatars in a sim (i.e. more than most high-end games would dream of going near), as reported here http://www.hypergridbusiness.com/2011/06/1000-avatars-soon-coming-to-a-region-near-you/ and there are some interesting papers on how Opensim scales and works from Intel here