Way back in 2006, when many of us started to use the persistent, multi-user, networked virtual world with self-created content (Second Life) in all sorts of places like business and education, we thought we were on the cusp of a virtual world revolution. As a metaverse evangelist in a large corporation it was often an uphill struggle to persuade people that communicating in what looked like a game environment was valuable and worthwhile. It is of course valuable and worthwhile, but not everyone sees that straight away. In fact some people went out of their way to stop virtual environments and the people who supported and pioneered their use. Being a tech evangelist means patiently putting up with the same non-arguments and helping people get to the right decision. Some people of course just can’t get past their pride, but you can’t win them all.

For me and my fellow renegades it started small in Second Life.

I also captured many of the events over the course of that first year in a larger set, along with loads of blog posts on eightbar, including this, my first public post, which always brings back a lot of memories of the time and place. It was a risky thing to do (if you are worried about career prospects and job security) to post publicly about something that you know is interesting and important but that is not totally supported.

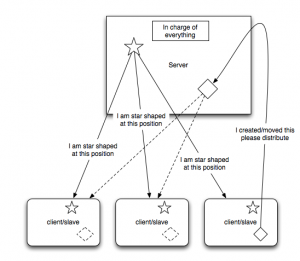

One of the many ways people came to terms with virtual worlds was in the creation of mirror worlds. We all do it. In a world of infinite digital possibilities we ground ourselves by remaking our home, our office, our locale. We have meeting rooms with seats and screens for PowerPoint. This is not wrong. As I wrote in 2008 when we had a massive virtual worlds conference in London:

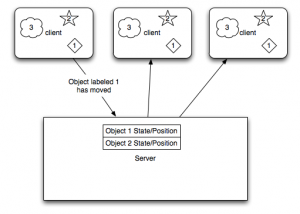

It seems we are seeing many more mirror world applications appear once more, and generally the builds are in Minecraft. It is interesting that Minecraft has all the attributes of more detailed virtual worlds like Second Life and Opensim. It is a persistent virtual environment. It has the 8 bit block look and much less detailed and animated avatars, but it does have a very easy approach to building. You stack blocks. You still have to navigate the 3d space, get your first person view in the right place and drop a block. (Many of the objections in 2006 were from people who felt they could not navigate 3d space easily, something that I think has started to melt away as an objection.) The relative resolution of a build in Minecraft is low; you are not building with pixels but with large textured blocks. This does have a great levelling effect though. The richer environments require some other 3d and texturing skills to make things that look good. Minecraft is often compared to Lego. In Lego you can make very clever constructions but they still look like Lego. This becomes much less of a visual design challenge for those people less skilled in that art. A reduced palette and a single block type mean not having to understand how to twist graphic primitives, upload textures, consider lighting maps etc. It is a very direct and instant medium.

Minecraft has blocks that act as switches and wires that allow the creation of devices that react, but it does not have the feature that I used the most in Second Life and Opensim: being able to write code in the objects. There are ways to write code for Minecraft mods, but it is not as instant as the sort of code scripts in objects in more advanced virtual environments. Those scripts alter the world, respond to it, push data to the outside and pull it back to the inside. They preserve state, create user interfaces etc. That allows for a more blended interaction with the rest of the physical and digital world. It is all stuff that can be done with toolsets like Unity3d and Unreal Engine etc, but those are full dev environments. Scripting is an important element unless you are just creating a static exhibit for people to interact in.

Minecraft also lacks many of the human to human communication channels. It does not have voice chat by default, though it is side loaded on some platforms. It has text chat, but it is very console based and not accessible to many people. The social virtual worlds thrive not just on positional communication (as in Minecraft, where you can stand near something or someone) but on other verbal and non verbal communication.

The popularity of Minecraft has led to some institutions using it to create, or wishing to create, mirror worlds. Already there was the Ordnance Survey UK creation, which “is believed to be the biggest Minecraft map made using real-world geographic data.” (Claiming firsts for things was a big thing back in 2006/7 for virtual worlds.) It has just been updated to include houses, not just terrain. By houses, though, this is not a full recreation of your house to walk into but a block showing its position and boundary. This map uses 83 billion blocks, each showing 25m of the UK, according to a story by the BBC.

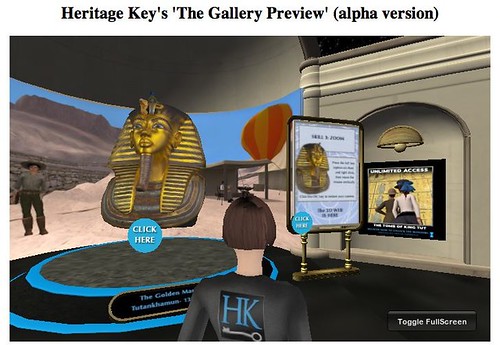

The BBC also reported this week on the British Museum looking at recreating all its exhibits and its buildings in Minecraft. This is an unusual approach to their exhibits. It will be an interesting build and they are asking the public to help. So once again Minecraft’s simplicity and accessibility will allow anyone to create the artefacts. It will, however, create a very low rez, low texture experience. So it is a mirror world, in that it will be like the museum, but it is more of a model village approach. You admire the talent it takes to make it in the medium. It seems more of an art project than a virtual world project. It is all good though 🙂

The BBC seem to be on a Minecraft vibe just as they were on a Second Life vibe back in the last wave. Paul Mason and BBC Newsnight came and did a piece with me and the team of fellow virtual world pioneers in 2007. We were starting to establish a major sub culture in the corporate world of innovators and explorers looking into how we could use virtual worlds and how it felt. Counter culture of this nature in a corporate is not always popular. I have written this before, but on the day we filmed this for the BBC I had my yearly appraisal in the morning. My management line were not keen on virtual worlds, nor on the success, so they gave me the lowest rating they could. I went straight from that (which was very annoying and disheartening) into an interview with Paul on camera. Sometimes you just have to put up with misguided people trying to derail you; other times you just get out of the situation and go do it elsewhere.

Anyway, Minecraft offers a great deal. It does allow mirror worlds, though it also allows for an otherworldly approach. Most blocks do not obey gravity. You can build up and then a platform out with no supporting structure, and you can dig tunnels underground (the point of the mine in Minecraft) and not worry about them collapsing. You still have real world up and down, left and right though. Most virtual worlds do this. Disney Infinity, Project Spark, Second Life, Opensim and the new High Fidelity etc are all still avatars and islands at their core. People might mess with the scale and size of things, such as the OS map or the hard drive machines and guitars mentioned in this BBC piece on spectacular builds.

I feel we have another step to take in how we interact at a distance or over time with others. Persistent virtual worlds allow us to either be in them, or even better to actually be them. My analogy here is that I could invite you to my mind and my thoughts, represented in a virtual environment. It may not be that those things are actual mirrors of the real world; they might be concepts and ideas represented in all sorts of ways. It may not mean gravity and ground need to exist. We are not going to get to this until we have all been through mirror world and virtual world experiences. That is the foundation of understanding.

Whilst many people got the virtual worlds of Second Life et al back in 2006, we were only continuing the ground preparation of all the other virtual world pioneers before us. Minecraft is the first experience to really lay the foundations (all very serious, but it is fun to play with too!). These simple 8 bit style virtual bricks are the training ground for the next wave of virtual worlds, user generated content and our experiences of one another’s ideas. They may be mirror worlds, and those have great value. There is no point in me building an esoteric hospital experience when we need to have real examples to train on. However, there is a point to having a stylised galleon floating in space as the theme for the group counselling experience we created.

The test for the naysayers of “it looks like a game” is really dying off now, it seems. It will instead be “it doesn’t look like the right sort of game”. This is progress, and I still get a rush when I see people understand the potential we have to connect and communicate in business, art, education or just good old entertainment. We could all sit and scoff and say yeah, we’ve done that already, yes, we know that etc. I am less worried about that. There might be a little voice in me saying I told you so, or wow, how wrong were you to try and put a stop to this, you muppets, but it is a very quiet voice. I want everyone to feel the rush of potential that I felt when it clicked for me, whatever their attitude was before. So bring it on 🙂