Way back in the dim and distant era of 2009 I was exploring a lot of tools to help me build virtual environments with avatars and characters that could be animated, typically in Unity. 3D modelling is an art in its own right, and rigging those models for animation and then applying animations to them is another set of skills too. At the time I was exploring Evolver, which in the end was bought by Autodesk in 2011. A decade on and there is a new kid on the block from Epic/Unreal called MetaHuman. This cloud-based application (where the user interface is also cloud streamed) runs in a browser and produces incredibly detailed digital humans. The animation rigging of the body is joined by very detailed facial feature rigging, allowing these characters to be controlled with full motion capture, live in the Unreal development environment. Having mainly used Unity, I found a lot of similarity in the high-level workflow experience of the two, as they are the leading approaches to assembling all sorts of game, film and XR content. However, there was a bit of a learning curve.

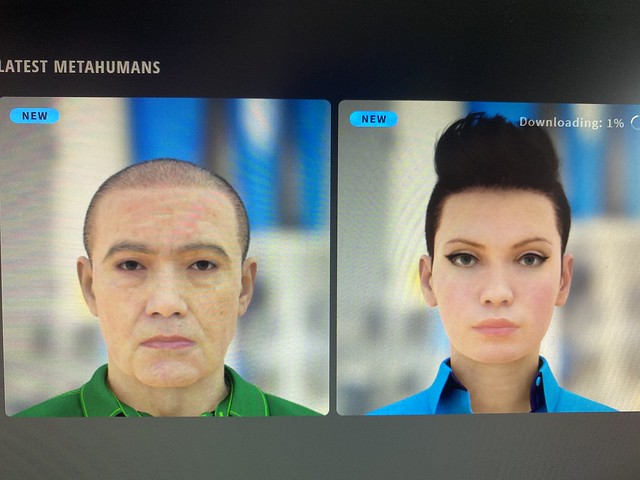

I decided to generate a couple of characters and ended up making what to me feels like Roisin and the Commander from my Reconfigure novels. Creating them in the tool plays instant animations and expressions on the faces, which is really quite a surreal experience. I installed Unreal on my gaming rig, with its RTX gfx card and all the trimmings, and set about seeing what I needed to do to get my characters working.

First there is an essential application called Quixel Bridge that would have been really handy a decade ago, as it brokers the transfer of file formats between systems. Despite standards being in place, there are some quirks when you move complex 3D rigged assets around. Quixel Bridge can log directly into the MetaHuman repository and there is a specific plugin for the editor to import the assets into Unreal. Things in Unreal have a data and control structure called a blueprint, a kind of configuration and flow model that can be used in a no-code (but still complex) way to get things working. You can still write C++ if needed, of course.

My first few attempts to get Roisin to download failed as the beta was clearly very popular. I only took a photo of the screen rather than a screen cap, so it is a bit low quality, but there is more to come.

However, eventually I got a download and the asset was there and ready to go. Unreal has a demo application with two MetaHumans in it, showing animation, lip syncing and some nice camera work. Running this on my machine was a sudden rush to the future from my previous efforts with decade-old tech for things such as my virtual hospitals and the stuff on the TV slot I had back then.

The original demo video went like this

Dropping into the editor, and after a lot of shader compilation, I swapped Roisin in for the first MetaHuman by matching the location coordinates and moving the original away. Then in the Sequencer, the film events list, I swapped the target actor from the original to mine and away we go.

This was recorded without the sound as I was just getting to grips with how to render a movie, rather than playing or compiling an application and then screen capping it. Short and sweet, but it proves it works. A very cool bit of tech.

I also ran the still image through the amusing AI face animation app Wombo.AI. This animates stills, unlike the above, which is animating the actual 3D model. I am not posting that as the results are short audio clips of songs and the old DMCA takedown bots tend to get annoyed at such things.

Now I have a plan/pipe dream to see if I can animate some scenes from the books, if not make the whole damn movie/TV series 🙂 There are lots of assets to try and combine in this generation of power tooling. I also had a go at this tutorial, one of many that show live facial animation capture via an iPhone streamed to the MetaHuman model. I will spare Roisin the public humiliation of sounding like me and instead leave it to the tutorial video for anyone wishing to try such a thing.

Let's see where this takes us all 🙂