I actually get asked a lot about how Unity3d stacks up against Opensim/Second Life. This question usually comes from wanting to use a virtual world metaphor to replicate what Opensim/Second Life do, but with a visually very rich browser-based client such as Unity3d.

There is an immediate clash of ideas here, though, and it takes a degree of understanding to see that Unity3d is not comparable in the usual sense with Second Life/Opensim.

At its very heart you really have to consider Opensim and Second Life as being about the server, which happens to have a client to look at it. Unity3d is primarily a client that can talk to other things such as servers, but it does not have to do so to be what it needs to be.

Now this is not a 100% black and white description but it is worth taking these perspectives to understand what you might want to do with either type of platform.

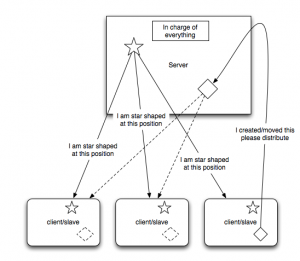

Everything from an Opensim-style server is sent to all the clients that need to know: the shapes, the textures, the position of people etc. When you create things in SL you are really telling a server to remember some things and then distribute them. Clearly some caching occurs, as everything is not sent every time, but as the environment is designed to be constantly changing in every way it has to be down to the server to be in charge.

Now compare this to a “level” created in Unity3d. Typically you build all the assets into the unity3d file that is delivered to the client, i.e. it's a standalone, fully interactive environment. That may be space invaders, car racing, an FPS or an island to walk around.

Each person has their own self contained highly rich and interactive environment, such as this example. That is the base of what Unity3d does. It understands physics, ragdoll animations, lighting, directional audio etc. All the elements that make an engaging experience with interactive objects and good graphic design and sound design.

Now as unity3d is a container for programming it is able to use network connectivity to talk to other things. Generally this is brokered by a type of server. Something has to know that 2, 3 or many clients are in some way related.

The simplest example is the Smartfox server multiplayer island demo.

Smartfox is a state server. It remembers things, and knows how to tell other things connected to it that those things have changed. That does not mean it will just know about everything in a unity3d scene. It is down to developers and designers to determine what information should be shared.

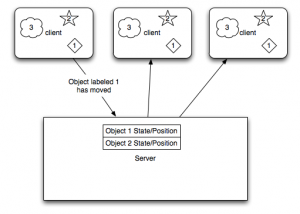

In the case above a set of unity clients all have objects numbered 1, 2 and 3 in them. It may be a ball, a person and a flock of birds in that order.

When the first client moves object number 1, smartfox (running on your own remote web server somewhere in the ether) is just told some basic information about the state of that ball: it's not here now, it's there. Each of the other unity clients is connected to the same context. Hence they are told by the server to find object number 1 and move it to the new position. Now in gaming terms each of those clients might be a completely different view of the shared system. The first 2 might be a first person view, the third might be a 2d top down map view which has no 3d element to it at all. All they know is that the object they consider to be object number 1 has moved.

In addition object number 3 in this example never shares any changes with the other clients. The server does not know anything about it and in the unity3d client it claims no network resources.
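The arrangement in the diagram can be sketched in a few lines. This is only an illustration in plain Python, not the Smartfox API: the `StateServer` and `Client` classes, their method names and the object ids are all made up for the example, and a real server would do this over a network connection.

```python
from dataclasses import dataclass, field


@dataclass
class Client:
    """Stand-in for one unity client; it only records what it has been told."""
    name: str
    positions: dict = field(default_factory=dict)  # object id -> (x, y, z)

    def on_update(self, object_id, position):
        # How the object is drawn (first person, 2d map...) is up to the client.
        self.positions[object_id] = position


class StateServer:
    """Hypothetical state server: remembers shared objects and relays changes."""

    def __init__(self, shared_ids):
        self.shared_ids = set(shared_ids)  # what the designers chose to share
        self.state = {}                    # object id -> last known position
        self.clients = []

    def join(self, client):
        self.clients.append(client)
        # Bring the newcomer up to date with what the server remembers.
        for object_id, position in self.state.items():
            client.on_update(object_id, position)

    def move(self, sender, object_id, position):
        if object_id not in self.shared_ids:
            return False  # local-only object, e.g. the flock of birds
        self.state[object_id] = position
        for client in self.clients:
            if client is not sender:
                client.on_update(object_id, position)
        return True


server = StateServer(shared_ids={1, 2})  # ball and person; the birds (3) stay local
a, b, c = Client("first person A"), Client("first person B"), Client("top down map")
for client in (a, b, c):
    server.join(client)

a.positions[1] = (5.0, 0.0, 2.0)   # client A moves the ball locally...
server.move(a, 1, a.positions[1])  # ...and tells the server its new state
a.positions[3] = (9.0, 4.0, 9.0)   # the flock moves on A only
server.move(a, 3, a.positions[3])  # not shared, so nothing is relayed
```

After this runs, B and C both hold the new position for object 1, while neither of them (nor the server) knows anything about object 3.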

This sort of game object is one that is about atmosphere, or one that has no real need to waste network resources sending changes around. In the island example from unity3d this is a flock of seagulls on the island. They are a highly animated, highly dynamic flock of birds, with sound, yet technically in each client they are not totally the same.

(Now SL and Opensim use this principle for things such as particles and clouds, but that is designed in.)

Each user merely sees and hears seagulls, so they have a degree of shared experience.

Games constantly have to balance the lag and data requirements of sending all this information around versus things that add to the experience. If multiplayer users need to have a common point of reference and it needs to be precise then it needs to be shared. For example, in a racing game the track does not change for each person. However, debris and the position of other cars do.

In dealing with a constantly changing environment, unity3d is able to be told to dynamically load new scenes and new objects in that scene, but you have to design and decide what to do. Typically things are in the scene but hidden, or generated procedurally, e.g. the flock of seagulls copies the seagull object and puts it in the flock.
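As a rough sketch of that procedural idea, here is the flock built by copying one template seagull. It is plain Python rather than Unity code, and the `Seagull` class, `spawn_flock` function and the numbers are invented for illustration. Each client uses its own random generator, which is why the flocks look alike without being identical.

```python
import copy
import random
from dataclasses import dataclass


@dataclass
class Seagull:
    position: tuple = (0.0, 0.0, 0.0)
    sound: str = "squawk"


def spawn_flock(template, count, centre, spread, rng):
    """Copy the template `count` times, scattering the copies around `centre`."""
    flock = []
    for _ in range(count):
        bird = copy.copy(template)  # the template itself is left untouched
        bird.position = tuple(c + rng.uniform(-spread, spread) for c in centre)
        flock.append(bird)
    return flock


# Two clients build "the same" flock locally, with nothing sent over the network.
flock_on_client_a = spawn_flock(Seagull(), 5, (10.0, 20.0, 10.0), 3.0, random.Random(1))
flock_on_client_b = spawn_flock(Seagull(), 5, (10.0, 20.0, 10.0), 3.0, random.Random(2))
```

Both clients end up with five seagulls circling roughly the same spot, but the individual birds are in different places on each one.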

One of the elements of dealing with the network lag in shuffling all this information around is interpolation. Again in a car example, typically if a car is travelling north at 100 mph then if the client does not hear anything about the car position for a few milliseconds it can guess where the car should be.

Very often virtual worlds people will approach a game client expecting a game engine to be the actual server, packaged up; likewise game-focused people will approach virtual worlds as a client, not a server.
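The guess in the car example is often called dead reckoning (strictly speaking it is an extrapolation from the last known state). A minimal sketch, assuming the client keeps the last position, velocity and timestamp it was told about and that the car holds a constant velocity in between; the names here are illustrative, not from any particular engine.

```python
from dataclasses import dataclass


@dataclass
class LastKnownState:
    position: tuple   # (east, north) in metres at the last update
    velocity: tuple   # (east, north) in metres per second
    timestamp: float  # seconds, when the update was received


def predict_position(state, now):
    """Guess where the object is at time `now`, assuming constant velocity."""
    elapsed = max(0.0, now - state.timestamp)
    return tuple(p + v * elapsed for p, v in zip(state.position, state.velocity))


MPH_TO_MPS = 0.44704  # 1 mile = 1609.344 m, 1 hour = 3600 s

# A car heading north at 100 mph, last heard from at t = 10.0 s.
car = LastKnownState(position=(0.0, 0.0),
                     velocity=(0.0, 100 * MPH_TO_MPS),
                     timestamp=10.0)

# 50 ms later no update has arrived, so the client guesses.
guess = predict_position(car, now=10.05)
```

With those numbers the client would draw the car a little over 2 metres further north. When the next real update arrives the client replaces the guess, usually easing towards the true position rather than snapping to it.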

Now as I said this is not black and white, but opensim and secondlife and the other virtual world runnable services and toolkits are a certain collection of middleware to perform a defined task. Unity3d is a games development client that with the right programmers and designers can make anything, including a virtual world.

*Update: I meant to link this in the post but hit send too early (thanks Jeroen Frans for telling me 🙂).

Rezzable have been working on a unity3d client with opensim, specifically trying to extract the prims from opensim and create unity meshes.

Unity3d and voice is another question. Even in SL and Opensim voice is yet another server; it just so happens that who is in the voice chat with you is brokered by the main server. Hence when comparing to unity3d again, you need a voice server, and you need to programmatically hook in what you want to do with voice.

As I have said before though, and as is already happening to some degree, some developers are managing to blend things such as the persistence of the opensim server with a unity3d client.

Finally, in the virtual world context, in trying to compare a technology or set of technologies we actually have a third model of working. It is a moderately philosophical point, but trying to use virtual worlds to create mirror worlds at any level will suffer from the model we are basing it on, namely the world. The world is not really a server and we are not really clients. We are all in the same ecosystem; what happens for one happens for all.