It is not very often I get to write in much detail about some of the work that I do, as often it is within other projects and not always for public consumption. My recent MMIS (Multidisciplinary Major Incident Simulator) work for Imperial College London, and in particular for Dave Taylor, is something I am able to talk about. I have known Dave for a long while through our early interactions in Second Life, when I was at my previous company being a Metaverse Evangelist and he was at NPL. Since then we have worked together on a number of projects, along with the very talented artist and 3D modeller Robin Winter. If you do a little digging you will find Robin has been a major builder of some of the most influential places in Second Life.

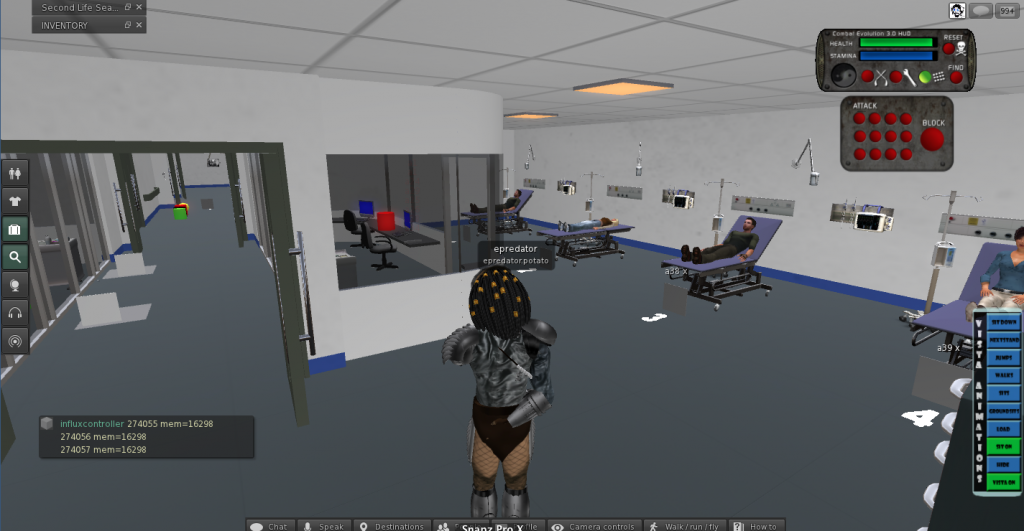

Our brief for this project was to create a training simulation to deal with a massive influx of patients to an already nearly full hospital. The aim is that several people running different areas of the hospital have to work together to make space and move patients around to deal with the influx of new patients. It is about human cooperation and following protocol to reach as good an answer as possible. We also had a design principle/joke of “No Zombies”.

Much of this sort of simulation happens around a desk at the moment, in a more role play, D&D type of fashion. That sort of approach offers a lot of flexibility to change the scenario and to throw in new things. In moving to an online virtual environment version of the same simulation activity we did not want to lose that flexibility.

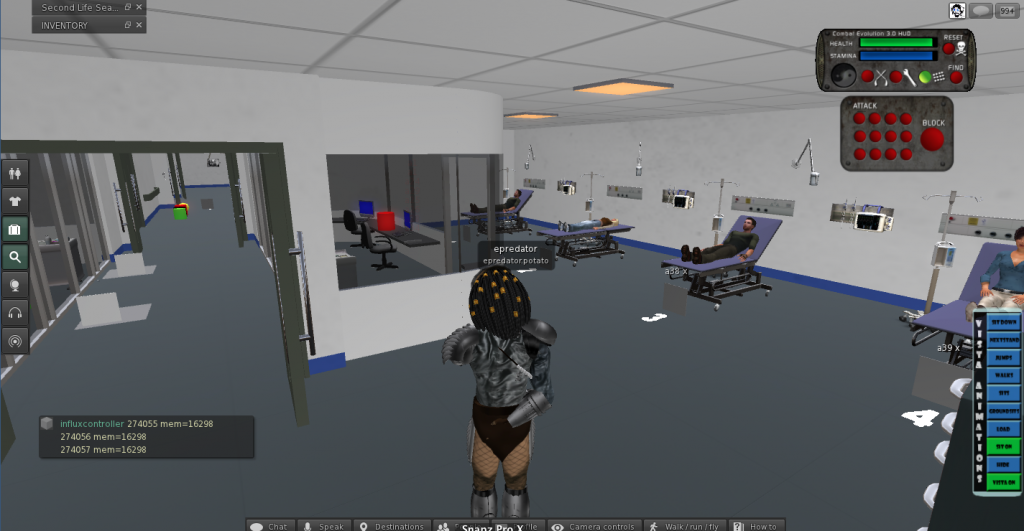

Initially we prototyped the whole thing in Second Life. Robin built a two floor hospital and created the atmosphere and visual triggers that participants would expect in a real environment.

Note this already moves on from sitting around a table focussing on the task and starts to place it in context. However also something to note is that the environment and creation of it can become a distraction from the learning and training objective. It is a constant balance between real modelling, game style information and finding the right triggers to immerse people.

For me the challenge was how to manage an underlying data model of patients in beds in places, and of inbound patients, with a simple enough interface to allow bed management to be run by people in world. An added complication was that specific timers and delays needed to be in place. Each patient may take more or less time to be moved depending on their current treatment. So you need to be able to request that a patient is moved, but you then may have to wait a while until the bed is free. Incoming patients to a bed also have a certain time to be examined and dealt with before they can possibly be moved again.

A more traditional object orientated approach might be for each patient to hold their own data and timings, but I decided to centralise the data model for simplicity. The master controller in world decided who needed to change where and sent a message to any interested party to do what they needed to do. That meant the master controller held the data for the various timers on each patient and acted as the state machine for the patients.
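As a rough illustration of that centralised approach, here is a minimal Python sketch. It is not the LSL or C# from the project: the state names, the `Patient` fields and the listener callback signature are all assumptions made up for the example. The point is only that one controller owns every patient record and every state change, and anything interested is simply told about it.

```python
from dataclasses import dataclass
from enum import Enum, auto


class State(Enum):
    # Hypothetical states; the real simulation's states are not listed in the post.
    WAITING = auto()     # arrived, not yet in a bed
    OBSERVING = auto()   # in a bed, being examined
    READY = auto()       # free to be moved again
    MOVING = auto()      # move requested, delay running


@dataclass
class Patient:
    patient_id: str
    bay: str
    state: State = State.WAITING
    seconds_left: float = 0.0  # timer held centrally, not by the patient object


class MasterController:
    """Owns all patient data and is the only place state is changed."""

    def __init__(self):
        self.patients = {}
        self.listeners = []  # callables taking (patient_id, state, bay)

    def add_patient(self, patient):
        self.patients[patient.patient_id] = patient

    def set_state(self, patient_id, state, seconds=0.0, bay=None):
        patient = self.patients[patient_id]
        patient.state = state
        patient.seconds_left = seconds
        if bay is not None:
            patient.bay = bay
        # Tell every interested party; they decide what to do about it.
        for listener in self.listeners:
            listener(patient_id, state, patient.bay)


controller = MasterController()
events = []
controller.listeners.append(lambda pid, state, bay: events.append((pid, state.name, bay)))
controller.add_patient(Patient("P01", "AE-1"))
controller.set_state("P01", State.OBSERVING, seconds=120)
```

Because the listeners are just a list, a bed, a light over the bed and a notice board can all react to the same message without the controller knowing anything about them.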

In order to have complete flexibility of hospital layout too, I made sure that each hospital bay was not a fixed point. This meant dynamically creating patients, beds and equipment at the correct point in space in world. I used the concept of a spawn point. Uniquely identified bay names placed as spawn points around the hospital meant we could add and remove bays and change the hospital layout without changing any code, making this as data driven as possible. Multiple scenarios could then be defined with different bay layouts and hospital structure, with different types of patients and time pressures, again without changing code. The ultimate aim was to be able to generate a modular hospital based on the needs of the scenario. We stuck to the basics though, of a fixed environment (as it was easy to move walls and rooms manually in Second Life), with dynamic bays that rezzed things in place.
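The spawn point idea can be sketched in a few lines of Python. This is an illustration only; `SpawnPoint`, `build_layout` and the bay names are hypothetical, and the real version lived in Second Life objects rather than in a list. The scenario refers to bays by name, and the layout is whatever spawn points happen to exist with those names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpawnPoint:
    bay_name: str      # unique name, e.g. "AE-1"
    position: tuple    # (x, y, z) in world space


def build_layout(spawn_points, scenario_bays):
    """Map each bay named by the scenario to the position of its spawn point."""
    by_name = {sp.bay_name: sp for sp in spawn_points}
    missing = [name for name in scenario_bays if name not in by_name]
    if missing:
        # A scenario that names a bay nobody placed is a data error, not a code change.
        raise KeyError(f"scenario refers to bays with no spawn point: {missing}")
    return {name: by_name[name].position for name in scenario_bays}


placed = [
    SpawnPoint("AE-1", (10.0, 4.0, 0.5)),
    SpawnPoint("AE-2", (12.5, 4.0, 0.5)),
    SpawnPoint("WARD-1", (30.0, 8.0, 4.5)),
]
layout = build_layout(placed, ["AE-1", "WARD-1"])
```

Adding a bay then means placing another named spawn point and mentioning it in the scenario data.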

This meant I could actually build the entire thing in an abstract way on my own plot of land, also as a backup.

I love to use shapes to indicate the function of something in context. The controller is the box in this picture. The wedge shape is the data. They are close to one another physically. The tori are the various bays and beds. The flat planes represent the white board. They are grouped in order and in place. You can be in the code and think about object responsibility through shape and space. It may not work for everyone but it does for me. The controller has a lot of code in it that also has a more traditional structure. One day it would be nice to be able to see and organise that in a similar way.

This created a challenge in Second Life as there is only actually 64k of memory available to each script. Here I had a script dealing with a variable number of patients, around 50 or so. Each patient needed data for several timer states and some identification and description text. Timers in Second Life are a one per script sort of structure, so instead I had to use a timer loop to update all the individual timers and check for timeouts on each patient. Making the code nice and readable with lots of helper functions proved not to be the ideal way forward. The overhead of tidiness was more bytes in the stack getting eaten up. So gradually the code was hacked back to being inline operations of common functions. I also had to initiate a lookup in a separate script object for the longer pieces of text, and ultimately yet another to match patients to models.
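The single timer loop looks roughly like this when sketched in Python (the original was LSL, so this is only the shape of the idea; the function name `tick` and the dictionary layout are assumptions). One real timer fires, and each firing walks every patient's countdown.

```python
def tick(timers, elapsed):
    """Advance every patient's countdown by `elapsed` seconds.

    `timers` maps patient id -> seconds remaining (0 means no timer running).
    Returns the ids whose timers ran out during this tick.
    """
    expired = []
    for patient_id, remaining in timers.items():
        if remaining <= 0:
            continue  # nothing running for this patient
        remaining -= elapsed
        timers[patient_id] = max(remaining, 0.0)
        if remaining <= 0:
            expired.append(patient_id)
    return expired


timers = {"P01": 5.0, "P02": 12.0, "P03": 0.0}
first = tick(timers, 5.0)    # P01 runs out, P02 has 7 seconds left
second = tick(timers, 10.0)  # P02 runs out
```

Each expired id is reported exactly once, which is when the controller would move that patient on to its next state.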

The prototype enabled the customers (doctors and surgeons) to role play the entire thing through and helped highlight some changes that we needed to make.

The most notable was that of indicating to people what was going on. The atmosphere of pressure in the situation is obviously key. Initially the patients arriving at the hospital were sent directly to the ward or zone that was indicated in the scenario configuration. This meant I had to write a way to find the next available / free bed in a zone. This also had to be generic enough to deal with however many beds had been defined in the dynamic hospital. Patients arrived, neatly assigned to various beds. Of course as a user of the system this was confusing. Who had arrived where? Teleporting patients into bays is not what normally happens. To try and help I added non real world indicators, lights over beds etc., that meant a glance across a ward could show new patients that needed to be dealt with.
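Finding the next free bed in a zone is a simple search once the beds are just data. A minimal Python sketch, assuming each bed is a small record with a zone, a name and an occupant (the field names are invented for the example):

```python
def next_free_bed(beds, zone):
    """Return the first unoccupied bed in `zone`, or None if the zone is full.

    `beds` is a list of dicts in layout order with 'zone', 'name' and 'patient' keys.
    It works for however many beds the scenario happens to define.
    """
    for bed in beds:
        if bed["zone"] == zone and bed["patient"] is None:
            return bed
    return None


beds = [
    {"zone": "AE", "name": "AE-1", "patient": "P01"},
    {"zone": "AE", "name": "AE-2", "patient": None},
    {"zone": "WARD", "name": "WARD-1", "patient": "P02"},
]
free_in_ae = next_free_bed(beds, "AE")
free_in_ward = next_free_bed(beds, "WARD")
```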

If a patient arrived automatically but there was no bed, they were placed on one of two beds in the corridor. A sort of visual priority queue. That was a good mechanism to indicate overload and pressure for the exercise. However we were left with the problem of what happened when that queue was full. The patients had become active and arrived in the simulation but had nowhere to go. This of course in game terms is a massive failure, so I treated it as such and held the patients in an invisible error ward but put out a big chat message saying this patient needed dealing with.
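The fallback chain described above (bed, then corridor, then error ward with a loud message) can be sketched like this in Python. The function and parameter names are hypothetical; only the two corridor beds and the overall behaviour come from the description.

```python
CORRIDOR_CAPACITY = 2  # the two corridor beds mentioned in the text


def place_arrival(patient_id, free_beds, corridor, error_ward, announce):
    """Decide where an arriving patient goes and return that location.

    free_beds  : list of free bed names in the target zone (first is used)
    corridor   : list acting as the visible overflow queue
    error_ward : list holding patients that have nowhere to go
    announce   : callable used for the "big chat message"
    """
    if free_beds:
        return free_beds.pop(0)
    if len(corridor) < CORRIDOR_CAPACITY:
        corridor.append(patient_id)
        return "corridor"
    error_ward.append(patient_id)
    announce(f"Patient {patient_id} has no bed and needs dealing with")
    return "error"


free, corridor, errors, messages = ["AE-2"], [], [], []
results = [
    place_arrival(pid, free, corridor, errors, messages.append)
    for pid in ["P10", "P11", "P12", "P13"]
]
```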

I felt it was too clunky to have to walk around the ward keeping an eye out, so as I had a generic messaging system that told patients and beds where to be, I was able to make more than one object respond to a state change. This led to a quick build of a notice board in the ward. At a glance, red, green and yellow status on beds could be seen. Still, I was not convinced this was the right place for that sort of game style pressure. It needed a different admissions process, one that was controlled by the ward owners. They would still need to be able to say “yes, bring them in to the next available bed” (so my space finding code would still work) or direct a patient to a bed.

The overall bed management process once a patient was “in” the hospital

The prototype led to the build of the real thing. It was a natural path of migration to Unity3d, as I knew we could efficiently build the same thing in a way that meant we could then simply use web browsers to access the hospital. I also knew that using Exit Games Photon Server I could get client applications talking to one another in sync. From a software engineering point of view I knew that in C# I could create the right data structures and underlying model to be able to replicate the Second Life version but in a much better code structure. It also meant I could initiate a lot more user interface elements more simply, as this was a dedicated client for this application. HUDs work in Second Life for many things, but ultimately you are trying to not make things happen; you don’t want people building or moving things etc. In a fixed and dedicated Unity client you can focus on the task. Of course Second Life already had a chat system and voice, so there were clearly a lot of extra things to build in Unity, but there is more than one way to skin a virtual cat.

The basic hospital and patient bed spawning points connected via Photon in Unity were actually quite quick to put together, but as ever the devil is in the detail. Second Life is a server based application that clients connect to. In Unity you tend to have one of the clients as a server, or you have each client responsible for something and let the others take a ghosted synchronisation. Or a mixture, as I ended up with. Shared network code is complicated. Understanding who has control and responsibility for something, when it is distributed across multiple clients, takes a bit of thought and attention.

The easiest one is the player character. All the Unity and Photon examples work pretty much the same way. Using the Photon Toolkit you can instantiate a Unity object on a client and have that client as the owner. You then have the parameters or data that you want to synchronise defined in a fairly simple interface class. The code for objects has two modes that you cater for. The first is being owned and moved around, the other is being a ghosted object owned by someone else, just receiving serialised messages about what to do. There are also RPC calls that can be made asking objects on other clients to do something. This is the basis for everything shared across clients.
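The two modes are easier to see in code than in words. The sketch below is plain Python and deliberately does not use Photon's API; `SyncedAvatar`, `is_mine`, `serialize` and `apply` are names invented for the illustration, and the "network" is just a dictionary passed from one object to another.

```python
class SyncedAvatar:
    """One shared object as seen by one client: either the owner or a ghost."""

    def __init__(self, is_mine):
        self.is_mine = is_mine
        self.position = (0.0, 0.0, 0.0)

    def move(self, position):
        # Owned mode: only the owning client drives the object.
        if not self.is_mine:
            raise PermissionError("only the owner may move this object")
        self.position = position

    def serialize(self):
        # What the owner sends out each update.
        return {"position": self.position}

    def apply(self, message):
        # Ghost mode: just follow what the owner says.
        if self.is_mine:
            return  # the owner ignores echoes of its own state
        self.position = tuple(message["position"])


owner_copy = SyncedAvatar(is_mine=True)    # on the client that created it
ghost_copy = SyncedAvatar(is_mine=False)   # the same avatar on another client
owner_copy.move((3.0, 0.0, 7.5))
ghost_copy.apply(owner_copy.serialize())
```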

For the hospital though I needed a large control structure that defined the state of all the patients and things that happened to them. It made sense to have the master controller built into the master client. In Unity and Photon the player that initiates a game and allows connection of others is the master. Helpfully there are data properties you can query to find this sort of thing out. So you end up with lots of code that is “if master do x else do y”.

Whoever initiates the scenario then becomes the admin for these simulations. This became a helpful differentiation. I was able to provide some overseeing functions, and some start, stop and pause functions, only to the admin. This was something that was a bit trickier in SL but just became a natural direction in Unity/Photon.

One of my favourite admin functions was to just turn off all the walls just for the admin. Every other client is able to still see the walls but the admin has super powers and can see what is going on everywhere.

This is a harder concept for people to take in when they are used to Second Life or have a real world model in their minds. Each client can see or act on the data however it chooses. What you see in one place does not have to be an identical view to others. Sometimes that fact is used to cheat in games, removing collision detection or being able to see through walls. However here it is a useful feature.

This formed the start of an idea for some more non real world admin functions to help monitor what is going on, such as camera textures that let you see things elsewhere. As an example, wards can be looked at as top down 2D or more like a CCTV camera seeing in 3D. Ideally the admin is really detached from the process. They need to see the mechanics of how people interact, not always be restricted to the physical space. Participants however need to be restricted in what they can see and do in order to add the elements of stress and confusion that the simulation is aiming for.

Unity gave me a chance to redesign the patient arrival process. Patients did not just arrive in the bays; instead I put up a simple window of arrivals, showing patient numbers and where they were supposed to be heading. This seemed to help, though a very simple technique, in giving all participants a general awareness that things were happening. Suddenly 10-15 entries arriving in quick succession, seemingly at the door to the hospital, triggers more awareness than lights turned on in and around beds. The lights and indicators were still there, as we still needed to show new patients and ones that were moving. When a patient was admitted to a bed I put in the option to specify a bed or to just say next available one. In order to know where the patient had gone, the observation phase is now additionally indicated by a group of staff around the patient. I had some grand plans using the full version of Unity Pro to use the path finding code to have non player character (NPC) staff dash towards the beds and to have people walking to and fro around the hospital for atmosphere. This turned out to be a bit too much of a performance hit for lower spec machines, though it is back on the wish list. It was fascinating seeing how the pathfinding operated. You are able to define buffer zones around walls and indicate what can be climbed or what needs to be avoided. You can then tell an object an end point and off it goes, dynamically recalculating paths, avoiding things and dealing with collision, giving doors enough room and, if you do it right (or wrong), leaping over desks, beds and patients to get to where they need to go 🙂

One of the biggest challenges was that of voice. Clearly people needed to communicate; that was the purpose of the exercise. I used a voice package that attempted to package messages across the network using Photon. This was not spatial voice in the same way people were used to with Second Life. However I made some modifications, as I already had roles for people in the simulation. If you were in charge of A&E I had to know that. So role selection became an extra feature not used in SL, where it was implied. This meant I could alter the voice code to deal with channels. A&E could hear A&E. Also the admin was able to act as a tannoy and be heard by everyone. This also then started to double up as a phone system. A&E could call the Operating Theatre channel and request they take a patient. Initially this was a push to talk system. I made the mistake of changing it to an open mic. That meant every noise or sound made was constantly sent to every client, and the channel implementation meant the receiving code chose to ignore things not meant for it. This turned out to (obviously) massively swamp the Photon server when we had all our users online. So that needs a bit of work!
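To make the swamping problem concrete, here is a small Python sketch of the difference between sending audio to everyone and letting them ignore it, versus working out the recipients before sending. This is one possible direction rather than what the project did, and the `recipients` function and role names are assumptions for the example.

```python
def recipients(sender, roles, tannoy=False):
    """Return the clients that should actually receive the sender's audio.

    `roles` maps client id -> channel name (their role in the simulation).
    A tannoy message goes to everyone except the sender.
    """
    if tannoy:
        return [client for client in roles if client != sender]
    channel = roles[sender]
    return [
        client for client, ch in roles.items()
        if ch == channel and client != sender
    ]


roles = {
    "anna": "A&E",
    "ben": "A&E",
    "cara": "Theatre",
    "dev": "Theatre",
    "admin": "Admin",
}
ae_only = recipients("anna", roles)
everyone = recipients("admin", roles, tannoy=True)
```

With five clients, an open mic that broadcasts and filters on arrival sends four packets per audio frame per speaker, while routing by channel sends one for Anna. The gap grows with every extra participant.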

Another horrible gotcha was that I needed to log data. Who did what when was important for the research. As this was in Unity I was able to create those logging structures and their context. However, because we were in a web browser I was not able to write to the file system. So a next best solution was to have a logging window for the admin that they could at least cut and paste all the log from. (This was to avoid having to set up another web server and send the logs to it over HTTP, as that was added overhead for the project to manage.) I created the log data and a simple text window that all the data was pumped to. It scrolled and you could get an overview and also simply cut and paste. I worked out the size was not going to break any data limits, or so I thought. However in practice this text window stopped showing anything after a few thousand entries. Something I had not tested far enough. It turns out that text is treated the same as any other vertex based object and there are limits to the number of vertices an object can have. So it stopped being able to draw the text, even though lots of it was scrolled off screen. It meant the definition of the object had become too big, i.e. this isn’t like a web text window. It makes sense but it did catch me out as I was thinking it was “just text”.
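One way to think about that gotcha is to separate the log you keep from the log you draw. The Python sketch below is a hypothetical illustration of that split, not the fix used in the project; `LogWindow` and its `visible_lines` default are made up for the example.

```python
class LogWindow:
    """Keeps every entry for export but only hands a recent slice to the renderer."""

    def __init__(self, visible_lines=200):
        self.entries = []
        self.visible_lines = visible_lines  # assumed safe size for the drawn text

    def add(self, entry):
        self.entries.append(entry)

    def visible_text(self):
        # Only this text would be turned into on-screen geometry.
        return "\n".join(self.entries[-self.visible_lines:])

    def export_text(self):
        # The full log for cut and paste, never drawn in one go.
        return "\n".join(self.entries)


log = LogWindow(visible_lines=3)
for i in range(1, 6):
    log.add(f"entry {i}")
shown = log.visible_text()
full = log.export_text()
```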

An interesting twist was the generic noticeboard that gave an overview of the dynamic patients in the dynamic wards. This became a bigger requirement than the quick extra helper in Second Life. As a real hospital would have a whiteboard, with patient summary and various notes attached to it, it made sense to build one. This meant that you would be able to take some notes about a patient, or indicate they needed looking at or had been seen. It sounds straightforward but the note taking turned out to be a little more complicated. Bear in mind this is a networked application: multiple people can have the same noticeboard open, yet it is controlled by the master client. Typing in notes needed to be updated in the model and changes sent to others. Yes, it turned out I was rewriting Google Docs! I did not go quite that far but did have to indicate if someone had edited the things you were editing too.
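A common way to spot that "someone edited this while you were editing" situation is a version number on each note. The sketch below uses that technique as an assumption; the post does not say how the real noticeboard detected it, and `NoteBoard` and its methods are invented names.

```python
class NoteBoard:
    """Held by the master client; each note carries a version counter."""

    def __init__(self):
        self.notes = {}  # patient id -> (version, text)

    def read(self, patient_id):
        return self.notes.get(patient_id, (0, ""))

    def write(self, patient_id, base_version, text):
        """Try to save `text` that was edited starting from `base_version`.

        Returns (accepted, current_version, current_text). A rejected write
        tells the editor that somebody else got there first.
        """
        version, current = self.read(patient_id)
        if base_version != version:
            return (False, version, current)
        self.notes[patient_id] = (version + 1, text)
        return (True, version + 1, text)


board = NoteBoard()
v_anna, _ = board.read("P01")   # both open the empty note at version 0
v_ben, _ = board.read("P01")
anna_result = board.write("P01", v_anna, "Seen, awaiting X-ray")
ben_result = board.write("P01", v_ben, "Needs looking at")
```

Ben's client gets the rejection along with Anna's text, so it can show a warning instead of silently overwriting her note.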

We had some interesting things relating to the visuals too. Robin had made a set of patients of various sizes, shapes and gender. However with 50 patients or so (as there can be any number defined), and each one described in text as “a 75 year old lady” etc., it was very tricky to have all the variants that were going to be needed. I had taken the view that it would have been better to have generic morph style characters in the beds to avoid content bloat. The problem with “real” characters is they have to match the text (that’s editorial control), and also you need more than one of each type. If you have a ward full of 75 year old ladies and there are only 4 models it is a massive uncanny valley hit. The problem then balloons when you start building injuries like amputations into the equation. Very quickly something that is about bed management can become about details of a virtual patient. In a fully integrated simulation of medical practice and hospital management that can happen, but the core of this project was the pressure of beds, i.e. in air traffic control terms we needed to land the planes; the type of plane was less important (though it still had a relevance).

It is always easy to lose sight of the core learning or game objective with the details of what can be achieved in virtual environments. There is a time and cost to more content, to more code. However I think we managed to get a good balance with the release we have, and now can work on the tweaks to tidy it up and make it even better.

The application has also been of interest to schools. We had it on the Imperial College stand at the Bang education festival. I had to make an offline version of this. I was hoping to simply use Unity’s publish offline web version. This is supposed to remove the need to have any network connection or plugin validation. It never worked properly for me though. It always needed network. I am not sure if anyone else is having that problem, but don’t rely on it. That meant I then had to build standalone versions for Mac and Windows. Not a huge step but an extra set of targets to keep in line. I also had to hack the code a bit. Photon is good at doing “offline” and ignoring some of the elements, but I was relying on a few things like how I generated the room name to help identify the scenario. In offline mode the room name is ignored and a generic name is put in place. Again quite understandable, but it caused me a bit of offline rework that I probably could have avoided.

In order to make it a bit more accessible Dave wrote a new scenario with some funnier ailments. This is where we broke our base design principle and yes, we put zombies in. I had the excellent Mixamo models and a free Gangnam style dance animation. Well, it would have been silly not to put them in. So in the demos, if the kids started to drift off, the “special” button could get pushed and there they were.

I have shared a bit of what it takes to build one of these environments. It has got easier, but that means the difficult things have hidden themselves elsewhere.

If you need to know more about this and similar projects, the official page for it is here

Obviously if you need something like this built, or talked about to people let me know 🙂