This weekend I was finally able to purchase a digital copy of the film version of Ready Player One. We saw it as a family at the cinema, but I had been wanting to take another look. I don’t buy many individual movies any more because, with Sky, Netflix and Amazon, things are usually easy to access as needed. It seems the US got the home release first, which is another weird thing to be doing in the 21st century. Slowly rolling out digital/streaming availability is so archaic and only really leads to piracy and lost revenue.

I had to make a choice on how to get a digital version of the movie. Do I pay Sky for its Buy and Keep, where they also send a Blu-ray to go with the download? Or buy a Blu-ray and attempt to figure out the film industry’s approach to digital, which, as with the release schedule, is pretty archaic? Instead I went for Amazon Video. We have this on all the TVs in the house, plus computers, pads and phones, alongside Netflix. The last movies I bought were also on Amazon, John Wick 2 and before that The Raid 2, so it’s ideal to have it next door to those.

I sparked up Amazon on the TV and home cinema and started to enjoy spotting even more of the references from my childhood and from growing up through the 80s as a gamer. Plus, of course, the whole depth of engagement in VR is what flows through my head most of the time as a metaverse evangelist. Really though, it is this targeted set of references and in-jokes for me and my generation of geeks that I love. Wade’s DeLorean with what seems to be a Knight Rider front grille, Duke Nukem aiming a launcher during the first battle scene, Hello Kitty and friends waddling along the bridge next to Wade, it goes on.

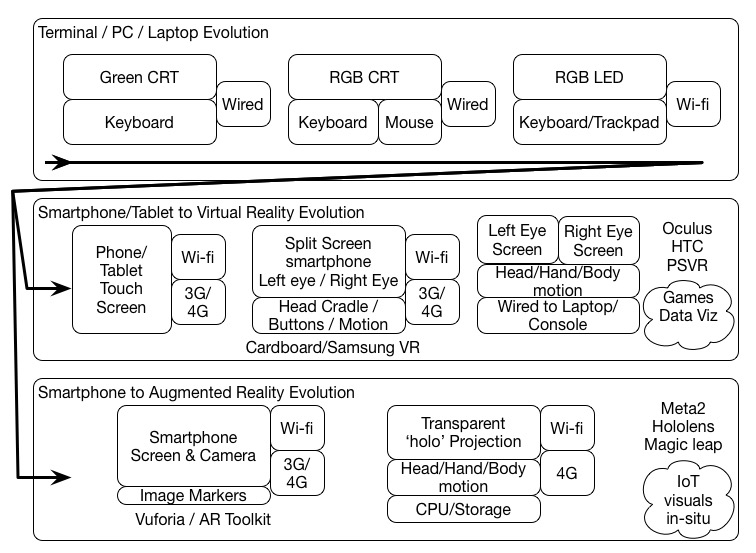

With the film being about VR though, with its headsets, suits and running walkways, I thought I should at least try to watch it on my Oculus Go. I paused the TV stream and popped on the headset that is always next to me. I hoped an Amazon app had made it to the store, but no. Instead, using the web browser, I headed to Amazon Video, logged in, found my movie and clicked play… Nothing. It was a little deflating. Then I noticed the “Use Desktop version” button in the top right and, bingo, it worked. It picked up where we left off, at the start of the race, with characters like Street Fighter’s Ryu wandering off to his car and the Akira motorcycle razzing into view. Selecting full screen gave a nice curved view of the 2D movie, big enough that I had to turn my head a little to look across the picture. Proper front row stuff. Not as immersed as the main characters are in the OASIS, but a nice blend of traditional film with new viewing tech, and all a little bit meta 🙂

Another thing I noticed, which I think I had forgotten, was that when Wade first appears he has green hair, which he then changes on the fly to show avatar customisation in the OASIS. For me that’s a big “yay”, because I spent a long while with green hair in Second Life before finding my Predator avatar. My green spiked hair was something I identified with, and it even got 3D printed. I also often referenced it in corporate presentations, as the choice to have green hair is a subtle prod at conformity and social norms, just on the border of what would be acceptable to many in an office. It is not as full-on as extreme body shapes or cartoon looks. The Predator AV took this a stage further in the conversations. I also use green hair in almost every avatar on every platform, as it’s a much quicker mental attachment than finding or trying to build a complex Yautja avatar. PS Home famously (from my point of view) did not allow green hair. Very odd!

So seeing Wade with green hair, in a movie about VR, the 80s and my generation, my ego naturally assumed that any research by the production team may, just may, have come across my green-haired metaverse evangelizing 🙂

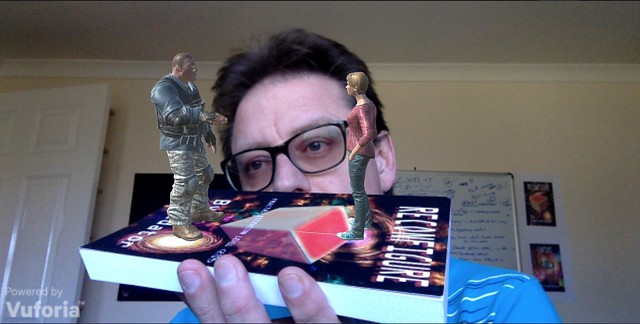

If you like Ready Player One, or don’t, you will still like my own VR and AR storytelling, with its real-world gaming and tech references, in Reconfigure and Cont3xt. It’s got a love of Marmite in it too 🙂