I like a good tech challenge, so I decided to look a bit more into the world of shaders. I had not fully appreciated how many competing languages there are for these low-level compute systems. I had used shaders in Unity3D and I knew we could do fancy things with them. They are wonderfully complicated and full of maths. As with all code, you can go native and just write the shader directly, but there are also tools to help. Things like Shadertoy let you see some of the fun that can be had. It reminds me of hacking with the Copper co-processor in the old Amiga days: low-level graphics manipulation, direct to the pipeline.

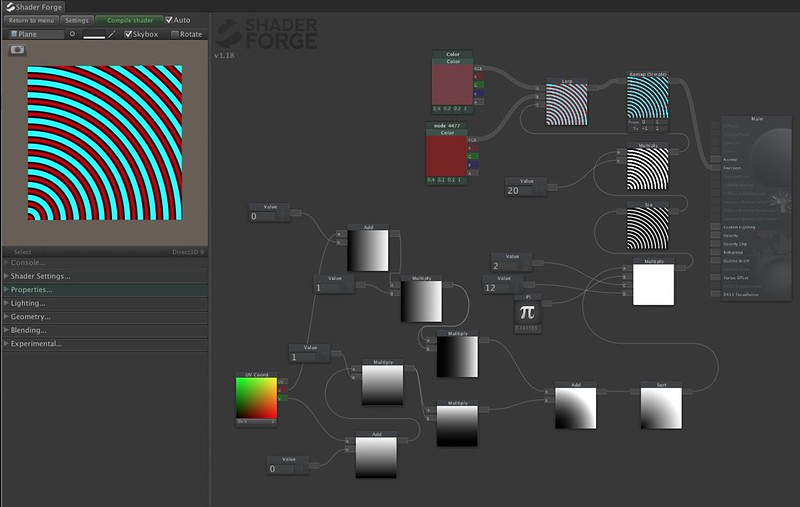

In Unity3D there is a tool I bought a while back called Shader Forge. It lets you edit shaders visually as a graph of nodes, and each little node shows a preview of the result of the maths at that point. This sort of node-based material editing is common in 3D applications. There is a lot of maths available and I am only just skimming the surface of what can be done.

I was trying to create a realistic wood ring shader. I wanted to do something like the one demonstrated here in normal code (not in any of the shader languages).

I ended up with something that looked like this.

It was nearly the concentric rings, but I could only get them to start from the bottom left corner. I have yet to work out which number I can use as an offset so that I get full circles. I have worked out where to put in variance and noise to make the lines wobble a little. So I am a little stuck; if anyone has any suggestions I would be very grateful 🙂

I want a random shader texture, slightly different each time, which is why I am not using images as I normally do. I am not worried about the colour at the moment, BTW. That is a function of scaling the mapping in the sin sweeping function that creates the ripples. I stuck in a few extra value modifiers (some set to 0) to see if I could tweak the shader to do what I wanted, but no luck yet. Shader Forge has its own metadata, but the result can be compiled into a full shader in native code.
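On the offset question, here is a minimal sketch in plain Python rather than a shader language, under a few assumptions. UV coordinates are taken to run from 0 to 1 with the origin in the bottom left corner, so measuring distance from (0, 0) shows only a quarter of each ring, while subtracting 0.5 from both coordinates first moves the ring centre to the middle of the texture. The names `ring_value`, `wobble_noise`, `rings`, `wobble` and `seed` are made up for illustration and are not Shader Forge nodes. The squares are done by plain multiplication because GLSL leaves `pow` undefined for a negative base, which may be one reason an offset fed into a power node misbehaves.

```python
import math


def wobble_noise(u, v, seed=0.0):
    """Cheap, smooth stand-in for a real noise function, in the range -1..1.

    Hypothetical helper: a shader would more likely use Perlin or value noise.
    """
    return math.sin(12.9898 * u + 78.233 * v + seed) * math.cos(4.1 * u - 7.3 * v + seed)


def ring_value(u, v, rings=10.0, wobble=0.0, seed=0.0):
    """Return a 0..1 blend factor for the UV coordinate (u, v)."""
    # Shift the origin from the bottom left corner (0, 0) to the centre (0.5, 0.5).
    du = u - 0.5
    dv = v - 0.5
    # Euclidean distance from the centre; multiply instead of pow() so that
    # negative offsets are handled safely.
    radius = math.sqrt(du * du + dv * dv)
    # Perturb the radius so the rings are not perfect circles.
    radius += wobble * wobble_noise(u, v, seed)
    # Sweep a sine wave along the radius and remap it from -1..1 to 0..1.
    return 0.5 + 0.5 * math.sin(2.0 * math.pi * rings * radius)


if __name__ == "__main__":
    # Coarse ASCII preview: '#' where the blend factor is above 0.5.
    size = 24
    for row in range(size):
        v = 1.0 - (row + 0.5) / size  # top row first, like an image
        print("".join(
            "#" if ring_value((col + 0.5) / size, v, rings=4.0) > 0.5 else "."
            for col in range(size)
        ))
```

Passing a different `seed` (with a small non-zero `wobble`) for each texture is one way to get a pattern that is slightly different each time; the returned value would then drive the blend between the two wood colours.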

Just look at what it generates; it's fairly full-on code.

So it looks like I have quite a lot of learning to do. With code there is always more, always another language or format for something 🙂

***Update*** Yay for the internet. Dickie replied to this post very, very quickly with an image of a much simpler way to generate the concentric rings. This has massively added to my understanding of the process and is very much appreciated.

// Compiled shader for Web Player, uncompressed size: 14.0KB

// Skipping shader variants that would not be included into build of current scene.

Shader "Shader Forge/wood3" {

Properties {

_Color ("Color", Color) = (0.727941,0.116656,0.0856401,1)

_node_4617 ("node_4617", Color) = (0.433823,0.121051,0.0287089,1)

_node_1057 ("node_1057", Float) = 0.2

}

SubShader {

Tags { "RenderType"="Opaque" }

// Stats for Vertex shader:

// d3d11 : 4 math

// d3d9 : 5 math

// opengl : 10 math

// Stats for Fragment shader:

// d3d11 : 9 math

// d3d9 : 15 math

Pass {

Name "FORWARD"

Tags { "LIGHTMODE"="ForwardBase" "SHADOWSUPPORT"="true" "RenderType"="Opaque" }

GpuProgramID 47164

Program "vp" {

SubProgram "opengl " {

// Stats: 10 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

"!!GLSL#version 120

#ifdef VERTEX

varying vec2 xlv_TEXCOORD0;

void main ()

{

gl_Position = (gl_ModelViewProjectionMatrix * gl_Vertex);

xlv_TEXCOORD0 = gl_MultiTexCoord0.xy;

}

#endif

#ifdef FRAGMENT

uniform vec4 _Color;

uniform vec4 _node_4617;

uniform float _node_1057;

varying vec2 xlv_TEXCOORD0;

void main ()

{

vec4 tmpvar_1;

tmpvar_1.w = 1.0;

tmpvar_1.xyz = mix (_node_4617.xyz, _Color.xyz, vec3(((

sqrt((pow ((xlv_TEXCOORD0.x + 0.2), _node_1057) + pow ((xlv_TEXCOORD0.y + 10.0), _node_1057)))

* 11.0) + -10.0)));

gl_FragData[0] = tmpvar_1;

}

#endif

"

}

SubProgram "d3d9 " {

// Stats: 5 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

Matrix 0 [glstate_matrix_mvp]

"vs_3_0

dcl_position v0

dcl_texcoord v1

dcl_position o0

dcl_texcoord o1.xy

dp4 o0.x, c0, v0

dp4 o0.y, c1, v0

dp4 o0.z, c2, v0

dp4 o0.w, c3, v0

mov o1.xy, v1

"

}

SubProgram "d3d11 " {

// Stats: 4 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

ConstBuffer "UnityPerDraw" 336

Matrix 0 [glstate_matrix_mvp]

BindCB "UnityPerDraw" 0

"vs_4_0

root12:aaabaaaa

eefiecedaffpdldohodkdgpagjklpapmmnbhcfmlabaaaaaaoeabaaaaadaaaaaa

cmaaaaaaiaaaaaaaniaaaaaaejfdeheoemaaaaaaacaaaaaaaiaaaaaadiaaaaaa

aaaaaaaaaaaaaaaaadaaaaaaaaaaaaaaapapaaaaebaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaabaaaaaaadadaaaafaepfdejfeejepeoaafeeffiedepepfceeaaklkl

epfdeheofaaaaaaaacaaaaaaaiaaaaaadiaaaaaaaaaaaaaaabaaaaaaadaaaaaa

aaaaaaaaapaaaaaaeeaaaaaaaaaaaaaaaaaaaaaaadaaaaaaabaaaaaaadamaaaa

fdfgfpfaepfdejfeejepeoaafeeffiedepepfceeaaklklklfdeieefcaeabaaaa

eaaaabaaebaaaaaafjaaaaaeegiocaaaaaaaaaaaaeaaaaaafpaaaaadpcbabaaa

aaaaaaaafpaaaaaddcbabaaaabaaaaaaghaaaaaepccabaaaaaaaaaaaabaaaaaa

gfaaaaaddccabaaaabaaaaaagiaaaaacabaaaaaadiaaaaaipcaabaaaaaaaaaaa

fgbfbaaaaaaaaaaaegiocaaaaaaaaaaaabaaaaaadcaaaaakpcaabaaaaaaaaaaa

egiocaaaaaaaaaaaaaaaaaaaagbabaaaaaaaaaaaegaobaaaaaaaaaaadcaaaaak

pcaabaaaaaaaaaaaegiocaaaaaaaaaaaacaaaaaakgbkbaaaaaaaaaaaegaobaaa

aaaaaaaadcaaaaakpccabaaaaaaaaaaaegiocaaaaaaaaaaaadaaaaaapgbpbaaa

aaaaaaaaegaobaaaaaaaaaaadgaaaaafdccabaaaabaaaaaaegbabaaaabaaaaaa

doaaaaab"

}

SubProgram "opengl " {

// Stats: 10 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

"!!GLSL#version 120

#ifdef VERTEX

varying vec2 xlv_TEXCOORD0;

void main ()

{

gl_Position = (gl_ModelViewProjectionMatrix * gl_Vertex);

xlv_TEXCOORD0 = gl_MultiTexCoord0.xy;

}

#endif

#ifdef FRAGMENT

uniform vec4 _Color;

uniform vec4 _node_4617;

uniform float _node_1057;

varying vec2 xlv_TEXCOORD0;

void main ()

{

vec4 tmpvar_1;

tmpvar_1.w = 1.0;

tmpvar_1.xyz = mix (_node_4617.xyz, _Color.xyz, vec3(((

sqrt((pow ((xlv_TEXCOORD0.x + 0.2), _node_1057) + pow ((xlv_TEXCOORD0.y + 10.0), _node_1057)))

* 11.0) + -10.0)));

gl_FragData[0] = tmpvar_1;

}

#endif

"

}

SubProgram "d3d9 " {

// Stats: 5 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

Matrix 0 [glstate_matrix_mvp]

"vs_3_0

dcl_position v0

dcl_texcoord v1

dcl_position o0

dcl_texcoord o1.xy

dp4 o0.x, c0, v0

dp4 o0.y, c1, v0

dp4 o0.z, c2, v0

dp4 o0.w, c3, v0

mov o1.xy, v1

"

}

SubProgram "d3d11 " {

// Stats: 4 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

ConstBuffer "UnityPerDraw" 336

Matrix 0 [glstate_matrix_mvp]

BindCB "UnityPerDraw" 0

"vs_4_0

root12:aaabaaaa

eefiecedaffpdldohodkdgpagjklpapmmnbhcfmlabaaaaaaoeabaaaaadaaaaaa

cmaaaaaaiaaaaaaaniaaaaaaejfdeheoemaaaaaaacaaaaaaaiaaaaaadiaaaaaa

aaaaaaaaaaaaaaaaadaaaaaaaaaaaaaaapapaaaaebaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaabaaaaaaadadaaaafaepfdejfeejepeoaafeeffiedepepfceeaaklkl

epfdeheofaaaaaaaacaaaaaaaiaaaaaadiaaaaaaaaaaaaaaabaaaaaaadaaaaaa

aaaaaaaaapaaaaaaeeaaaaaaaaaaaaaaaaaaaaaaadaaaaaaabaaaaaaadamaaaa

fdfgfpfaepfdejfeejepeoaafeeffiedepepfceeaaklklklfdeieefcaeabaaaa

eaaaabaaebaaaaaafjaaaaaeegiocaaaaaaaaaaaaeaaaaaafpaaaaadpcbabaaa

aaaaaaaafpaaaaaddcbabaaaabaaaaaaghaaaaaepccabaaaaaaaaaaaabaaaaaa

gfaaaaaddccabaaaabaaaaaagiaaaaacabaaaaaadiaaaaaipcaabaaaaaaaaaaa

fgbfbaaaaaaaaaaaegiocaaaaaaaaaaaabaaaaaadcaaaaakpcaabaaaaaaaaaaa

egiocaaaaaaaaaaaaaaaaaaaagbabaaaaaaaaaaaegaobaaaaaaaaaaadcaaaaak

pcaabaaaaaaaaaaaegiocaaaaaaaaaaaacaaaaaakgbkbaaaaaaaaaaaegaobaaa

aaaaaaaadcaaaaakpccabaaaaaaaaaaaegiocaaaaaaaaaaaadaaaaaapgbpbaaa

aaaaaaaaegaobaaaaaaaaaaadgaaaaafdccabaaaabaaaaaaegbabaaaabaaaaaa

doaaaaab"

}

SubProgram "opengl " {

// Stats: 10 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" "VERTEXLIGHT_ON" }

"!!GLSL#version 120

#ifdef VERTEX

varying vec2 xlv_TEXCOORD0;

void main ()

{

gl_Position = (gl_ModelViewProjectionMatrix * gl_Vertex);

xlv_TEXCOORD0 = gl_MultiTexCoord0.xy;

}

#endif

#ifdef FRAGMENT

uniform vec4 _Color;

uniform vec4 _node_4617;

uniform float _node_1057;

varying vec2 xlv_TEXCOORD0;

void main ()

{

vec4 tmpvar_1;

tmpvar_1.w = 1.0;

tmpvar_1.xyz = mix (_node_4617.xyz, _Color.xyz, vec3(((

sqrt((pow ((xlv_TEXCOORD0.x + 0.2), _node_1057) + pow ((xlv_TEXCOORD0.y + 10.0), _node_1057)))

* 11.0) + -10.0)));

gl_FragData[0] = tmpvar_1;

}

#endif

"

}

SubProgram "d3d9 " {

// Stats: 5 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" "VERTEXLIGHT_ON" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

Matrix 0 [glstate_matrix_mvp]

"vs_3_0

dcl_position v0

dcl_texcoord v1

dcl_position o0

dcl_texcoord o1.xy

dp4 o0.x, c0, v0

dp4 o0.y, c1, v0

dp4 o0.z, c2, v0

dp4 o0.w, c3, v0

mov o1.xy, v1

"

}

SubProgram "d3d11 " {

// Stats: 4 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" "VERTEXLIGHT_ON" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

ConstBuffer "UnityPerDraw" 336

Matrix 0 [glstate_matrix_mvp]

BindCB "UnityPerDraw" 0

"vs_4_0

root12:aaabaaaa

eefiecedaffpdldohodkdgpagjklpapmmnbhcfmlabaaaaaaoeabaaaaadaaaaaa

cmaaaaaaiaaaaaaaniaaaaaaejfdeheoemaaaaaaacaaaaaaaiaaaaaadiaaaaaa

aaaaaaaaaaaaaaaaadaaaaaaaaaaaaaaapapaaaaebaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaabaaaaaaadadaaaafaepfdejfeejepeoaafeeffiedepepfceeaaklkl

epfdeheofaaaaaaaacaaaaaaaiaaaaaadiaaaaaaaaaaaaaaabaaaaaaadaaaaaa

aaaaaaaaapaaaaaaeeaaaaaaaaaaaaaaaaaaaaaaadaaaaaaabaaaaaaadamaaaa

fdfgfpfaepfdejfeejepeoaafeeffiedepepfceeaaklklklfdeieefcaeabaaaa

eaaaabaaebaaaaaafjaaaaaeegiocaaaaaaaaaaaaeaaaaaafpaaaaadpcbabaaa

aaaaaaaafpaaaaaddcbabaaaabaaaaaaghaaaaaepccabaaaaaaaaaaaabaaaaaa

gfaaaaaddccabaaaabaaaaaagiaaaaacabaaaaaadiaaaaaipcaabaaaaaaaaaaa

fgbfbaaaaaaaaaaaegiocaaaaaaaaaaaabaaaaaadcaaaaakpcaabaaaaaaaaaaa

egiocaaaaaaaaaaaaaaaaaaaagbabaaaaaaaaaaaegaobaaaaaaaaaaadcaaaaak

pcaabaaaaaaaaaaaegiocaaaaaaaaaaaacaaaaaakgbkbaaaaaaaaaaaegaobaaa

aaaaaaaadcaaaaakpccabaaaaaaaaaaaegiocaaaaaaaaaaaadaaaaaapgbpbaaa

aaaaaaaaegaobaaaaaaaaaaadgaaaaafdccabaaaabaaaaaaegbabaaaabaaaaaa

doaaaaab"

}

SubProgram "opengl " {

// Stats: 10 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" "VERTEXLIGHT_ON" }

"!!GLSL#version 120

#ifdef VERTEX

varying vec2 xlv_TEXCOORD0;

void main ()

{

gl_Position = (gl_ModelViewProjectionMatrix * gl_Vertex);

xlv_TEXCOORD0 = gl_MultiTexCoord0.xy;

}

#endif

#ifdef FRAGMENT

uniform vec4 _Color;

uniform vec4 _node_4617;

uniform float _node_1057;

varying vec2 xlv_TEXCOORD0;

void main ()

{

vec4 tmpvar_1;

tmpvar_1.w = 1.0;

tmpvar_1.xyz = mix (_node_4617.xyz, _Color.xyz, vec3(((

sqrt((pow ((xlv_TEXCOORD0.x + 0.2), _node_1057) + pow ((xlv_TEXCOORD0.y + 10.0), _node_1057)))

* 11.0) + -10.0)));

gl_FragData[0] = tmpvar_1;

}

#endif

"

}

SubProgram "d3d9 " {

// Stats: 5 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" "VERTEXLIGHT_ON" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

Matrix 0 [glstate_matrix_mvp]

"vs_3_0

dcl_position v0

dcl_texcoord v1

dcl_position o0

dcl_texcoord o1.xy

dp4 o0.x, c0, v0

dp4 o0.y, c1, v0

dp4 o0.z, c2, v0

dp4 o0.w, c3, v0

mov o1.xy, v1

"

}

SubProgram "d3d11 " {

// Stats: 4 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" "VERTEXLIGHT_ON" }

Bind "vertex" Vertex

Bind "texcoord" TexCoord0

ConstBuffer "UnityPerDraw" 336

Matrix 0 [glstate_matrix_mvp]

BindCB "UnityPerDraw" 0

"vs_4_0

root12:aaabaaaa

eefiecedaffpdldohodkdgpagjklpapmmnbhcfmlabaaaaaaoeabaaaaadaaaaaa

cmaaaaaaiaaaaaaaniaaaaaaejfdeheoemaaaaaaacaaaaaaaiaaaaaadiaaaaaa

aaaaaaaaaaaaaaaaadaaaaaaaaaaaaaaapapaaaaebaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaabaaaaaaadadaaaafaepfdejfeejepeoaafeeffiedepepfceeaaklkl

epfdeheofaaaaaaaacaaaaaaaiaaaaaadiaaaaaaaaaaaaaaabaaaaaaadaaaaaa

aaaaaaaaapaaaaaaeeaaaaaaaaaaaaaaaaaaaaaaadaaaaaaabaaaaaaadamaaaa

fdfgfpfaepfdejfeejepeoaafeeffiedepepfceeaaklklklfdeieefcaeabaaaa

eaaaabaaebaaaaaafjaaaaaeegiocaaaaaaaaaaaaeaaaaaafpaaaaadpcbabaaa

aaaaaaaafpaaaaaddcbabaaaabaaaaaaghaaaaaepccabaaaaaaaaaaaabaaaaaa

gfaaaaaddccabaaaabaaaaaagiaaaaacabaaaaaadiaaaaaipcaabaaaaaaaaaaa

fgbfbaaaaaaaaaaaegiocaaaaaaaaaaaabaaaaaadcaaaaakpcaabaaaaaaaaaaa

egiocaaaaaaaaaaaaaaaaaaaagbabaaaaaaaaaaaegaobaaaaaaaaaaadcaaaaak

pcaabaaaaaaaaaaaegiocaaaaaaaaaaaacaaaaaakgbkbaaaaaaaaaaaegaobaaa

aaaaaaaadcaaaaakpccabaaaaaaaaaaaegiocaaaaaaaaaaaadaaaaaapgbpbaaa

aaaaaaaaegaobaaaaaaaaaaadgaaaaafdccabaaaabaaaaaaegbabaaaabaaaaaa

doaaaaab"

}

}

Program "fp" {

SubProgram "opengl " {

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

"!!GLSL"

}

SubProgram "d3d9 " {

// Stats: 15 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

Vector 0 [_Color]

Float 2 [_node_1057]

Vector 1 [_node_4617]

"ps_3_0

def c3, 0.200000003, 10, 11, -10

def c4, 1, 0, 0, 0

dcl_texcoord v0.xy

add r0.xy, c3, v0

pow r1.x, r0.x, c2.x

pow r1.y, r0.y, c2.x

add r0.x, r1.y, r1.x

rsq r0.x, r0.x

rcp r0.x, r0.x

mad r0.x, r0.x, c3.z, c3.w

mov r1.xyz, c1

add r0.yzw, -r1.xxyz, c0.xxyz

mad oC0.xyz, r0.x, r0.yzww, c1

mov oC0.w, c4.x

"

}

SubProgram "d3d11 " {

// Stats: 9 math

Keywords { "DIRECTIONAL" "SHADOWS_OFF" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

ConstBuffer "$Globals" 144

Vector 96 [_Color]

Vector 112 [_node_4617]

Float 128 [_node_1057]

BindCB "$Globals" 0

"ps_4_0

root12:aaabaaaa

eefiecedbabakleflijnjmcmgkldbcbdippjnhehabaaaaaaceacaaaaadaaaaaa

cmaaaaaaieaaaaaaliaaaaaaejfdeheofaaaaaaaacaaaaaaaiaaaaaadiaaaaaa

aaaaaaaaabaaaaaaadaaaaaaaaaaaaaaapaaaaaaeeaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaabaaaaaaadadaaaafdfgfpfaepfdejfeejepeoaafeeffiedepepfcee

aaklklklepfdeheocmaaaaaaabaaaaaaaiaaaaaacaaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaaaaaaaaaapaaaaaafdfgfpfegbhcghgfheaaklklfdeieefcgeabaaaa

eaaaaaaafjaaaaaafjaaaaaeegiocaaaaaaaaaaaajaaaaaagcbaaaaddcbabaaa

abaaaaaagfaaaaadpccabaaaaaaaaaaagiaaaaacabaaaaaaaaaaaaakdcaabaaa

aaaaaaaaegbabaaaabaaaaaaaceaaaaamnmmemdoaaaacaebaaaaaaaaaaaaaaaa

cpaaaaafdcaabaaaaaaaaaaaegaabaaaaaaaaaaadiaaaaaidcaabaaaaaaaaaaa

egaabaaaaaaaaaaaagiacaaaaaaaaaaaaiaaaaaabjaaaaafdcaabaaaaaaaaaaa

egaabaaaaaaaaaaaaaaaaaahbcaabaaaaaaaaaaabkaabaaaaaaaaaaaakaabaaa

aaaaaaaaelaaaaafbcaabaaaaaaaaaaaakaabaaaaaaaaaaadcaaaaajbcaabaaa

aaaaaaaaakaabaaaaaaaaaaaabeaaaaaaaaadaebabeaaaaaaaaacambaaaaaaak

ocaabaaaaaaaaaaaagijcaaaaaaaaaaaagaaaaaaagijcaiaebaaaaaaaaaaaaaa

ahaaaaaadcaaaaakhccabaaaaaaaaaaaagaabaaaaaaaaaaajgahbaaaaaaaaaaa

egiccaaaaaaaaaaaahaaaaaadgaaaaaficcabaaaaaaaaaaaabeaaaaaaaaaiadp

doaaaaab"

}

SubProgram "opengl " {

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

"!!GLSL"

}

SubProgram "d3d9 " {

// Stats: 15 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

Vector 0 [_Color]

Float 2 [_node_1057]

Vector 1 [_node_4617]

"ps_3_0

def c3, 0.200000003, 10, 11, -10

def c4, 1, 0, 0, 0

dcl_texcoord v0.xy

add r0.xy, c3, v0

pow r1.x, r0.x, c2.x

pow r1.y, r0.y, c2.x

add r0.x, r1.y, r1.x

rsq r0.x, r0.x

rcp r0.x, r0.x

mad r0.x, r0.x, c3.z, c3.w

mov r1.xyz, c1

add r0.yzw, -r1.xxyz, c0.xxyz

mad oC0.xyz, r0.x, r0.yzww, c1

mov oC0.w, c4.x

"

}

SubProgram "d3d11 " {

// Stats: 9 math

Keywords { "DIRECTIONAL" "SHADOWS_SCREEN" "LIGHTMAP_OFF" "DIRLIGHTMAP_OFF" "DYNAMICLIGHTMAP_OFF" }

ConstBuffer "$Globals" 144

Vector 96 [_Color]

Vector 112 [_node_4617]

Float 128 [_node_1057]

BindCB "$Globals" 0

"ps_4_0

root12:aaabaaaa

eefiecedbabakleflijnjmcmgkldbcbdippjnhehabaaaaaaceacaaaaadaaaaaa

cmaaaaaaieaaaaaaliaaaaaaejfdeheofaaaaaaaacaaaaaaaiaaaaaadiaaaaaa

aaaaaaaaabaaaaaaadaaaaaaaaaaaaaaapaaaaaaeeaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaabaaaaaaadadaaaafdfgfpfaepfdejfeejepeoaafeeffiedepepfcee

aaklklklepfdeheocmaaaaaaabaaaaaaaiaaaaaacaaaaaaaaaaaaaaaaaaaaaaa

adaaaaaaaaaaaaaaapaaaaaafdfgfpfegbhcghgfheaaklklfdeieefcgeabaaaa

eaaaaaaafjaaaaaafjaaaaaeegiocaaaaaaaaaaaajaaaaaagcbaaaaddcbabaaa

abaaaaaagfaaaaadpccabaaaaaaaaaaagiaaaaacabaaaaaaaaaaaaakdcaabaaa

aaaaaaaaegbabaaaabaaaaaaaceaaaaamnmmemdoaaaacaebaaaaaaaaaaaaaaaa

cpaaaaafdcaabaaaaaaaaaaaegaabaaaaaaaaaaadiaaaaaidcaabaaaaaaaaaaa

egaabaaaaaaaaaaaagiacaaaaaaaaaaaaiaaaaaabjaaaaafdcaabaaaaaaaaaaa

egaabaaaaaaaaaaaaaaaaaahbcaabaaaaaaaaaaabkaabaaaaaaaaaaaakaabaaa

aaaaaaaaelaaaaafbcaabaaaaaaaaaaaakaabaaaaaaaaaaadcaaaaajbcaabaaa

aaaaaaaaakaabaaaaaaaaaaaabeaaaaaaaaadaebabeaaaaaaaaacambaaaaaaak

ocaabaaaaaaaaaaaagijcaaaaaaaaaaaagaaaaaaagijcaiaebaaaaaaaaaaaaaa

ahaaaaaadcaaaaakhccabaaaaaaaaaaaagaabaaaaaaaaaaajgahbaaaaaaaaaaa

egiccaaaaaaaaaaaahaaaaaadgaaaaaficcabaaaaaaaaaaaabeaaaaaaaaaiadp

doaaaaab"

}

}

}

}

Fallback "Diffuse"

}
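
Stripped of the platform boilerplate, the only interesting maths in the dump is the GLSL fragment program, repeated unchanged in each OpenGL variant. A rough Python transcription is sketched below to make the formula easier to read. `generated_blend` and `mix` are names chosen here for illustration (`mix` mirrors the GLSL built-in), and the default exponent is the `_node_1057` default of 0.2 from the Properties block; the material may well have used a different value.

```python
import math

# Default colours taken from the Properties block of the compiled shader.
NODE_4617 = (0.433823, 0.121051, 0.0287089)
COLOR = (0.727941, 0.116656, 0.0856401)


def mix(a, b, t):
    """Linear blend of two colours, like GLSL mix(a, b, t)."""
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def generated_blend(u, v, exponent=0.2):
    """Blend factor from the fragment program:
    sqrt(pow(u + 0.2, e) + pow(v + 10.0, e)) * 11.0 - 10.0
    """
    total = (u + 0.2) ** exponent + (v + 10.0) ** exponent
    return math.sqrt(total) * 11.0 - 10.0


def fragment_colour(u, v, exponent=0.2):
    """RGB colour for the UV coordinate (u, v); alpha is always 1.0 in the shader."""
    return mix(NODE_4617, COLOR, generated_blend(u, v, exponent))
```

Read this way, the offsets are `+0.2` and `+10.0` rather than `-0.5`, and the exponent is a tweakable property rather than a fixed 2, so the value being blended on is not the Euclidean distance from the middle of the texture.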